- Google’s SGE integrates LLMs like Gemini into Search to generate contextual, citation-backed overviews above traditional results.

- SGE shifts user behavior from link navigation to answer synthesis, challenging SEO, publisher visibility, and ad monetization.

- Google applies safety layers, source citations, and AI principles to reduce hallucinations, bias, and misinformation in generative answers.

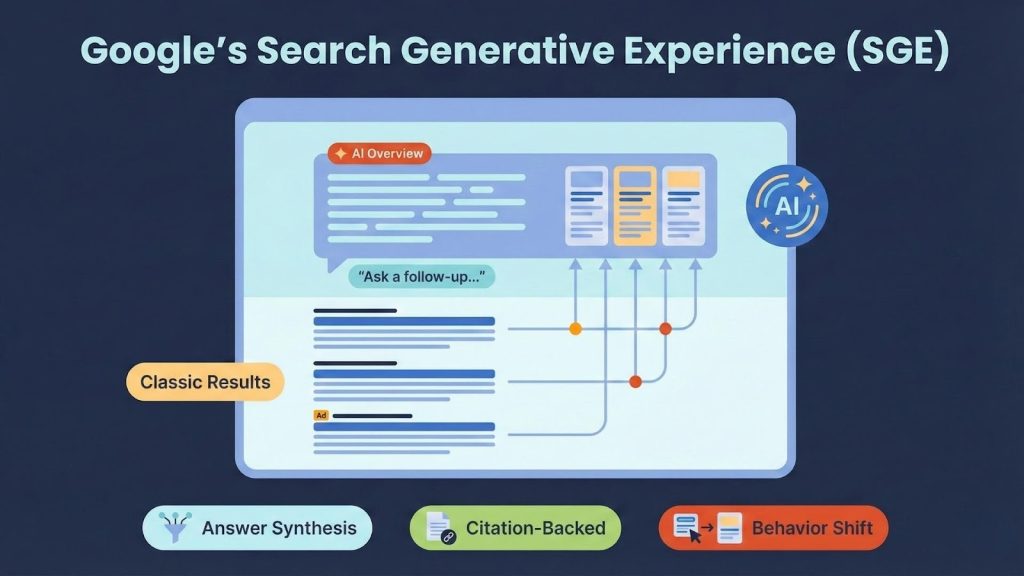

When Google began integrating large language models into its core search product through the Search Generative Experience (SGE), it wasn’t just shipping a new feature; it was rewriting the rules of how people find, interpret, and interact with information online. Rather than presenting users with a ranked list of links, SGE synthesizes knowledge across sources to generate contextual overviews, citations included. It’s a fundamental shift: from directing users toward content to delivering answers in-place, powered by models like PaLM 2 and Gemini, all running atop Google’s traditional search infrastructure.

This evolution has profound implications for how we approach SEO, content strategy, and marketing in general. As the line between search engine and AI assistant blurs, brands must adapt to remain discoverable, trustworthy, and contextually relevant. That requires much tighter alignment between content creation, search intent, and visibility goals, even when a brand does not own the top result slot. In this in-depth analysis, I break down how SGE works, why it matters, and what it means for businesses, marketers, and the future of search as a whole.

Understanding Google’s Search Generative Experience (SGE)

What Is a Search Generative Experience and Why It Matters

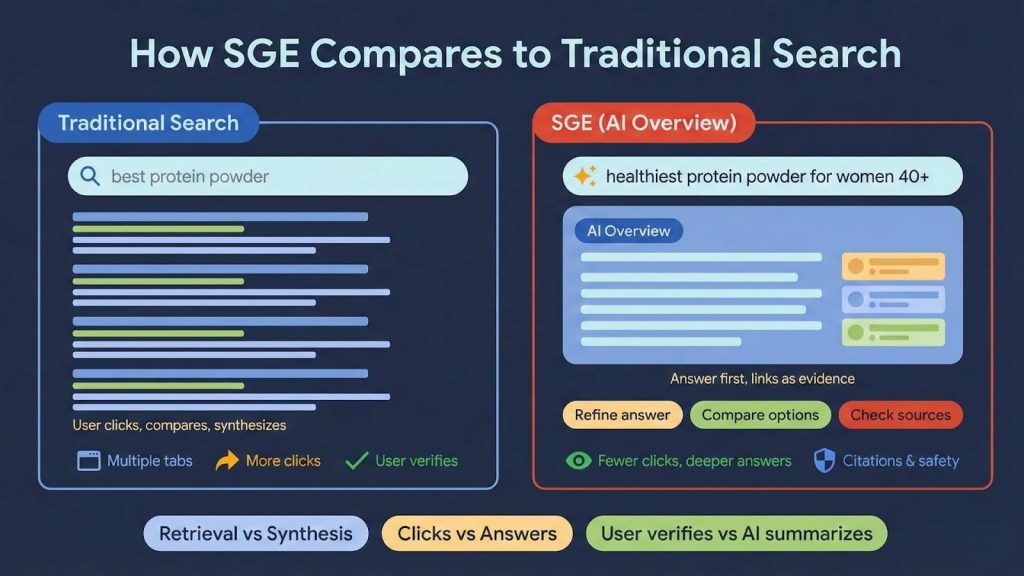

When Google launched the Search Generative Experience (SGE) in 2023, I saw it as more than just a feature; it marked a redefinition of what search engines are expected to do. Traditionally, search was a retrieval task. You entered keywords, and Google returned a list of links. With SGE, Google made a bold move: it embedded a generative large language model (LLM) into its core search product.

SGE doesn’t just fetch links; it generates AI-powered overviews that synthesize insights from multiple sources in Google’s index. It contextualizes those results and anticipates follow-up questions. This changes the nature of search: users don’t just search for information, they start conversations with it.

The questions people ask are evolving, too. Instead of “best protein powders,” users now search for “what are the healthiest protein powders for women over 40 with iron deficiency?” SGE is built to handle that complexity, and it’s reshaping user expectations accordingly.

The Motivation Behind SGE

SGE wasn’t built in a vacuum. The momentum behind tools like ChatGPT and Bing Chat in 2023 forced Google to show that it wasn’t just keeping up, it was redefining the game. But the company approached it differently. Rather than launch a chatbot and staple it next to search, Google wove the generative model into the search results page itself.

This tight integration with Google’s indexing, ranking, and safety infrastructure gives SGE unique strengths: freshness, factual grounding, and transparency through citations. It wasn’t just a technological leap; it was a product philosophy shift grounded in two decades of Google’s search engineering.

The Technical Foundations Behind Search Generative Experiences

Which Models Power It?

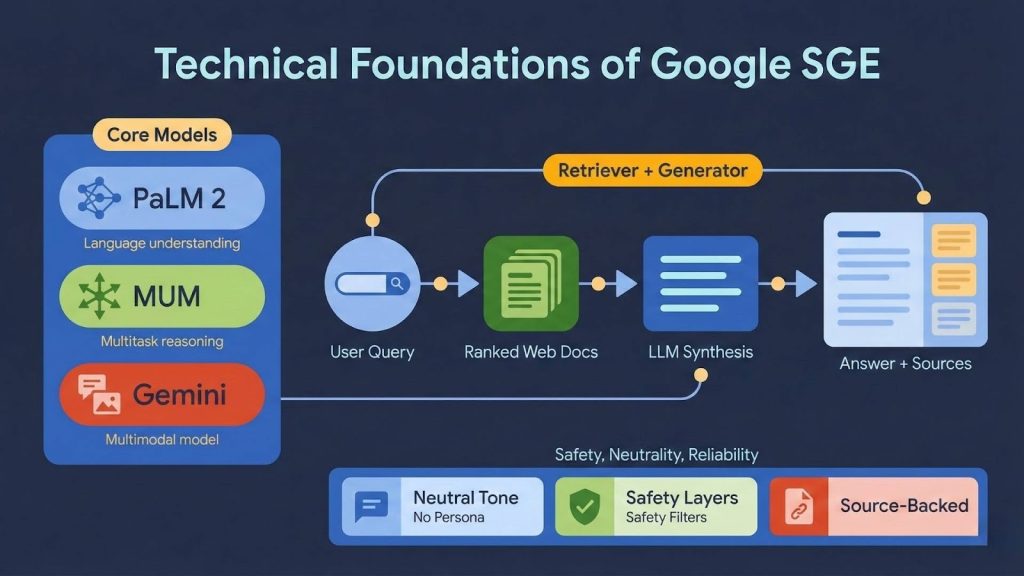

At launch, SGE was powered by PaLM 2–based models and other search-tuned generative systems. These models were tuned to work alongside Google’s traditional search stack. Later, SGE adopted Gemini, Google’s next-generation multimodal model that handles text, images, and code more fluidly than its predecessors.

This multi-model setup gives SGE a competitive edge. PaLM 2’s strong language understanding is balanced by MUM’s multi-task capabilities (e.g., search + translation + reasoning), while Gemini adds image handling and multi-modal context.

How Is It Integrated?

Unlike standalone AI systems, SGE operates within the constraints and advantages of Google’s core search architecture. That means every generative response is grounded in real-time index data, web pages, and quality signals. It’s not pulling solely from model memory; it’s citing actual sources.

Google uses an internal retriever-augmenter architecture. The user’s query is first interpreted by the LLM, then paired with ranked web documents, and the generative model crafts an answer based on those. Importantly, responses include citations, which can be expanded to show supporting sources, not the exact origin of each sentence.
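The retrieve-then-generate flow described above can be sketched in a few lines. This is a toy illustration, not Google’s implementation: the `Document` type, the keyword-overlap scorer, and the `summarize` stand-in for a language model are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Document:
    url: str
    text: str


def score(query: str, doc: Document) -> int:
    """Toy relevance score: number of distinct query words found in the document."""
    doc_words = set(doc.text.lower().split())
    return sum(1 for word in set(query.lower().split()) if word in doc_words)


def retrieve(query: str, index: list[Document], k: int = 2) -> list[Document]:
    """Return the top-k documents that share at least one word with the query."""
    ranked = sorted(index, key=lambda d: score(query, d), reverse=True)
    return [d for d in ranked if score(query, d) > 0][:k]


def summarize(query: str, sources: list[Document]) -> str:
    """Hypothetical stand-in for the generative model: stitches source text together."""
    return " ".join(d.text for d in sources)


def answer(query: str, index: list[Document]) -> dict:
    """Ground the answer in retrieved documents and attach them as citations."""
    sources = retrieve(query, index)
    if not sources:
        # Nothing to ground on: return no overview rather than rely on model memory.
        return {"overview": None, "citations": []}
    return {
        "overview": summarize(query, sources),
        "citations": [d.url for d in sources],
    }


index = [
    Document("https://example.com/packs", "Lightweight hiking backpacks under $150 compared."),
    Document("https://example.com/boots", "Winter hiking boots buying guide."),
    Document("https://example.com/pasta", "Weeknight pasta recipes."),
]
result = answer("hiking backpacks", index)
print(result["citations"])
```

Note that the citations are attached to the answer as a whole, which mirrors the point above: they are supporting sources, not a sentence-by-sentence provenance trail.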

Responsible Training and Safety Constraints

SGE was not trained to be a chatbot. In fact, Google intentionally fine-tuned its models to avoid generating content in the first person or reflecting any persona. It avoids emotional tone, opinion, or bias by design. The aim is to provide neutral, factual, source-backed summaries, rather than engaging in personality-driven interactions.

The models also underwent adversarial testing and red-teaming focused on issues like misinformation, bias, harmful content, and hallucinations. That continues today as part of Google’s ongoing evaluation loop, aligned with their publicly published AI Principles.

Core Features and Functional Capabilities

AI Overviews and Summaries

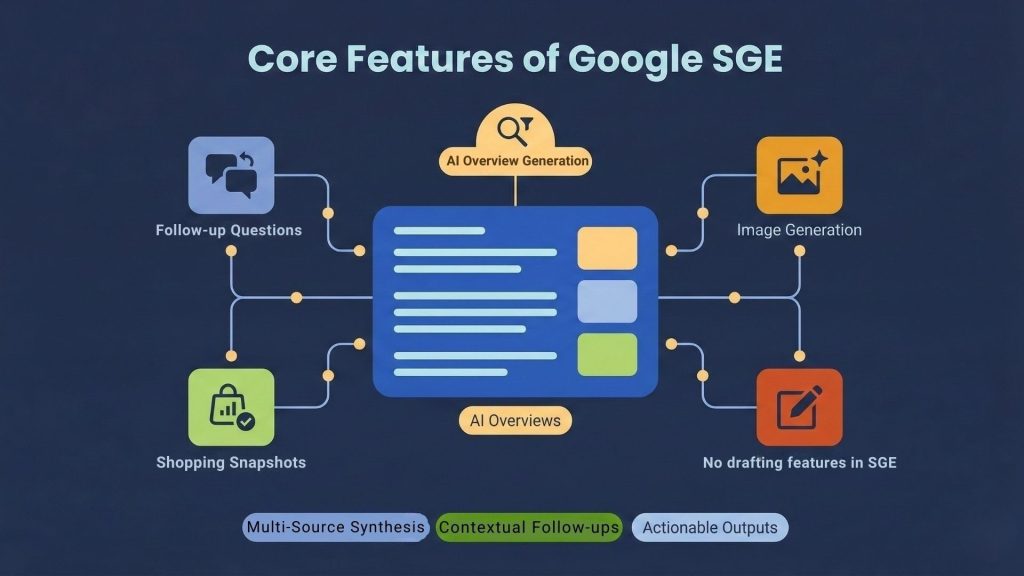

The centerpiece of SGE is the AI Overview: a multi-paragraph generative summary that appears at the top of the results page for certain queries. These summaries extract and combine insights from diverse web sources, which are cited inline or alongside.

This isn’t a “featured snippet” or copy-pasted paragraph. It’s a generated synthesis, often including comparisons, pros and cons, or decision-support phrasing, like a research assistant compiling a literature review.

Conversational Refinement

SGE doesn’t stop at a single answer. Users can tap suggested follow-up questions or type their own, and the system keeps the context of the original query. This creates a pseudo-conversational search experience without forcing users into a separate chatbot interface.

Contextual understanding is key here. Ask “best hiking backpacks under $150,” then follow with “which of those is best for winter hikes?” SGE understands the referent and provides a refined answer.

Multimodal Generation

Google added support for experimental image generation in Search Labs. For users over 18 in the US, queries like “draw a cat dressed as an astronaut” will return up to four AI-generated images. These come with watermarks and metadata indicating they were AI-created, a move that reflects Google’s commitment to transparency.

Shopping Intelligence

SGE excels in product discovery. Its shopping snapshots integrate specs, prices, and reviews, pulling from Google’s vast Shopping Graph. The AI doesn’t just list products; it breaks down what features matter and why, creating a buying guide experience within the search results.

Draft Generation

Another newer capability is content generation, although draft writing is provided by Gemini in Workspace rather than by SGE itself. Ask Gemini to draft an email to a contractor about converting your garage into an office, and it will do so. You can export the draft to Gmail or Docs, and even adjust tone and length.

User Experience and Interface Design

The Front-End of Generative Search

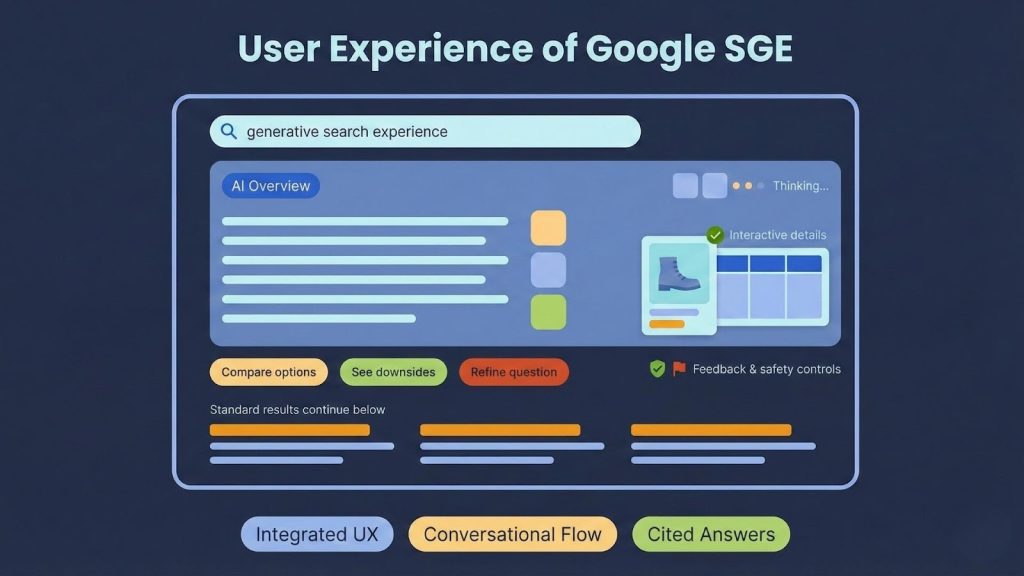

When I first used SGE, I noticed how deliberately it avoids looking like a chatbot. Instead of pulling users into a separate AI mode, it integrates seamlessly into the Google Search results page. This was a smart choice.

At the top of the results, the AI-generated Overview box appears in a shaded container, visually distinct from both ads and organic links. On the desktop, citations often appear as tiles or source cards to the side. On mobile, they’re stacked below the summary.

Critically, this doesn’t replace the traditional results. Scroll down, and you’ll still find the ten blue links, featured snippets, People Also Ask boxes, ads, and everything else. What’s changed is that now, the top of the page often includes a synthesized briefing on your query, like a personal analyst working behind the scenes.

Conversational Continuity

One of the most compelling UX innovations in SGE is its conversational follow-ups. After the AI overview, Google offers clickable suggestions like “Compare with…” or “What are the downsides?” You can also type your own follow-up. The system preserves context from your previous query, making the interaction feel fluid and coherent.

This isn’t full-blown chat; there’s no persona, no memory across sessions. But within a single search journey, you can refine and iterate in a way that mimics human conversation more than classic search ever could.

Visual and Interactive Elements

SGE isn’t limited to plain text. Depending on the query, it pulls in images, reviews, price sliders, interactive cards, and even product thumbnails. If you’re shopping for hiking boots, you may see an AI-generated explainer on key specs, followed by dynamic product listings with filters.

In some experimental modes, SGE may format information into structured lists or cards, but it does not generate diagrams. These aren’t static widgets; they’re constructed dynamically based on what the LLM thinks is most helpful.

Latency and Perceived Performance

The biggest UX trade-off is latency. Generating these summaries takes time: most responses load in 2–5 seconds, depending on complexity. To mitigate this, Google uses shimmering placeholders to signal that the system is “thinking.”

That said, I’ve seen users abandon the snapshot mid-load if they already find what they need in the traditional results. Google knows this. It continues tuning response time, especially after the Gemini integration, which reduced latency significantly, and that work highlights how automation and AI-driven optimization have become foundational to search performance.

Safeguards in the Interface

SGE doesn’t trigger for every query. It’s disabled for ultra-sensitive topics (e.g., self-harm, financial planning, explicit health conditions) and sometimes for basic queries where a direct answer already exists.

There’s also a feedback button next to each answer. If you spot an error or a misleading claim, you can flag it immediately. This reinforces the idea that the AI is a collaborative assistant, not an oracle.

Search Generative Experiences vs Traditional Search

From Retrieval to Synthesis

The classic search engine is a retrieval machine. You ask a question, and it returns a ranked list of hyperlinks. You, the user, do the synthesis. You open tabs, compare info, and double-check sources.

SGE flips that model. It gives you the synthesis first, complete with reasoning and citations. Instead of asking, “Which sources should I read?” you’re now evaluating, “Does this summary reflect what the sources are saying?”

This rebalances the search-user relationship. Users get answers faster. But it also raises new responsibilities for Google: accuracy, neutrality, and transparency become more than ranking challenges; they’re editorial ones.

Engagement Shifts

With traditional search, engagement is typically measured by the number of clicks. More relevant results lead to more satisfied users and higher ad revenue. With SGE, success may look like fewer clicks but better answers. That’s a paradigm shift, and a controversial one.

Some publishers argue that AI overviews reduce their traffic. Google counters that links featured in SGE sometimes get more clicks than traditional listings. Both may be right. What’s clear is that the nature of search engagement is changing. Instead of being a referral engine, Google is now also a content synthesizer.

Trust and Verification

Classic search encourages verification by design. You scan URLs, skim headlines, read snippets, and decide what to trust. With SGE, the first impression is AI’s voice. If users trust it too easily, that could be dangerous.

Google tries to mitigate this with source attribution, expandable “corroboration” views, and factual language. But the reality is, when the AI speaks first, it shapes perception. The burden of critical thinking shifts slightly away from the user.

This is why I believe it’s essential that SGE continues to link out transparently and avoids editorializing. The system must remain a summary engine, not a filter for what users “should” know.

When SGE Excels, and When It Doesn’t

SGE shines when queries are broad, exploratory, or comparative:

- “Best electric cars under $40K for winter driving.”

- “How to make studying more engaging for teens with ADHD.”

- “Meal plans for families with diabetes and gluten intolerance.”

These are multi-step, multi-source questions that traditional search handles poorly. SGE does it in one shot.

But for navigational queries or time-sensitive facts, classic search still wins. If I search “Stanford academic calendar,” I don’t need a summary; I need a link. And I need it instantly. Google knows this and tunes SGE to stay quiet when appropriate.

Strategic and Business Implications

A Double-Edged Sword for Google

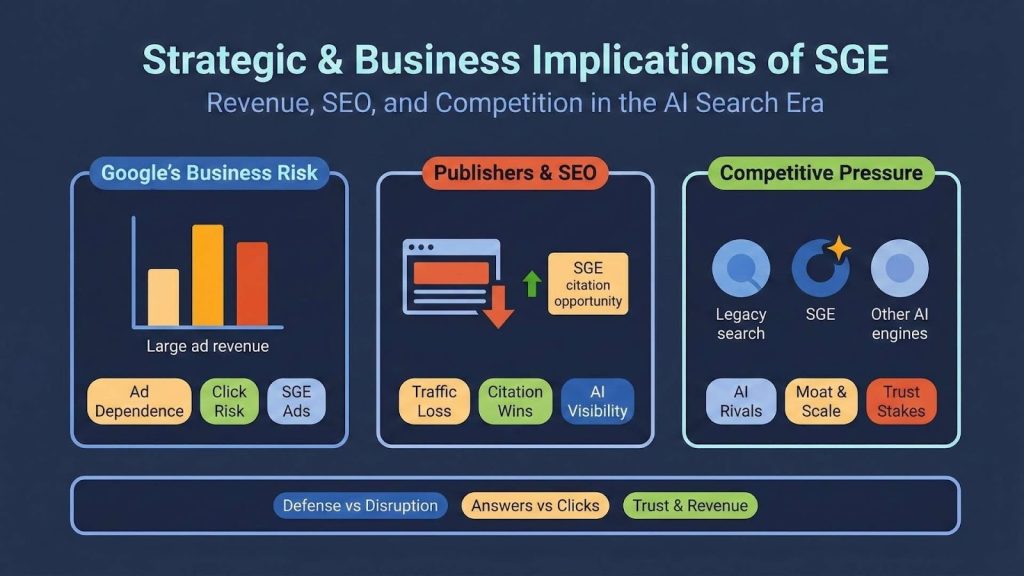

From a business standpoint, SGE is both a defense mechanism and an offensive play. On one hand, it safeguards Google’s core product, Search, from erosion by generative rivals like ChatGPT, Bing, and Perplexity. On the other hand, it introduces new capabilities that Google can monetize in ways it never could with static links.

But the stakes are high. Search is Google’s cash cow. In 2022, Google generated roughly $224.47 billion in advertising revenue, according to Alphabet’s SEC-filed 10-K. Any shift in the mechanics of user engagement risks that model. If generative answers even slightly reduce clicks on ads, the downstream effect could be enormous.

So far, Google’s approach has been conservative. SGE is incremental, not disruptive. It doesn’t replace traditional search but enhances it. Ads still appear prominently. In fact, Google has begun experimenting with ads inside SGE answers, not as banners, but contextually integrated cards and links. This is delicate territory. If done wrong, it undermines trust. But if done right, it could boost ad performance by embedding products directly into high-intent, high-context answers.

SEO and Publisher Relations

SGE has sent shockwaves through the SEO industry. Publishers and content creators are understandably concerned. If users get what they need from the AI snapshot, why would they click through?

Independent studies (Authoritas, Pew, Similarweb) report traffic losses ranging from ~30–80% for some queries, with claims of up to around 90% in the most extreme cases. The variation depends heavily on the topic, content quality, and the prominence of a site in the overview.

To its credit, Google does cite sources. In many cases, being cited in an SGE snapshot can drive more engagement than a regular blue link. But this isn’t guaranteed. And because the generative system does the synthesis, publishers no longer control how their content is framed, which is why optimizing for answer synthesis and AI-mediated visibility is becoming as important as traditional ranking signals.

Google has tried to address this by holding workshops, publishing documentation, and engaging with publishers. But the reality is clear: the economics of visibility are changing, and those who relied on organic search traffic need to adapt.

Competitive Pressure

SGE is also a response to competitive pressure. Microsoft unveiled its AI-powered Bing, integrating an OpenAI model, on February 7, 2023. While Bing’s market share didn’t skyrocket, it forced Google to act. Meanwhile, new entrants like Perplexity.ai offer generative search interfaces that are sleek, fast, and citation-heavy.

What gives Google the edge is infrastructure and distribution. It already serves billions of users across Search, Android, Chrome, and Assistant. Integrating generative features across these touchpoints is a matter of orchestration, not invention.

But the moat is not invincible. If Google fails to evolve SGE responsibly, by protecting quality, trust, and transparency, it leaves space for more agile competitors to chip away at user trust.

How to Optimize Content for Search Generative Experiences

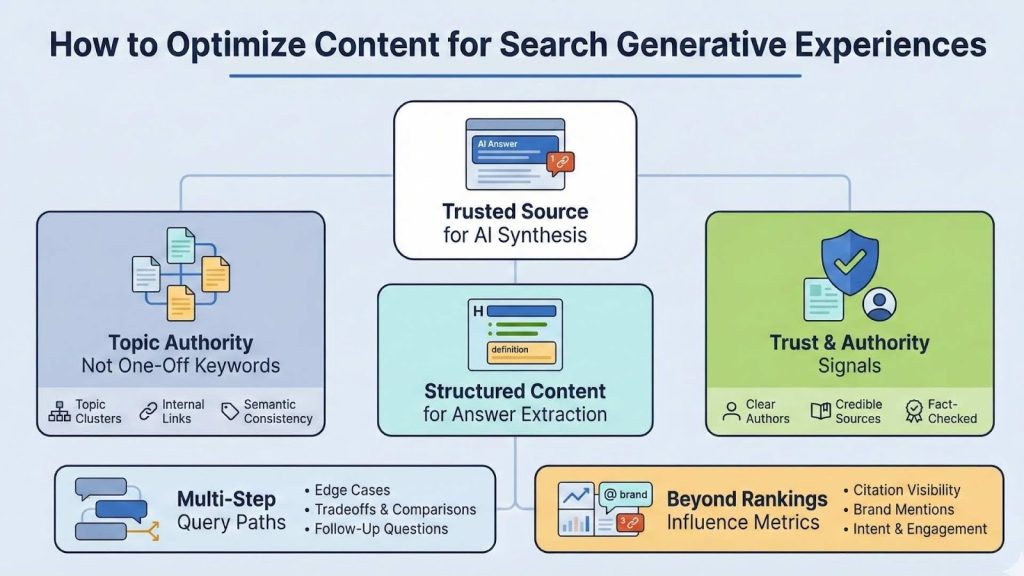

Search Generative Experiences prioritize synthesized understanding over traditional retrieval. Optimization is no longer about ranking a single page. It is about becoming a trusted source that AI systems can confidently summarize, cite, and recombine across multiple queries.

Effective optimization focuses on clarity, authority, and structure rather than keyword density.

Build Topic Authority, Not Just Keyword Coverage

Search Generative Experiences pull from domains that demonstrate sustained expertise across a subject, not isolated pages targeting individual terms.

Content strategies should center on owning a topic through comprehensive coverage. Pages should work together to explain the concept, its mechanics, implications, and practical applications.

Sites with strong internal cohesion and consistent terminology are easier for AI systems to understand and more likely to be selected for synthesis.

Key practices include publishing topic clusters, reinforcing internal links, and maintaining semantic consistency across related content.

Structure Content for AI Synthesis and Answer Extraction

Generative search systems evaluate content at the section level, not just the page level. Clear structure directly improves how accurately content can be summarized.

Each section should serve a specific explanatory purpose. Headings should mirror how users phrase questions, and paragraphs should focus on a single idea.

Definitions, comparisons, and summaries should be explicit rather than implied. The goal is to make content easier to interpret without losing nuance.

Well-structured content benefits both human readers and generative systems.
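As a rough illustration of what section-level evaluation implies, the sketch below splits a page into heading-and-body chunks so each can be reviewed on its own. It assumes Markdown-style `## ` headings and a hypothetical `split_sections` helper; nothing here reflects how Google actually segments pages.

```python
def split_sections(markdown: str) -> list[dict]:
    """Split Markdown text into sections keyed by their '## ' headings."""
    sections = []
    current = None
    for line in markdown.splitlines():
        if line.startswith("## "):
            # A new heading starts a new section.
            current = {"heading": line[3:].strip(), "body": []}
            sections.append(current)
        elif current is not None and line.strip():
            # Non-empty lines belong to the most recent heading.
            current["body"].append(line.strip())
    return [
        {"heading": s["heading"], "body": " ".join(s["body"])} for s in sections
    ]


page = """## What is SGE?
SGE is Google's generative search experience.

## How does SGE cite sources?
It shows expandable source cards next to the overview.
"""

sections = split_sections(page)
for section in sections:
    # Flag whether the heading is phrased the way a user would ask it.
    is_question = section["heading"].endswith("?")
    print(section["heading"], "| question-style:", is_question)
```

A check like this makes it easy to spot sections with vague headings or bodies that cover more than one idea.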

Strengthen Trust and Authority Signals

Search Generative Experiences rely heavily on trust. AI systems must decide which sources are safe to cite and reflect accurately.

Authority is reinforced through depth, accuracy, and consistency. Trust is supported by neutral language, fact-based explanations, and alignment with established knowledge.

Clear authorship, credible references, and avoidance of speculative claims all increase the likelihood of inclusion in AI-generated answers.

This makes editorial discipline and quality control critical at scale.

Write for Conversational, Multi-Step Queries

Search Generative Experiences are designed for complex, conversational queries that involve constraints, comparisons, or follow-up questions.

Content should anticipate how questions naturally expand and guide the reader through those paths. This includes addressing edge cases, tradeoffs, and related concerns.

When content aligns with real user reasoning, it becomes more useful for both people and AI systems engaged in multi-step synthesis.

Measure Impact Beyond Traditional Rankings

Visibility in Search Generative Experiences does not always correlate with top rankings. Content may influence AI-generated summaries without appearing as the highest-ranked result.

Evaluation should include qualitative SERP analysis, brand mentions, citation visibility, and changes in audience intent and engagement.

Optimizing for generative search requires broader success metrics that reflect influence, not just clicks.
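One way to make “citation visibility” concrete is to log, for a set of tracked queries, whether an AI Overview appeared and whether your domain was cited in it. The sketch below assumes such observations were collected by hand; the `Observation` fields and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    query: str
    overview_shown: bool  # did an AI Overview appear for this query?
    cited: bool           # was our domain among its cited sources?
    organic_rank: int     # our position in the classic results


def citation_rate(observations: list[Observation]) -> float:
    """Share of overview-triggering queries in which our domain was cited."""
    with_overview = [o for o in observations if o.overview_shown]
    if not with_overview:
        return 0.0
    return sum(o.cited for o in with_overview) / len(with_overview)


log = [
    Observation("best hiking backpacks under $150", True, True, 4),
    Observation("winter hiking backpack", True, False, 1),
    Observation("stanford academic calendar", False, False, 2),
    Observation("how to size a backpack", True, True, 6),
]

rate = citation_rate(log)
# Queries where we were cited without holding the top organic position.
cited_below_top = [o.query for o in log if o.cited and o.organic_rank > 1]
print(f"citation rate: {rate:.2f}", cited_below_top)
```

Tracking the second list over time shows where content influences summaries without ranking first, which a rank report alone would miss.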

Adoption, Usage, and Performance Metrics

Gradual Rollout Strategy

SGE didn’t launch globally overnight. Google started with Search Labs in May 2023, offering early access to users in the U.S. By November, it expanded to 120+ countries and over 40 languages.

In 2024, SGE became the default experience for logged-in users in the U.S. under the name AI Overviews. That marked a turning point. It wasn’t a beta anymore; it was the new baseline. As of mid-2025, according to Google’s own data, AI Overviews reach 2 billion monthly users worldwide.

This measured rollout wasn’t just about scaling infrastructure. It was also about collecting feedback, testing edge cases, and refining safety mechanisms before broader exposure.

Who’s Using It, and How

Internal telemetry and user surveys suggest that younger demographics are more receptive to SGE. They see generative answers as a natural evolution of search, not a gimmick. Adoption is highest among users conducting exploratory queries, product research, health explanations, educational topics, and travel planning.

Conversely, power users who rely on precise, high-trust answers (developers, academics, and analysts) still lean on traditional search, often scrolling past the snapshot.

Interestingly, Google found that SGE increased engagement for shopping queries, in part due to the interactive cards and comparison tables it generates. Users were more likely to follow links when the AI summarized why a product stood out.

Accuracy, Trust, and Safety

In its earliest phase, SGE struggled with speed and factual consistency. Answers took several seconds to load, and some included minor hallucinations. The integration of Gemini models in late 2023 addressed both issues. Search generative experiences powered by Gemini showed up to a 40% latency reduction, according to Google’s statements.

Still, the trust question remains at the forefront. That’s why Google enforces strong safety policies: SGE won’t trigger for sensitive topics (self-harm, violence, adult content, or high-risk health queries), and every answer comes with an expandable citation mechanism.

Google also fine-tuned the model’s tone to avoid first-person, emotional, or subjective phrasing. You’ll never see “I think” or “In my opinion” in an SGE response. This isn’t just a stylistic choice; it’s a philosophical stance on editorial neutrality.

The Challenges and Limitations of Search Generative Experiences

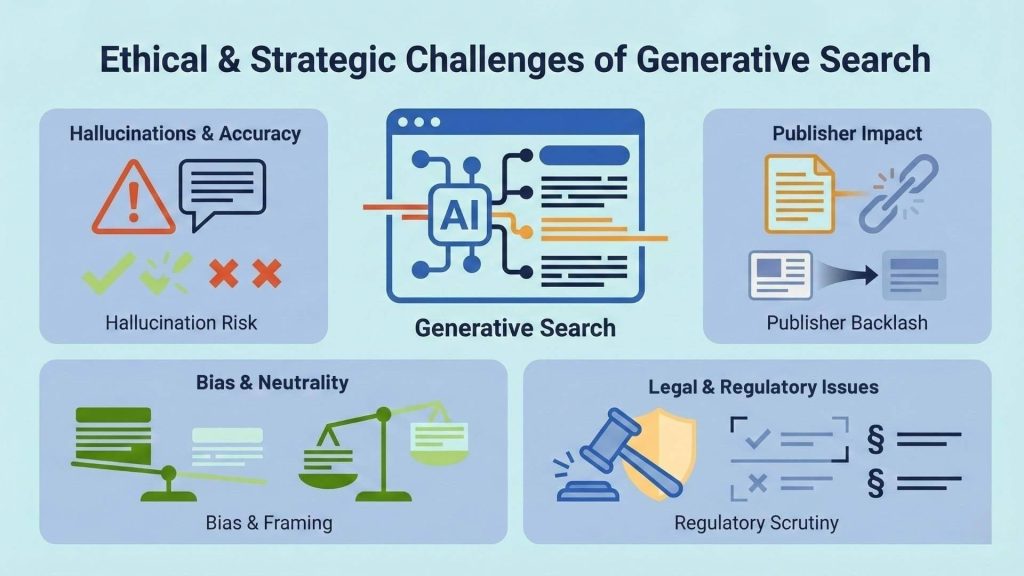

Hallucinations and Misinformation Risks

Every LLM-based system carries the risk of hallucination, and SGE is no exception. Although Google has trained its models to be grounded in verified, index-backed information, there are still instances where SGE generates confidently incorrect answers.

Some examples have gone viral, like suggesting glue in pizza or recommending unsafe health remedies. While these incidents are rare and often edge cases, they’re a reputational risk Google cannot afford. More importantly, they reflect an unresolved tension: the power of synthesis versus the risk of distortion.

To counteract this, Google relies on automated safety classifiers, manual evaluation teams, and red-teaming frameworks. But in a system that generates billions of responses, zero-error performance remains unattainable.

The Publisher Backlash

Perhaps the most vocal criticism of SGE has come from publishers and content creators. The argument is straightforward: Google is using their content to train models and generate summaries that directly compete with the source, without providing adequate compensation or attribution.

Even though SGE includes links to sources, the value chain has shifted. Before, users had to click through to learn. Now, they can get most of what they need from the summary. This undermines ad-supported journalism, blogs, educational sites, and even product review platforms.

Some publishers in Europe have taken legal action, arguing that this constitutes unfair competition and potential copyright infringement. Others are negotiating for licensing deals, akin to what OpenAI has struck with certain media companies.

Google, for its part, claims SGE drives qualified traffic to high-quality sources and has committed to expanding support for structured markup and publisher visibility in the generative experience.

Editorial Neutrality and Bias

Despite efforts to make SGE neutral, bias can still creep in. Language models are trained on large web corpora, and those reflect societal imbalances. Even subtle phrasing, what’s emphasized, omitted, or framed as pros vs. cons, can encode value judgments.

For sensitive queries (e.g., political, cultural, historical), Google often disables SGE entirely or displays traditional results. But these guardrails can be overly cautious or inconsistently applied.

Google’s stance has been to avoid persona-based replies and emotional tone. Unlike ChatGPT, SGE won’t “have an opinion” or speak in first person. That’s good design, but it doesn’t eliminate the deeper issue of latent narrative bias.

Legal and Regulatory Scrutiny

SGE is now on regulators’ radar. In Europe, Google’s parent company, Alphabet, has been designated a “gatekeeper” under the Digital Markets Act (DMA), with special obligations around fairness and interoperability. If SGE is seen as favoring Google’s own properties or suppressing competitors, legal consequences could follow.

In the U.S., the FTC has signaled its interest in AI transparency and consumer protection, while states like California have proposed laws requiring companies to clearly disclose AI-generated content.

Privacy is another dimension. While SGE does not access personal data unless explicitly provided by the user, its integration with Google Accounts raises concerns about personalization at scale, especially in commercial contexts.

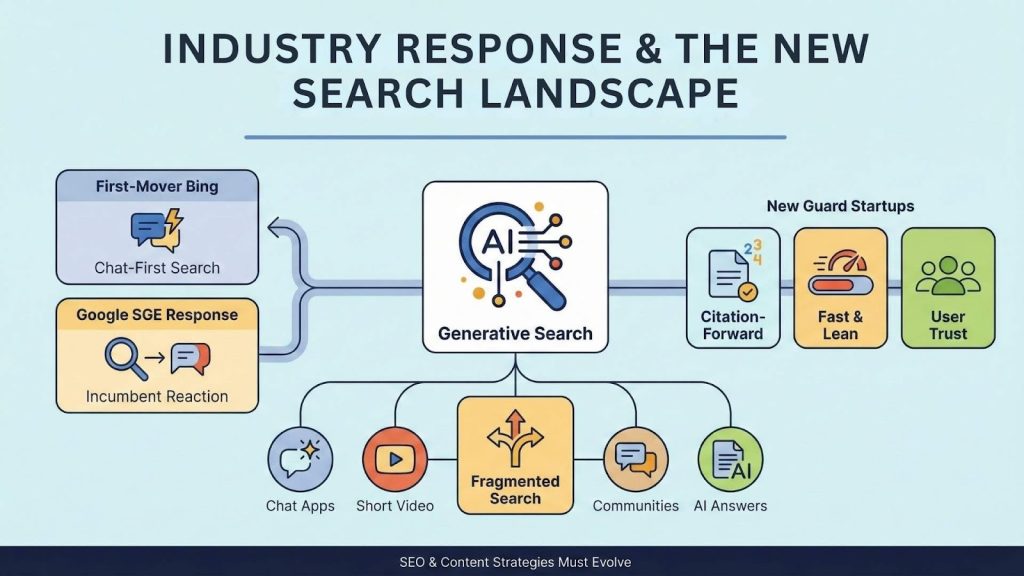

Industry Response and Competitive Landscape

Microsoft and Bing’s First-Mover Advantage

Microsoft was first out of the gate. By integrating GPT-4 into Bing in February 2023, it rebranded search as a chat-first interface. While usage didn’t explode as some expected, it forced Google to respond quickly and publicly.

Bing Chat (now Copilot in Bing) includes footnoted sources, contextual conversation, and integrations with Edge and Office products. Microsoft has emphasized productivity and research use cases, while Google has aimed for mainstream consumer search.

That strategic divergence is still playing out. But in terms of mindshare, Microsoft’s move legitimized generative search before Google could control the narrative.

Perplexity, You.com, and the New Guard

Startups like Perplexity.ai, You.com, and Andi offer cleaner, faster, and arguably more transparent generative search experiences. Perplexity in particular has gained traction among technical users, offering footnote-level sourcing, voice input, and fast performance.

These players don’t have the scale or infrastructure of Google, but they don’t carry its legacy burden either. They can experiment freely with interface design, tone, and monetization. And because they’re citation-forward, they’ve built trust with users who want quick synthesis without hallucinated fluff.

I don’t think these companies threaten Google in terms of volume, but they do create cultural pressure. They raise the bar for what generative search can feel like, and they show that users will reward quality even if it comes from smaller platforms.

Ecosystem Impacts and the Fragmentation of Search

The rise of generative search is splintering the search ecosystem. More users now start queries in ChatGPT, TikTok, Reddit, or Perplexity, depending on context. Google is still the front door to the web, but not for everything, and not for everyone.

SGE is Google’s bet to keep users in the ecosystem by offering better answers, faster. But this shift means that SEO, content strategy, and digital marketing will have to evolve. Instead of optimizing for keywords and snippets, creators must now think about how their content trains or feeds into AI systems.

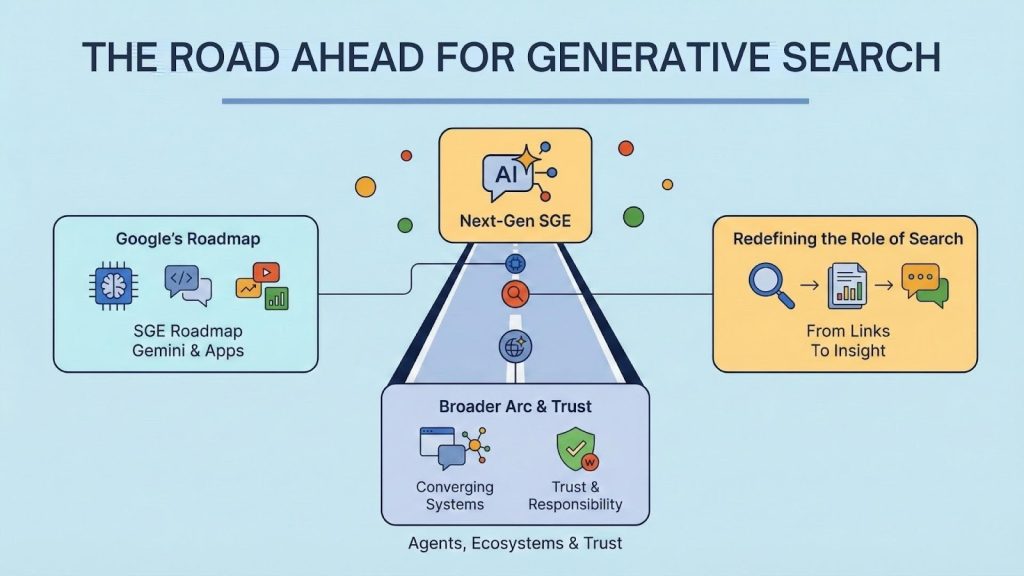

The Road Ahead: Future of SGE and Generative Search

Google’s Roadmap: Where SGE Is Headed

Google hasn’t been coy about its ambitions for SGE. In fact, its public statements, technical papers, and Search Labs iterations all point to a clear trajectory: make SGE the default experience for global search, and evolve it into a full-stack AI assistant embedded across Google’s ecosystem.

Here’s what I expect to see next:

- Gemini 1.5 and 2.0 models driving SGE with deeper multi-turn reasoning, code understanding, and longer context windows. This will let users ask more complex, layered questions and get coherent responses without losing the thread.

- Visual and interactive responses as standard. Instead of just summaries, expect live tables, charts, timelines, diagrams, and even embedded tools (like calculators or simulations) generated on the fly.

- Voice-first and multimodal inputs across mobile, desktop, and ambient devices. Imagine asking a visual question by snapping a photo and asking, “What’s wrong with this leaf?” or showing a chart and asking for anomalies.

- Deeper integration with Gmail, Docs, Calendar, and Chrome. Search will become more contextual. You’ll be able to search “summarize my unread emails from the design team” or “what documents relate to the deal we’re pitching Friday?” and SGE will pull it all together, securely and privately.

- Paid tiers and enterprise access. I anticipate Google will introduce Gemini Pro/Ultra tiers integrated with SGE, giving power users enhanced capabilities and businesses a more secure, auditable AI search experience.

Redefining the Role of Search

SGE isn’t just a product; it’s a redefinition of what search engines are for. Historically, search answered the question: “Where can I find this information?” Now, it answers: “What do I need to know?” That shift changes everything.

Information retrieval becomes information synthesis. Content discovery becomes content acceleration. And user agency moves from navigation to conversation.

In that sense, SGE could transform not only the economics of the web, but also how knowledge work happens. For example:

- Students use it to build learning scaffolds, not just look up definitions.

- Developers use it to debug across forums and docs without opening ten tabs.

- Consumers use it to plan, compare, and decide without being manipulated by ad SEO tricks.

The search bar becomes a thinking partner, not just a gateway.

The Broader Arc of Generative Search

SGE is part of a much larger trend: the convergence of language models, search engines, and personal agents. Whether it’s Perplexity, ChatGPT with web browsing, or Arc’s AI-powered browser, users are moving toward systems that think before they link.

What sets Google apart, for now, is its scale, data freshness, and ecosystem reach. But trust can be lost quickly. If SGE falters in accuracy, transparency, or fairness, users have more options than ever.

That’s why Google’s emphasis on responsible development, from AI Principles to watermarking, from safety evaluations to third-party audits, isn’t just PR. It’s existential. If it wants to remain the world’s information backbone, it must earn that role all over again, this time with a generative twist.

Final Thoughts

As someone who’s tracked search and AI for years, I view SGE as the most significant transformation in Google Search since Universal Search in 2007. It doesn’t just shift the UX, it rewrites the assumptions behind how we access and interpret information.

This isn’t without cost. The open web will suffer if generative models consume without being rewarded. Publishers and educators will need new models. Legal frameworks will have to adapt. And users will need to relearn how to question what’s given to them as “the answer.”

But if done right, if SGE evolves with integrity, transparency, and a healthy dose of humility, it could usher in a new era of human-AI information collaboration. That’s not science fiction. It’s already rolling out in your search bar.

Frequently Asked Questions (FAQ)

Does Google allow publishers to opt out of SGE while remaining in organic search?

Currently, no granular opt-out exists. Publishers cannot exclude their content from SGE summaries without also removing themselves from Google Search entirely. This limitation is a central point of ongoing regulatory and industry debate.

Is SGE using website content to train Google’s foundation models?

Google distinguishes between using content for real-time answer generation and using it for model training. SGE responses are generated by retrieving indexed content, but Google has not fully disclosed how web data contributes to long-term model improvements, which remains an open concern among publishers.

Does SGE change how Google evaluates E-E-A-T signals?

SGE does not replace E-E-A-T, but it likely amplifies its importance. Source selection for AI Overviews appears to favor authoritative, well-structured, and consistently cited content, rather than purely keyword-optimized pages.

How does SGE handle conflicting information across sources?

When reputable sources disagree, SGE may summarize consensus viewpoints or avoid generating an overview entirely. In some cases, it presents multiple perspectives, but it does not always label them explicitly as disagreements.

Will AI Overviews replace featured snippets permanently?

Featured snippets are not fully deprecated, but AI Overviews increasingly supersede them for complex or multi-step queries. Over time, featured snippets may be reserved for simple, factual lookups where synthesis is unnecessary.

Is SGE personalized based on user history or behavior?

As of now, SGE responses are largely non-personalized. Google has been cautious about personalization in generative answers due to privacy, bias, and fairness risks, though limited contextual personalization may emerge in the future.

How does SGE affect long-tail keyword strategy?

SGE still rewards targeting high-value keywords, but it puts more weight on comprehensive coverage and topic authority. Pages that answer clusters of related questions are more likely to be surfaced or cited.

Can SGE be influenced by structured data or schema markup?

Schema does not directly control SGE output, but structured data improves content clarity and machine readability, which likely increases the probability of accurate citation or inclusion in AI Overviews.
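As a minimal sketch of what such structured data can look like, the Python snippet below builds schema.org FAQPage markup as JSON-LD and wraps it in a script tag for embedding in a page. The helper names (build_faq_jsonld, to_script_tag) and the sample question are hypothetical, chosen only for illustration. Nothing here guarantees citation or inclusion in AI Overviews; it only shows the machine-readable format.

```python
import json


def build_faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def to_script_tag(data):
    """Serialize the object as a JSON-LD script tag for a page's HTML."""
    # Escape "</" so answer text can never close the script element early.
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical sample content, used only to illustrate the output shape.
faq = build_faq_jsonld([
    (
        "Can structured data influence AI Overviews?",
        "Schema does not directly control the output, but it improves machine readability.",
    ),
])
print(to_script_tag(faq))
```

Generating the markup from the same question-and-answer pairs that render the visible page keeps the structured data and the on-page text in sync, which matters because markup that contradicts the visible content undermines the clarity it is meant to provide.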

Does SGE have implications for brand search and reputation management?

Yes. Since SGE synthesizes information across sources, brand narratives can be shaped by third-party content more than before. Maintaining consistent, authoritative mentions across the web becomes increasingly critical.

Could Google roll back or significantly limit SGE in the future?

A full rollback is unlikely. However, Google may selectively scale back SGE for high-risk query categories, regulatory environments, or where user trust metrics decline. SGE’s scope will continue to evolve rather than remain static.

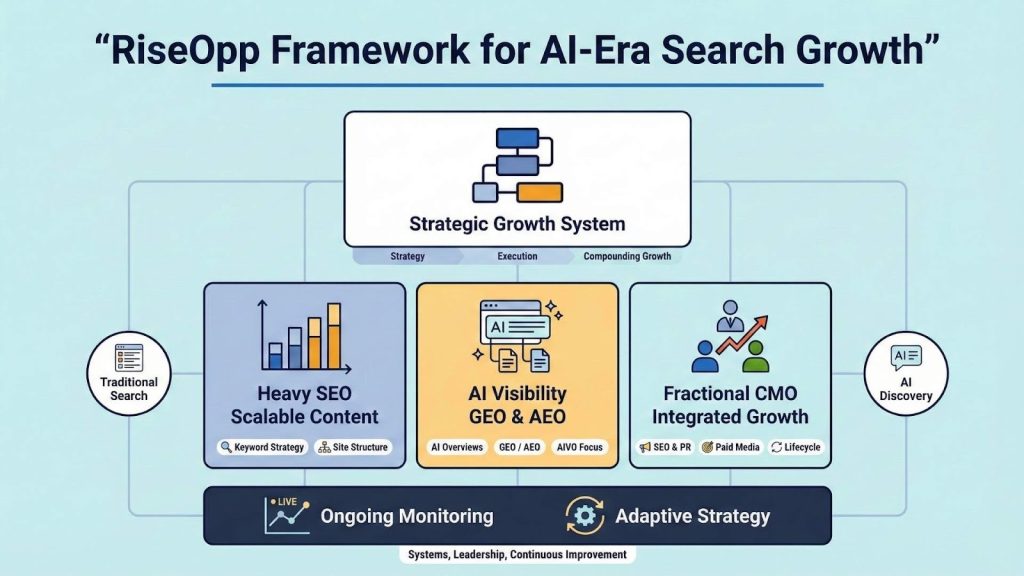

How RiseOpp Helps Brands Win in Search Generative Experiences

A content marketing strategy is only as strong as the system behind it. As search evolves toward AI-generated answers and summaries, visibility, execution, and adaptability matter more than ever.

At RiseOpp, this is exactly where we focus.

We help companies translate content strategy into durable, compounding growth. We combine Fractional CMO leadership with our proprietary Heavy SEO methodology to build content ecosystems that perform across both traditional search and emerging AI-driven discovery experiences.

Our work typically includes:

- SEO-led content and keyword strategy designed to scale across thousands of queries and buyer journeys

- Technical and structural optimization to ensure content is crawlable, indexable, and eligible for AI Overviews

- AI visibility optimization, including Generative Engine Optimization (GEO) and Answer Engine Optimization (AEO), to support answer-first search environments

- Ongoing monitoring and competitive analysis to adapt as algorithms and user behavior change

For teams that need senior-level leadership to connect strategy to execution, our Fractional CMO service provides experienced guidance across SEO, GEO, PR, paid media, and lifecycle marketing. For organizations ready to scale their operations, our Heavy SEO framework and SEO content marketing services provide the infrastructure needed to compete long-term.

And for brands navigating the shift from traditional search to AI-mediated discovery, our AI-focused capabilities (AI Visibility Optimization, Generative Engine Optimization, and Answer Engine Optimization) help ensure your content remains visible as the rules of search continue to be rewritten.

Content marketing no longer ends at publishing. It requires leadership, systems, and continuous adaptation.

If this guide has you rethinking how your content strategy should compete in today’s search environment and tomorrow’s, the next step is building a strategy designed for both scale and AI-era relevance.

Explore how we can help, or start a conversation with our team to turn strategy into measurable growth.
