- Machine learning in SEO transforms ranking from rule-based optimization to adaptive, data-driven systems interpreting intent, semantics, and behavior.

- Predictive and reinforcement learning models enable automated optimization, anomaly detection, and real-time ranking adaptation across technical and content workflows.

- Integrating AI-driven SEO with marketing automation and marketing-focused machine learning creates closed-loop systems that forecast trends, personalize experiences, and improve cross-channel ROI.

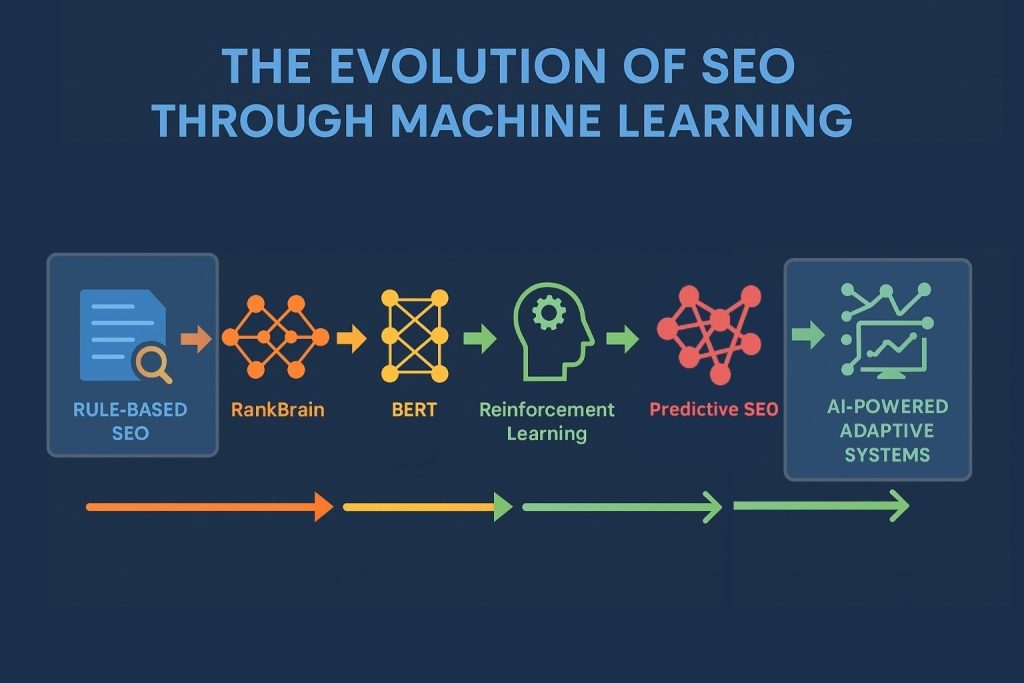

Search engine optimization (SEO) has evolved into a discipline deeply intertwined with data science. The emergence of artificial intelligence and machine learning in SEO has transformed what was once a rule-based process into a sophisticated, adaptive system guided by continuous learning and predictive modeling. Search algorithms now depend on advanced computational frameworks capable of interpreting user behavior, contextual meaning, and intent far beyond simple keyword associations.

Machine learning in SEO represents the natural evolution of how search engines and optimization strategies adapt to the vast flow of user-generated data. As algorithms learn and evolve, they improve their ability to deliver contextually accurate results, reward relevant content, and penalize manipulation. This transformation has forced SEO professionals to transition from mechanical tactics to analytical models, leveraging data-driven insights rather than guesswork.

Search engines are no longer static systems bound by rigid rules; they are self-optimizing ecosystems where learning models evolve through billions of interactions. For professionals seeking better rankings, understanding how these intelligent algorithms work provides practical guidance for aligning with evolving AI-driven standards. Mastery requires a technical grasp of these concepts and the ability to translate data interpretation into actionable strategies that balance analytical precision with creative execution.

The Algorithmic Evolution: From Static Rules to Learning Systems

From PageRank to Contextual Intelligence

In the early stages of SEO, ranking success was largely determined by keyword frequency, meta tag optimization, and backlink structures. PageRank, Google’s foundational model, measured the importance of a page based on link quantity and authority. This static, rule-based structure provided predictability but lacked the sophistication to interpret intent or content quality.

Machine learning revolutionized this paradigm. The launch of RankBrain in 2015 marked a turning point, allowing Google to process ambiguous queries and infer meaning using vector-based semantics rather than direct keyword matching. The result was a more fluid and dynamic search landscape where algorithmic learning, rather than predefined formulas, guided search results.

User interaction with search results has since evolved dramatically. A 2025 study by Search Engine Land analyzing more than 20,000 queries found that when an AI Overview appears in Google’s results, the top organic link receives significantly fewer clicks than usual, and in many cases the second organic link now outperforms the first in click-through rate (CTR). This shift shows how machine-learning-powered interfaces are reshaping user behavior and redefining what “ranking first” means in the modern SERP.

Deep Learning SEO and Semantic Understanding

Deep learning brought neural networks capable of understanding natural language through contextual embeddings into search. With models like BERT (Bidirectional Encoder Representations from Transformers) and MUM (Multitask Unified Model), search engines moved closer to interpreting what users mean, not just what they type.

These advancements allowed algorithms to process linguistic nuances such as sentiment, topic relationships, and contextual relevance. Instead of ranking a page purely on keyword repetition, the systems began rewarding semantic alignment and intent fulfillment. This evolution introduced a new layer of ranking considerations, including contextual depth, entity relationships, and behavioral feedback loops. It also increased the importance of data interpretation and intelligent analysis within SEO workflows.

The Rise of Intelligent Algorithm SEO Concepts

Modern search ranking algorithms behave adaptively. They evaluate content quality, entity relationships, and behavioral signals such as CTR and dwell time. These intelligent algorithm SEO concepts shift optimization away from manipulating visibility and toward aligning content with genuine user needs.

According to Exploding Topics, more than 86% of SEO professionals report having integrated AI tools or platforms into their strategies, signaling that machine-learning-driven optimization is already standard practice.

At the same time, user behavior and search engine mechanics are changing. In the U.S., more than 58% of Google searches now end without a click through to a website, which suggests that visibility alone is no longer sufficient.

For SEO professionals, this means mastering not just traditional SEO principles but also machine-learning search logic, data analytics, and the interface between language and algorithmic reasoning.

Advanced Machine Learning Architectures Driving SEO Evolution

Transformers and Semantic Understanding

Modern search engines rely on transformer-based architectures such as BERT, MUM, and Gemini to interpret search intent and context. AI adoption is broad outside search as well. According to McKinsey & Company’s 2025 State of AI report, 78% of organizations use AI in at least one business function, a sign that machine-learning-driven workflows, including SEO, have become mainstream.

These models shifted SEO from keyword matching to true semantic understanding. Instead of focusing on single terms, they analyze context and relationships between words, pushing SEO teams to optimize for meaning and topical relevance.

From a technical standpoint, transformer models use self-attention mechanisms that evaluate the importance of each word relative to the others in a sequence. When Google interprets a query like “best running shoes for overpronation,” the model identifies user intent, evaluates context (running style, condition, product reviews), and prioritizes semantically relevant content. Optimizing for such systems typically involves:

- Building content clusters around entity relationships rather than keyword density.

- Structuring internal linking to reinforce contextual hierarchies.

- Training or fine-tuning smaller NLP models internally to identify semantic gaps within your own site.
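To make the self-attention idea concrete, here is a minimal pure-Python sketch of scaled dot-product attention over a few toy word vectors. It makes two simplifying assumptions: the query, key, and value projections are the identity, and there is only one attention head. Production transformers such as BERT learn separate projection matrices and use many heads. Treat this only as an illustration of how each token's representation becomes a similarity-weighted mix of the others.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with identity Q/K/V projections.

    Each output vector is a weighted average of every input vector, with
    weights softmax(q . k / sqrt(d)) computed from dot-product similarity.
    """
    d = len(vectors[0])
    outputs, weights = [], []
    for q in vectors:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in vectors]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * v[i] for wj, v in zip(w, vectors)) for i in range(d)])
    return outputs, weights

# Toy 3-dimensional "embeddings" for three query tokens (values are invented).
tokens = ["running", "shoes", "overpronation"]
embeddings = [[1.0, 0.2, 0.0], [0.9, 0.3, 0.1], [0.1, 0.0, 1.0]]
_, attn = self_attention(embeddings)
for token, row in zip(tokens, attn):
    print(token, [round(x, 2) for x in row])
```

Here “running” puts more weight on “shoes” than on “overpronation” because their toy vectors are more similar. In a trained model, those similarities come from learned parameters rather than hand-picked numbers.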

Reinforcement Learning and Ranking Adaptation

Reinforcement learning (RL) offers a useful way to think about how search algorithms improve rankings through feedback. When users click, dwell, or bounce, those signals can help train ranking models to reward relevance and satisfaction. Google has not published the details, but RankBrain and subsequent systems are generally understood to reweight ranking factors dynamically in a similar way.

In the SEO context, RL can be applied to simulate user interaction patterns. For example:

- Predicting which snippets drive the highest click-through rate (CTR).

- Identifying which structural changes to metadata improve engagement.

- Optimizing internal linking or CTA placement through multi-armed bandit experimentation.

Agencies and advanced teams often build internal RL frameworks to test hypothesis-driven changes at scale, reducing dependency on long test cycles.
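A multi-armed bandit experiment can be sketched with Thompson sampling. The example below is a simulation under stated assumptions: the CTA placement names and their “true” click rates are invented. It also assumes each page view is served exactly one variant. That is only possible for on-site elements you control; you cannot serve different titles to individual searchers on Google's results page.

```python
import random

def thompson_pick(stats, rng):
    """Choose the variant whose sampled click rate is highest.

    Each variant's rate is drawn from Beta(clicks + 1, misses + 1),
    i.e. a uniform prior updated with the observed outcomes.
    """
    return max(stats, key=lambda v: rng.betavariate(stats[v][0] + 1, stats[v][1] + 1))

def run_bandit(true_rates, visits, seed=0):
    """Simulate `visits` page views, serving one variant per view."""
    rng = random.Random(seed)
    stats = {v: [0, 0] for v in true_rates}  # variant -> [clicks, misses]
    for _ in range(visits):
        variant = thompson_pick(stats, rng)
        if rng.random() < true_rates[variant]:
            stats[variant][0] += 1
        else:
            stats[variant][1] += 1
    return stats

# Hypothetical CTA placements with made-up "true" click rates for the simulation.
true_rates = {"cta_top": 0.02, "cta_inline": 0.06, "cta_footer": 0.04}
stats = run_bandit(true_rates, visits=20000, seed=42)
for variant, (clicks, misses) in stats.items():
    print(f"{variant}: served {clicks + misses} times, {clicks} clicks")
```

A fixed 50/50 A/B split keeps sending traffic to the weaker variant until the test ends. The bandit instead shifts traffic toward the better-performing placement while the test is still running, which is one way to shorten the long test cycles mentioned above.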

Graph Neural Networks and Entity Relationships

Graph Neural Networks (GNNs) are an emerging class of ML models with strong potential for SEO. They model data as nodes (entities) and edges (relationships), closely mirroring how Google’s Knowledge Graph structures information.

GNNs enable:

- Entity extraction and connection mapping: identifying how concepts within your content relate semantically.

- Authority propagation: assessing how link equity flows between entities.

- Topic centrality detection: discovering which subtopics act as semantic anchors within your domain.

Integrating GNN-based analysis into SEO enables more precise topical authority mapping and link graph modeling than traditional keyword or backlink analysis.
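The core operation of a GNN is message passing: each node updates its representation from its neighbors. The sketch below implements one simplified graph-convolution layer in plain Python. It averages each node with its neighbors (mean aggregation, one of several common normalization choices), then applies a linear projection and ReLU. The entity graph, feature values, and identity weight matrix are hypothetical. In a real GNN the weights are learned during training and several layers are stacked.

```python
def gcn_layer(adjacency, features, weight):
    """One graph-convolution step: average each node with its neighbors,
    project with `weight`, then apply ReLU.

    adjacency: n x n 0/1 matrix (symmetric for an undirected entity graph)
    features:  n x f node feature matrix
    weight:    f x h projection matrix (learned during training in a real GNN)
    """
    n, f = len(features), len(features[0])
    out = []
    for i in range(n):
        neighborhood = [i] + [j for j in range(n) if adjacency[i][j] and j != i]
        mean = [sum(features[j][k] for j in neighborhood) / len(neighborhood) for k in range(f)]
        projected = [sum(mean[k] * weight[k][m] for k in range(f)) for m in range(len(weight[0]))]
        out.append([max(0.0, x) for x in projected])
    return out

# Hypothetical entity graph: nodes 0-2 are related running topics, node 3 is unrelated.
entities = ["running shoes", "overpronation", "stability shoes", "recipes"]
adjacency = [
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
]
# Invented per-entity features: [authority score, content coverage].
features = [[0.9, 0.8], [0.1, 0.3], [0.2, 0.6], [0.5, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # placeholder for trained weights

for name, vec in zip(entities, gcn_layer(adjacency, features, identity)):
    print(name, [round(x, 2) for x in vec])
```

After one layer, “overpronation” inherits part of the authority of its better-established neighbors, rising from 0.1 to 0.4. The unconnected “recipes” node keeps its own features. This is a small-scale version of the authority propagation described above.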

Generative Models and Synthetic Data in SEO

Generative Adversarial Networks (GANs) and large generative models allow for the creation of synthetic datasets used to train or validate SEO algorithms. For instance, synthetic SERP data can simulate ranking fluctuations under different on-page configurations, enabling predictive optimization before deployment.

These models also assist in:

- Generating synthetic long-tail keyword queries.

- Creating data-augmented content templates to train intent classification systems.

- Testing structured data and schema changes in controlled, simulated environments.

Such approaches demand rigorous model validation and version control to ensure they enhance, rather than distort, actual SEO performance outcomes.

Machine Learning as the Core of Modern SEO Architecture

How Search Engines Learn

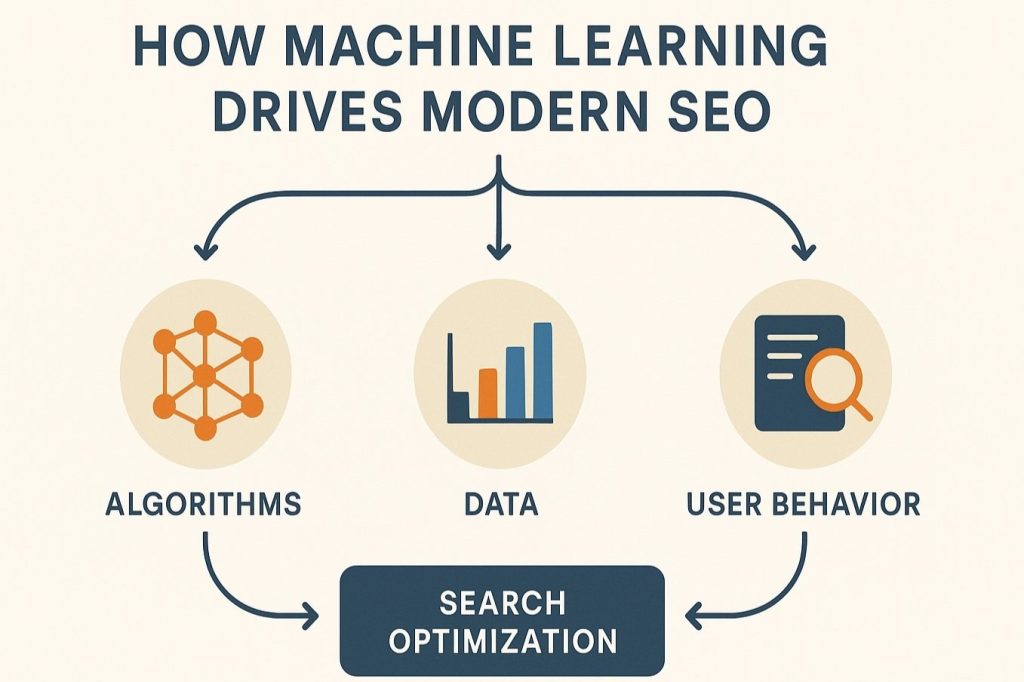

Search engines rely heavily on supervised, unsupervised, and reinforcement learning to improve ranking accuracy. Each learning model plays a specific role:

- Supervised Learning: Used for ranking signals such as click prediction and content quality scoring. Algorithms train on labeled data to learn patterns of relevance and engagement.

- Unsupervised Learning: Deployed in clustering and categorizing web content, identifying thematic groupings without predefined labels.

- Reinforcement Learning: Focused on improving decision-making through continuous feedback loops. The system rewards outcomes that improve user satisfaction and penalizes poor results.

This learning process transforms search engines into evolving ecosystems capable of self-improvement. Each query, click, and interaction contributes to a training cycle that refines future search outcomes.

Search Engine Optimization Machine Learning in Action

Machine learning in search is not just theoretical: it shapes how results are ranked and adjusted based on user feedback. Engagement data such as time on page, pogo-sticking behavior, and conversion patterns can act as signals that teach models what constitutes a valuable result. This continuous feedback loop allows algorithms to fine-tune rankings at a scale and precision that manual optimization could not achieve.

The Machine Learning Ranking Factor

Machine learning ranking factors encompass a combination of traditional and behavioral metrics interpreted through predictive modeling. These include:

- Topical relevance and entity relationships.

- User engagement and satisfaction metrics.

- Semantic and contextual coherence.

- Site trust and authority as measured through model confidence scores.

Understanding these factors allows SEO professionals to anticipate algorithmic preferences, rather than reacting to them. Success now depends on aligning strategy with the logic of adaptive models.

Data-Driven SEO Strategy Using Machine Learning

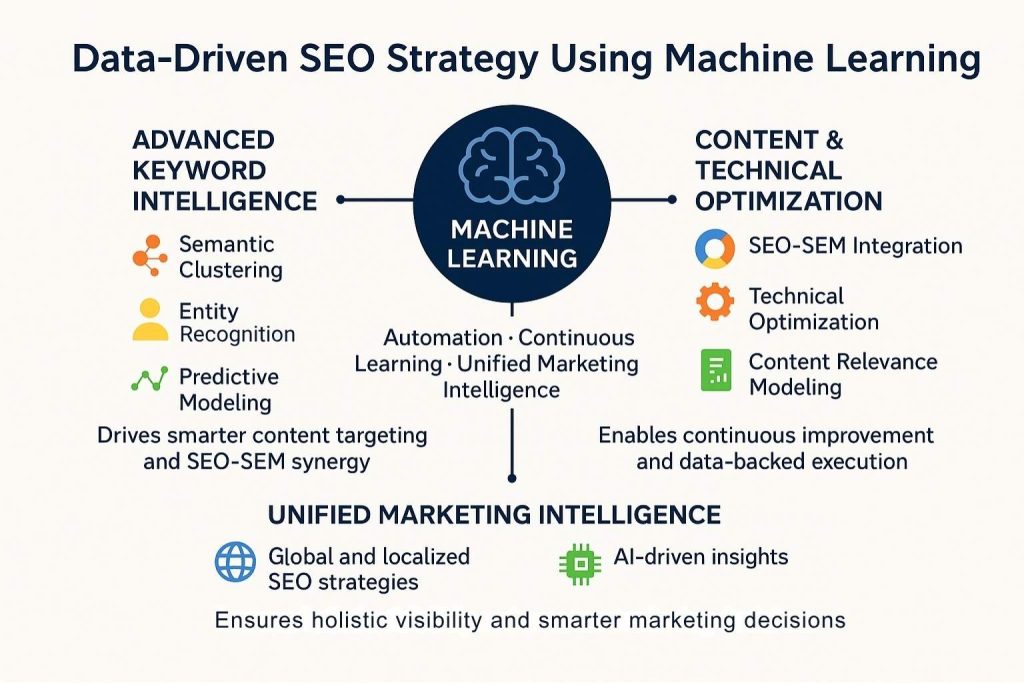

Advanced Keyword Intelligence

Keyword research has moved beyond static lists and search volume metrics. With machine learning, keyword optimization becomes a process of discovering semantic networks and emerging intent clusters. Machine learning algorithms can analyze large corpora of search data to identify latent patterns and related topics that humans might overlook.

This approach enables SEO professionals to:

- Group related queries through semantic clustering.

- Identify high-value keywords that connect to specific user intents.

- Forecast search trends based on predictive modeling.

The result is a keyword strategy that evolves with market behavior, ensuring long-term relevance and adaptability.
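Semantic clustering can be illustrated with a deliberately simple approach. Each query becomes a bag of words, cosine similarity measures how alike two queries are, and queries above a threshold are grouped greedily. This is an assumption-laden stand-in: production systems typically use embedding models rather than word overlap, and the 0.5 threshold is an arbitrary starting point to tune.

```python
import math
import re
from collections import Counter

def vectorize(text):
    """Bag-of-words term counts (a stand-in for learned embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def cluster_queries(queries, threshold=0.5):
    """Greedy single-pass clustering: each query joins the first cluster whose
    seed query is at least `threshold` similar, otherwise it starts a new cluster."""
    clusters = []  # list of (seed_vector, member_queries)
    for query in queries:
        vec = vectorize(query)
        for seed, members in clusters:
            if cosine(vec, seed) >= threshold:
                members.append(query)
                break
        else:
            clusters.append((vec, [query]))
    return [members for _, members in clusters]

queries = [
    "best running shoes for overpronation",
    "running shoes overpronation women",
    "how to fix overpronation",
    "marathon training plan beginner",
    "beginner marathon training schedule",
]
for group in cluster_queries(queries):
    print(group)
```

With these sample queries the function returns three groups. The two overpronation shoe queries form one group, the overpronation fix query stands alone, and the two marathon training queries form the third.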

SEO Content Analysis Using Machine Learning

Content analysis using machine learning and NLP techniques has become far more sophisticated. Instead of checking surface-level keyword use alone, these tools examine semantic depth, topical completeness, sentiment, structure, and alignment with user intent.

Key aspects of ML-driven content analysis include:

- Entity Recognition: Identifying key concepts, people, or places that establish topical authority.

- Contextual Alignment: Evaluating how well content aligns with query intent.

- Readability and Engagement: Predicting user interaction based on linguistic features.

- Competitive Benchmarking: Comparing content quality and topical density against leading results.

By leveraging these techniques, SEO professionals can identify content gaps, strengthen semantic relationships, and ensure that content resonates both with users and algorithms.
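A basic version of competitive benchmarking is to find terms that most top-ranking pages use but your page does not. The sketch below counts, for each term, how many competitor pages contain it (document frequency). It relies on simple word tokens, a hypothetical stopword list, and a 50% coverage cutoff. Real tools usually work with recognized entities or embeddings instead of raw words.

```python
import re
from collections import Counter

# Hypothetical minimal stopword list; real pipelines use a fuller one.
STOPWORDS = {"the", "a", "an", "and", "or", "for", "to", "of", "in", "is", "with", "your"}

def terms(text):
    """Lowercased word set, minus stopwords and very short tokens."""
    return {t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS and len(t) > 2}

def coverage_gaps(our_page, competitor_pages, min_share=0.5):
    """Terms used by at least `min_share` of competitor pages but absent from ours."""
    doc_freq = Counter()
    for page in competitor_pages:
        doc_freq.update(terms(page))
    ours = terms(our_page)
    cutoff = min_share * len(competitor_pages)
    return sorted(t for t, df in doc_freq.items() if df >= cutoff and t not in ours)

our_page = "Our guide covers running shoes and cushioning for daily training."
competitors = [
    "Stability shoes help overpronation by adding arch support and cushioning.",
    "For overpronation, look for stability features and firm arch support.",
    "Gait analysis reveals overpronation; stability shoes are the usual fix.",
]
print(coverage_gaps(our_page, competitors))
```

Here the gap list is `['arch', 'overpronation', 'stability', 'support']`, which points to topics the sample page does not yet cover.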

Technical Optimization Through ML SEO

Technical SEO now depends heavily on data modeling and automation. Machine learning can detect anomalies and inefficiencies that would be nearly impossible to identify manually.

Applications include:

- Predictive crawl budget management and indexing prioritization.

- Log file analysis to detect crawler behavior and site performance issues.

- Reinforcement learning for internal link optimization and site structure enhancement.

ML SEO systems can also automate the identification of duplicate content, optimize meta structures, and predict which pages are most likely to gain ranking improvements. These methods reduce waste and improve scalability, freeing experts to focus on strategic decisions. In practice, ML SEO tools take over repetitive tasks, from meta-tag generation to internal linking adjustments, helping sites stay aligned as search engines' learning models change.
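Near-duplicate detection is often built on word shingles and Jaccard similarity. The sketch below compares every pair of pages directly, which is fine for small samples. At site scale, techniques such as MinHash with locality-sensitive hashing avoid the quadratic number of comparisons. The URLs, texts, and 0.6 threshold are illustrative assumptions.

```python
import re
from itertools import combinations

def shingles(text, k=3):
    """Set of k-word shingles (overlapping word sequences) from a page's text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}

def jaccard(a, b):
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def near_duplicates(pages, threshold=0.6):
    """Return (url, url, similarity) for page pairs at or above the threshold."""
    sigs = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for u, v in combinations(sorted(sigs), 2):
        sim = jaccard(sigs[u], sigs[v])
        if sim >= threshold:
            pairs.append((u, v, round(sim, 2)))
    return pairs

pages = {
    "/shoes/blue": "Lightweight stability running shoe with arch support. Available in blue.",
    "/shoes/red": "Lightweight stability running shoe with arch support. Available in red.",
    "/blog/marathon": "Ten tips for your first marathon training block.",
}
print(near_duplicates(pages))
```

The two product variants differ only by color. They share 7 of 9 distinct three-word shingles (Jaccard ≈ 0.78) and are flagged; the blog post is not.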

Machine Learning in Search Engine Marketing Integration

Machine learning in search engine marketing (SEM) no longer merely complements SEO; it unifies paid and organic optimization into a single intelligent system. According to SEO.com (2025), the global AI in marketing industry is valued at approximately US $47.3 billion and is projected to exceed US $107 billion by 2028, reflecting a compound annual growth rate of nearly 36 percent. This surge underscores how machine-learning-driven automation has shifted from experimentation to necessity.

By integrating shared learning models across SEO and SEM, organizations can synchronize keyword bidding, content optimization, and audience targeting within the same predictive framework, improving efficiency, performance, and return on investment across all digital channels.

This unified approach supports:

- Predictive conversion modeling based on shared behavioral data.

- Dynamic content alignment between paid and organic channels.

- Enhanced attribution analysis for better marketing ROI assessment.

When SEO and SEM data feed into the same learning model, both channels benefit from mutual reinforcement and better decision-making accuracy.

Building a Machine Learning-Enabled SEO Stack

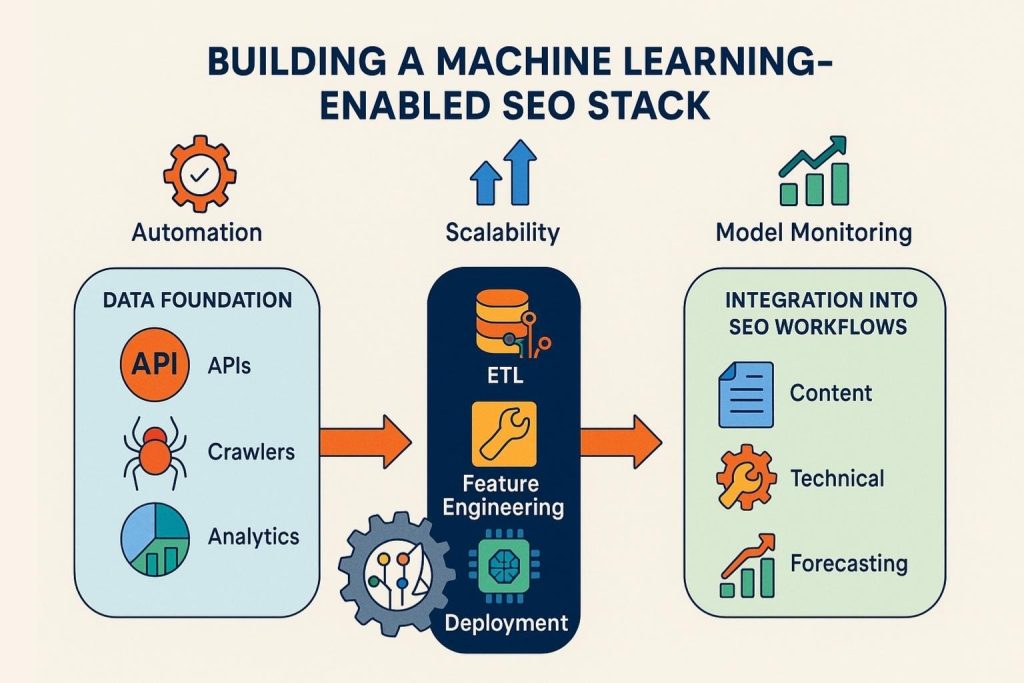

Establishing the Data Foundation

A successful machine learning SEO strategy begins with the right data infrastructure. Every machine learning initiative relies on three critical inputs: clean data, structured features, and feedback loops.

For SEO, this typically involves aggregating data from:

- Search Console and Analytics APIs for impressions, clicks, CTR, and ranking distributions.

- Crawling data from platforms like Screaming Frog, Sitebulb, or custom Python crawlers.

- Third-party metrics such as backlink data, keyword trends, or SERP volatility indexes.

- Content datasets including word embeddings, entity metadata, and topic relevance scores.

These datasets must be unified into a warehouse or data lake such as BigQuery, Snowflake, or AWS S3. Proper data normalization and versioning are essential, since machine learning models depend on feature stability across training and deployment phases.

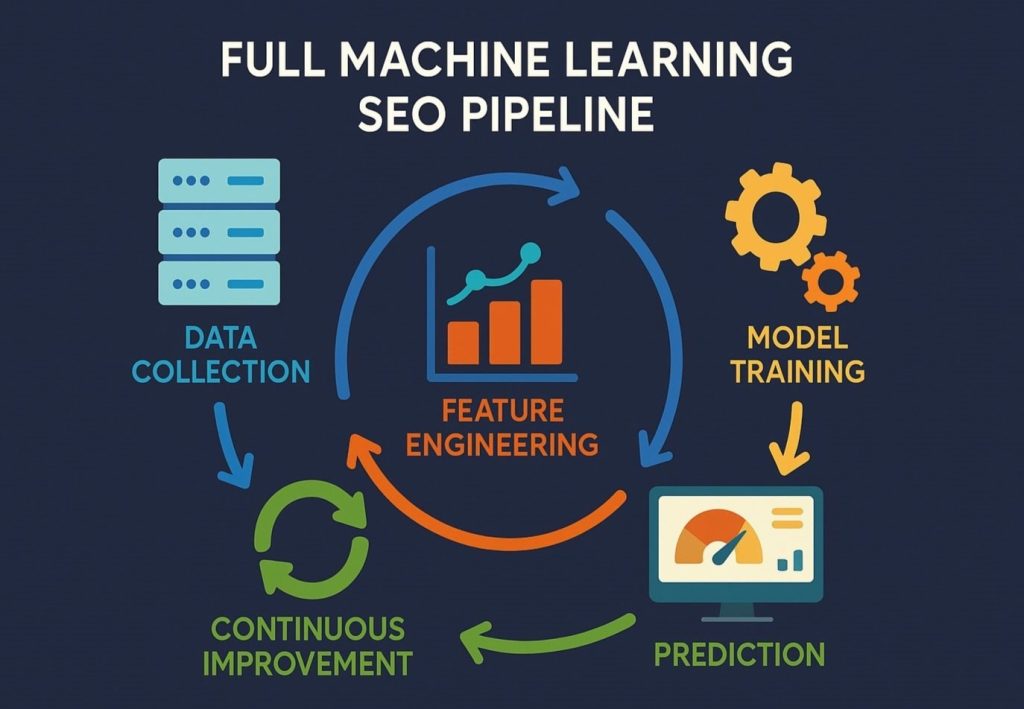

Designing the Machine Learning Pipeline

Once the data foundation is established, the next layer is automation through a scalable ML pipeline. A production-grade SEO ML pipeline generally includes:

- Data ingestion and transformation: cleaning and merging datasets using ETL workflows in Airflow or dbt.

- Feature engineering: creating new input variables such as:

  - Click decay rates and CTR-normalized rankings.

  - Weighted topic density per URL.

  - Intent scores derived from semantic embeddings.

- Model training and testing: using frameworks like Scikit-learn, TensorFlow, or PyTorch to train supervised models for classification (intent detection) or regression (ranking prediction), and unsupervised models for clustering (topic grouping).

- Deployment and feedback integration: deploying trained models via APIs or cloud endpoints (Vertex AI, SageMaker) and capturing live search performance data to refine models.

This iterative cycle transforms traditional SEO into a data-driven optimization system rather than a one-off project.
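As an example of the feature engineering step, the sketch below derives a position-adjusted CTR. For each row, it divides the row's CTR by the site's average CTR at the same rounded position. The field names mirror Search Console's clicks, impressions, and position metrics. The row format, and this exact definition of a “CTR-normalized ranking,” are assumptions made for illustration.

```python
from collections import defaultdict

def ctr_features(rows):
    """Add CTR and CTR relative to the site-wide average CTR at the same rounded position.

    rows: dicts with 'page', 'clicks', 'impressions', and 'position' keys
    (a hypothetical export format modeled on Search Console metrics).
    """
    by_position = defaultdict(lambda: [0, 0])  # rounded position -> [clicks, impressions]
    for r in rows:
        bucket = by_position[round(r["position"])]
        bucket[0] += r["clicks"]
        bucket[1] += r["impressions"]

    features = []
    for r in rows:
        ctr = r["clicks"] / r["impressions"] if r["impressions"] else 0.0
        clicks, impressions = by_position[round(r["position"])]
        baseline = clicks / impressions if impressions else 0.0
        features.append({**r, "ctr": ctr, "ctr_vs_position": ctr / baseline if baseline else None})
    return features

rows = [
    {"page": "/a", "clicks": 50, "impressions": 1000, "position": 3.2},
    {"page": "/b", "clicks": 10, "impressions": 1000, "position": 2.8},
    {"page": "/c", "clicks": 5, "impressions": 500, "position": 8.0},
]
feats = ctr_features(rows)
for f in feats:
    print(f["page"], round(f["ctr"], 3), round(f["ctr_vs_position"], 2))
```

A value above 1 (about 1.67 for `/a`) means the page earns more clicks than is typical for its position, which can point to a strong title or snippet. A value well below 1 can flag pages worth rewriting.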

Integrating ML Models into SEO Workflows

Machine learning outputs are only as valuable as their integration into the daily SEO process. Integration can occur at multiple levels:

- Content workflows: embedding intent classification models into CMS workflows to guide writers toward optimal keyword-targeting decisions.

- Technical SEO monitoring: using anomaly detection models to alert teams to indexation or crawl rate issues before they impact rankings.

- Link evaluation: training ML models to classify inbound links by quality, authority, and topical relevance.

- Forecasting dashboards: combining model outputs with visualization tools like Looker Studio or Power BI to interpret trends and predict future movements.

By merging machine learning systems with standard SEO tooling, teams create a feedback loop between algorithmic insights and human decision-making.

Automation and Scalability

Scalability separates experimental SEO teams from those using machine learning as a strategic differentiator. With the right stack, even highly complex processes become automated and repeatable.

Examples include:

- Automated keyword clustering pipelines that continuously group new queries by semantic similarity.

- Dynamic content gap identification that flags underperforming topics.

- Automated internal linking optimization based on semantic graph modeling.

- Continuous log analysis for crawl behavior prediction.

Implementing these workflows requires coordination between SEO specialists, data engineers, and developers. In many organizations, this is where collaboration with an experienced marketing technology partner or agency becomes valuable. Agencies can provide data infrastructure, modeling expertise, and scalable execution, helping internal teams move beyond the experimental phase and into operational maturity.

Model Monitoring and Continuous Improvement

The final element of a machine learning-enabled SEO stack is continuous monitoring. Models can drift as search algorithms, user behavior, or content strategies evolve.

Effective monitoring should track:

- Model accuracy: comparing predicted vs. observed ranking shifts.

- Feature drift: identifying when key variables (e.g., CTR, impressions) behave differently over time.

- Performance variance: segmenting model effectiveness by keyword type, geography, or device.

Feedback from real-world performance should inform periodic retraining cycles. Over time, this ensures that your SEO system learns and adapts just as search engines do.
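Feature drift can be quantified with the Population Stability Index (PSI). PSI compares the distribution of a feature in a baseline period with its distribution in a recent period. The sketch below uses equal-width bins derived from the baseline, and the sample values are synthetic. A commonly quoted rule of thumb reads PSI below 0.1 as stable and above 0.25 as a significant shift, but that is a heuristic rather than a standard.

```python
import math

def psi(expected, actual, bins=5):
    """Population Stability Index between a baseline sample and a recent sample.

    Bin edges are equal-width over the baseline's range; values outside it are
    clipped into the first or last bin, and a small floor avoids log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Synthetic samples of a normalized feature; the recent window has shifted upward.
baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]
print(round(psi(baseline, baseline), 3), round(psi(baseline, recent), 3))
```

Running this per feature on a schedule, and retraining when scores cross a chosen threshold, is one way to turn the drift monitoring above into an automated trigger.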

Machine Learning-Driven Predictive SEO Forecasting

The Rationale for Predictive SEO Models

Traditional SEO reporting is reactive, focusing on explaining what already happened: traffic fluctuations, ranking losses, or visibility shifts. Machine learning enables a paradigm shift: forecasting SEO outcomes before they occur. Predictive SEO uses historical and real-time data to anticipate how algorithmic updates, content changes, and competitor activities might influence performance.

These models allow professionals to:

- Estimate the impact of content optimizations or link campaigns before launch.

- Forecast traffic growth or decline under various ranking scenarios.

- Quantify the sensitivity of rankings to specific variables such as crawl rate, Core Web Vitals, or topical depth.

Predictive SEO turns optimization into an evidence-based forecasting discipline, improving planning accuracy and resource allocation.

Key Data Inputs for Predictive Modeling

To construct effective forecasting models, the right mix of data sources and features must be incorporated. Core variables typically include:

- Historical ranking and CTR data from Google Search Console.

- Seasonality metrics, such as month-over-month query trends.

- On-page metrics: title quality scores, semantic topic density, structured data completeness.

- Off-page metrics: third-party authority metrics (e.g., Moz Domain Authority, Majestic Trust Flow) and backlink velocity.

- Engagement signals: session duration, bounce rates, and repeat visits.

- External volatility data: SERP volatility indexes, algorithm update timestamps, and competitor performance signals.

Each variable contributes to a feature set that feeds machine learning models designed to recognize performance patterns. Predictive models are becoming central to the convergence of SEO and machine learning, as adaptive systems continuously reshape ranking strategies.

Choosing the Right Predictive Models

The model type depends on the prediction objective. The following frameworks are most effective for SEO forecasting:

- Regression models (Linear, Random Forest, XGBoost): Used for predicting numeric outcomes such as traffic volume, ranking position, or CTR.

- Time series models (ARIMA, Prophet, LSTM): Best suited for forecasting long-term organic trends while accounting for seasonality and volatility.

- Classification models: Predict categorical outcomes such as “rank improvement,” “no change,” or “rank drop.”

- Ensemble models: Combine multiple algorithms for more stable performance and less overfitting to temporary fluctuations.

An advanced approach involves training recurrent neural networks (RNNs), such as LSTM networks, on ranking histories to learn temporal dependencies. These models can capture cyclical behaviors, such as seasonal traffic spikes or gradual keyword performance shifts, that simple trend reports often miss.
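Before reaching for ARIMA, Prophet, or LSTMs, a lightweight baseline is Holt's linear trend method (double exponential smoothing), which can forecast a trending series in a few lines. This is a simpler, swapped-in technique rather than one of the models listed above. It models level and trend but not seasonality, and the smoothing parameters and weekly click figures are illustrative assumptions.

```python
def holt_forecast(series, alpha=0.5, beta=0.3, horizon=3):
    """Holt's linear trend method (double exponential smoothing).

    alpha smooths the level, beta smooths the trend; returns `horizon`
    forecasts beyond the last observation.
    """
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return [level + h * trend for h in range(1, horizon + 1)]

# Hypothetical weekly organic clicks for one keyword cluster.
weekly_clicks = [1200, 1260, 1300, 1380, 1420, 1490, 1530]
print([round(x) for x in holt_forecast(weekly_clicks, horizon=4)])
```

For seasonal data, Holt-Winters (which adds a seasonal component) or the time series models listed above are better suited. A simple baseline like this is still useful for judging whether a more complex model actually adds accuracy.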

Building Predictive SEO Dashboards

The power of predictive modeling comes alive when it’s visualized in real time. Integrating model outputs into dashboards allows decision-makers to evaluate multiple potential futures for their SEO strategies.

An effective dashboard typically includes:

- Predicted vs. actual visibility curves for each major keyword cluster.

- Confidence intervals representing uncertainty bands around each forecast.

- Scenario controls that allow marketers to simulate different strategies (e.g., increasing content cadence, improving Core Web Vitals).

- Alerts for predicted anomalies such as unexpected ranking volatility or traffic loss.

By combining these elements, SEO teams move from static reporting toward dynamic optimization forecasting.

Practical Use Cases of Predictive SEO

Machine learning forecasting models can be applied across a range of practical contexts:

- Pre-launch optimization: Predict how new content will perform based on existing patterns in the same topic cluster.

- Budget planning: Forecast the ROI of link acquisition campaigns or content investments.

- Algorithm resilience testing: Simulate the potential impact of hypothetical Google updates on different ranking factors.

- Trend prediction: Identify emerging topics by forecasting shifts in keyword search volume or query clusters.

These capabilities provide a strategic edge by transforming reactive decision-making into proactive execution.

The Value of Predictive Insights for Enterprise SEO

At enterprise scale, predictive SEO becomes a strategic asset. It supports forecasting models that guide cross-functional decision-making not only within SEO teams but across content strategy, product planning, and marketing operations. Predictive insights help determine:

- Which markets or verticals will experience the most organic growth.

- Which technical issues are likely to degrade performance, before the damage occurs.

- Where incremental content or technical investments yield the greatest impact.

For organizations managing hundreds or thousands of pages, predictive machine learning can reduce wasted effort and focus initiatives where they will produce measurable gains.

The Role of Specialized Expertise

While predictive SEO models can be built internally, deploying them effectively requires expertise across data science, infrastructure, and SEO strategy. Many teams choose to collaborate with specialized partners or agencies that can operationalize these systems, connecting data pipelines, model monitoring, and actionable forecasting workflows. Collaboration ensures that technical sophistication aligns with real business outcomes.

Integration of Machine Learning with Technical SEO and Automation

Automating Crawl Analysis and Log File Insights

Crawl efficiency has become a critical concern, especially for large-scale websites with complex structures. It is not a ranking factor in itself, but wasted crawl activity can delay the discovery and indexing of important pages. Traditional log analysis can identify crawl waste or indexation issues, but machine learning takes this process several steps further by learning from historical crawl patterns.

Using supervised and unsupervised ML models, teams can:

- Detect crawl budget inefficiencies, such as low-value pages consuming disproportionate bot activity.

- Predict crawl anomalies and alert technical teams before they escalate.

- Identify indexation drop risks through anomaly detection on server response codes and crawl depth.

- Segment URLs by crawl frequency, engagement metrics, and internal link density to prioritize optimization.

ML-powered log analysis tools continuously learn from crawl frequency data and behavior, adapting to changes in site architecture or content depth. This leads to more efficient resource allocation, better crawl prioritization, and faster indexation of high-value content.
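Most ML log analysis starts with feature extraction from raw access logs. The sketch below parses lines in the common Apache/Nginx “combined” format and keeps requests whose user agent contains “Googlebot.” It then reports each site section's share of crawl activity and its non-200 responses. The log format and sample lines are assumptions. User agents can be spoofed, so production pipelines should verify Googlebot (for example, with the reverse DNS check Google documents) before trusting these counts.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format (an assumption about your server's configuration).
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_crawl_share(lines):
    """Return each site section's share of Googlebot requests and its non-200 counts."""
    sections, errors = Counter(), Counter()
    for line in lines:
        m = LOG_RE.match(line)
        if not m or "Googlebot" not in m["agent"]:
            continue  # unparseable line or not a (claimed) Googlebot request
        path = m["path"].split("?")[0]
        section = "/" + path.strip("/").split("/")[0]
        sections[section] += 1
        if m["status"] != "200":
            errors[section] += 1
    total = sum(sections.values())
    shares = {s: round(c / total, 2) for s, c in sections.items()} if total else {}
    return shares, errors

UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_lines = [
    f'66.249.66.1 - - [10/Oct/2025:13:55:36 +0000] "GET /products/shoe-1 HTTP/1.1" 200 5120 "-" "{UA}"',
    f'66.249.66.1 - - [10/Oct/2025:13:55:40 +0000] "GET /search?q=red+shoes HTTP/1.1" 200 2048 "-" "{UA}"',
    f'66.249.66.1 - - [10/Oct/2025:13:55:41 +0000] "GET /search?q=blue+shoes HTTP/1.1" 404 512 "-" "{UA}"',
    '203.0.113.5 - - [10/Oct/2025:13:56:00 +0000] "GET /products/shoe-2 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
print(googlebot_crawl_share(sample_lines))
```

In this sample, parameterized `/search` URLs take two-thirds of Googlebot's requests and account for the only error. That is the kind of crawl waste a model trained on these features would learn to flag.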

Automated Technical Diagnostics and Anomaly Detection

Machine learning can continuously monitor technical health signals across massive data streams far beyond what manual audits or standard crawlers can achieve.

Applications include:

- Performance anomaly detection: identifying when Core Web Vitals deviate from normal patterns.

- Canonical and duplication prediction: using NLP-based similarity metrics to identify near-duplicate or conflicting canonical tags.

- Redirect chain prediction: detecting risk areas for redirect loops or orphaned pages using graph algorithms.

- Indexation modeling: predicting which URLs are most likely to be indexed or dropped, based on metadata patterns and internal link signals.

By training models on historical performance data, teams can automate the detection of irregularities and flag emerging issues in real time, turning reactive audits into predictive maintenance systems.
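A simple statistical baseline for anomaly detection is a rolling z-score: compare each day's value with the mean and standard deviation of the preceding window. The sketch below applies it to a hypothetical series of daily indexed-page counts. The 7-day window and threshold of 3 are assumptions to tune, and learned models typically replace or complement this baseline once enough history exists.

```python
import statistics

def rolling_zscore_anomalies(values, window=7, threshold=3.0):
    """Flag (index, z) pairs where a value deviates more than `threshold`
    standard deviations from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mean = statistics.fmean(history)
        stdev = statistics.stdev(history)
        if stdev == 0:
            # A perfectly flat history: any change at all is unusual.
            if values[i] != mean:
                anomalies.append((i, float("inf") if values[i] > mean else float("-inf")))
            continue
        z = (values[i] - mean) / stdev
        if abs(z) > threshold:
            anomalies.append((i, round(z, 1)))
    return anomalies

# Hypothetical daily counts of indexed pages from a coverage report.
indexed_pages = [10200, 10150, 10230, 10180, 10210, 10190, 10220, 10205, 8900, 10215]
print(rolling_zscore_anomalies(indexed_pages))
```

Only day 8 (the drop to 8,900) is flagged. The anomaly then sits inside the next window and inflates its standard deviation. Robust statistics such as the median and median absolute deviation reduce that effect.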

Structured Data Validation and Schema Optimization

Structured data has a direct impact on how search engines interpret and display content. Yet, ensuring consistent schema implementation across hundreds of templates is a challenge. Machine learning automates schema validation and even recommends enhancements.

With ML-based schema validation:

- NLP models can scan page content to verify that schema properties align with on-page entities.

- Clustering algorithms can detect missing or inconsistent schema across templates.

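- Predictive scoring models estimate the impact of schema types (e.g., FAQ, Product, HowTo) on CTR or snippet visibility. Because Google limited FAQ rich results to authoritative government and health sites and retired HowTo rich results in 2023, such estimates should reflect the SERP features currently available.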

This helps prioritize which structured data changes will yield the highest organic visibility gains. Over time, these models learn which markup variations produce the best results, creating a self-optimizing schema framework.
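Before any model scores schema impact, templates need consistent markup. The sketch below is a rule-based check, not a machine learning model, of the kind that can generate labels or features for the NLP and scoring models described above. It does three things:

- Extracts JSON-LD blocks with a regular expression.
- Flags properties missing from a hypothetical per-type checklist (`EXPECTED`).
- Verifies that the schema `name` actually appears in the page text.

A real pipeline would use an HTML parser and take required properties from Google's structured data documentation.

```python
import json
import re

JSONLD_RE = re.compile(r'<script[^>]+type="application/ld\+json"[^>]*>(.*?)</script>', re.S | re.I)
TAG_RE = re.compile(r"<[^>]+>")

# Hypothetical per-type checklist; align it with your templates and Google's documentation.
EXPECTED = {"Product": ["name", "offers"], "FAQPage": ["mainEntity"]}

def audit_schema(html):
    """Return human-readable issues for the JSON-LD blocks in one HTML page."""
    visible_text = TAG_RE.sub(" ", JSONLD_RE.sub(" ", html)).lower()
    issues = []
    for raw in JSONLD_RE.findall(html):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            issues.append("invalid JSON-LD")
            continue
        for item in data if isinstance(data, list) else [data]:
            kind = item.get("@type", "unknown")
            if isinstance(kind, list):  # multi-typed entities: check the first type only
                kind = kind[0] if kind else "unknown"
            for prop in EXPECTED.get(kind, []):
                if prop not in item:
                    issues.append(f"{kind}: missing '{prop}'")
            name = item.get("name")
            if isinstance(name, str) and name.lower() not in visible_text:
                issues.append(f"{kind}: name '{name}' not found in page text")
    return issues

html = """<html><head>
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "Product", "name": "Trail Runner 2"}</script>
</head><body><h1>Trail Runner X</h1><p>Stability shoe.</p></body></html>"""
print(audit_schema(html))
```

Run across every template, checks like these produce the consistency labels that clustering and scoring models can then learn from.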

Internal Link Optimization with Graph Learning

Internal linking directly influences how authority and crawl priority propagate across a domain. Machine learning can model an internal link structure as a directed graph, where pages are nodes and hyperlinks are edges. By analyzing this graph, ML algorithms can:

- Identify underlinked pages that lack sufficient authority flow.

- Recommend new internal link opportunities based on semantic similarity between pages.

- Calculate link efficiency ratios to measure how effectively link equity is distributed.

- Optimize link architecture to improve PageRank flow and topical reinforcement.

Graph-based reinforcement systems can even simulate the impact of adding or removing internal links before implementation, preventing SEO regressions and accelerating optimization cycles.
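The internal link-flow analysis described above can be approximated with the classic PageRank power iteration over an internal link graph. This sketch uses the textbook formulation with a 0.85 damping factor. Google's current link signals are not public, so the scores indicate relative internal link equity rather than anything Google computes. The site structure is hypothetical.

```python
def internal_pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank by power iteration over an internal link graph.

    links: dict mapping each URL to the URLs it links to. Pages without
    outlinks spread their score evenly over all pages.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1 - damping) / n for p in pages}
        for page in pages:
            targets = set(links.get(page, ()))
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                for t in pages:
                    new_rank[t] += damping * rank[page] / n
        rank = new_rank
    return rank

# Hypothetical site: nothing links to the overpronation guide.
links = {
    "/": ["/shoes", "/blog"],
    "/shoes": ["/", "/shoes/trail"],
    "/blog": ["/", "/shoes"],
    "/shoes/trail": ["/shoes"],
    "/guides/overpronation": ["/shoes"],
}
for url, score in sorted(internal_pagerank(links).items(), key=lambda kv: -kv[1]):
    print(f"{score:.3f}  {url}")
```

No internal links point to the guide page, so it receives only the baseline score of (1 - 0.85) / 5 = 0.03 and ranks last. That makes it a clear underlinked candidate for new links from semantically related pages.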

Technical Health Scoring with Feature Engineering

Machine learning models can aggregate multiple technical metrics into composite “health scores.” By training regression or classification models on historical ranking and traffic data, it’s possible to quantify how much each technical factor contributes to performance.

Example feature inputs include:

- Mobile usability metrics.

- Structured data completeness percentage.

- Internal link depth.

- Page speed scores.

- Server response consistency.

The resulting model generates a dynamic technical SEO health score that updates as site conditions evolve. This allows teams to prioritize fixes that yield the greatest performance returns instead of relying on static checklists.
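The regression approach to health scoring can be sketched as a linear model fitted by gradient descent on standardized features, so each weight reads as the effect of a one-standard-deviation change. The feature names and values below are hypothetical. The target is generated from a known formula purely so the fit can be checked. On real data, the weights show association, not causation.

```python
import statistics

def standardize(columns):
    """Z-score each feature column so learned weights are comparable."""
    scaled = []
    for col in columns:
        mu, sd = statistics.fmean(col), statistics.pstdev(col) or 1.0
        scaled.append([(x - mu) / sd for x in col])
    return scaled

def fit_linear(columns, target, lr=0.1, epochs=2000):
    """Least-squares linear regression fitted with batch gradient descent."""
    cols = standardize(columns)
    n, k = len(target), len(cols)
    w, b = [0.0] * k, 0.0
    for _ in range(epochs):
        errors = [b + sum(w[j] * cols[j][i] for j in range(k)) - target[i] for i in range(n)]
        b -= lr * sum(errors) / n
        for j in range(k):
            w[j] -= lr * sum(e * x for e, x in zip(errors, cols[j])) / n
    return w, b

# Hypothetical per-page features.
speed = [90, 50, 70, 40, 80, 60]     # page speed score
depth = [3, 1, 4, 2, 1, 5]           # clicks from the homepage
schema = [20, 80, 60, 100, 40, 0]    # structured data completeness, %
# Synthetic target built from a known formula so the fit can be checked.
traffic_index = [10 + 0.5 * s - 3 * d + 0.2 * c for s, d, c in zip(speed, depth, schema)]

w, b = fit_linear([speed, depth, schema], traffic_index)
for name, weight in zip(["page_speed", "link_depth", "schema_pct"], w):
    print(f"{name}: {weight:+.2f} per standard deviation")
```

To score a new page, store each feature's training mean and standard deviation, apply the same standardization, and compute `b + sum(w * x)`.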

Automation Pipelines for Continuous Optimization

Machine learning transforms technical SEO into a continuous, automated process. Integrating ML systems with DevOps pipelines enables real-time diagnostics and automated alerts during deployment.

Common automation workflows include:

- Running ML validation checks automatically during content or code releases.

- Triggering re-crawls when the model detects significant template or content changes.

- Generating daily anomaly reports to highlight deviations in indexation or ranking distributions.

At this level of sophistication, the SEO system becomes self-monitoring and partially self-correcting, reducing manual intervention and improving stability across iterations.

Scaling Technical SEO Through Intelligent Automation

As ML-driven systems evolve, they reduce dependency on manual audits and allow technical SEO specialists to focus on strategy and architecture. Intelligent automation delivers three key outcomes:

- Scalability: handling millions of URLs and signals with minimal human input.

- Consistency: ensuring uniform quality control across multiple environments.

- Speed: detecting and addressing issues faster than human-led review cycles.

However, building and maintaining these pipelines requires technical acumen, data infrastructure, and integration capabilities. This is where specialized SEO technology partners or agencies provide value, offering the engineering depth and cross-domain expertise necessary to scale intelligent automation reliably.

Data Governance and Model Performance in SEO Systems

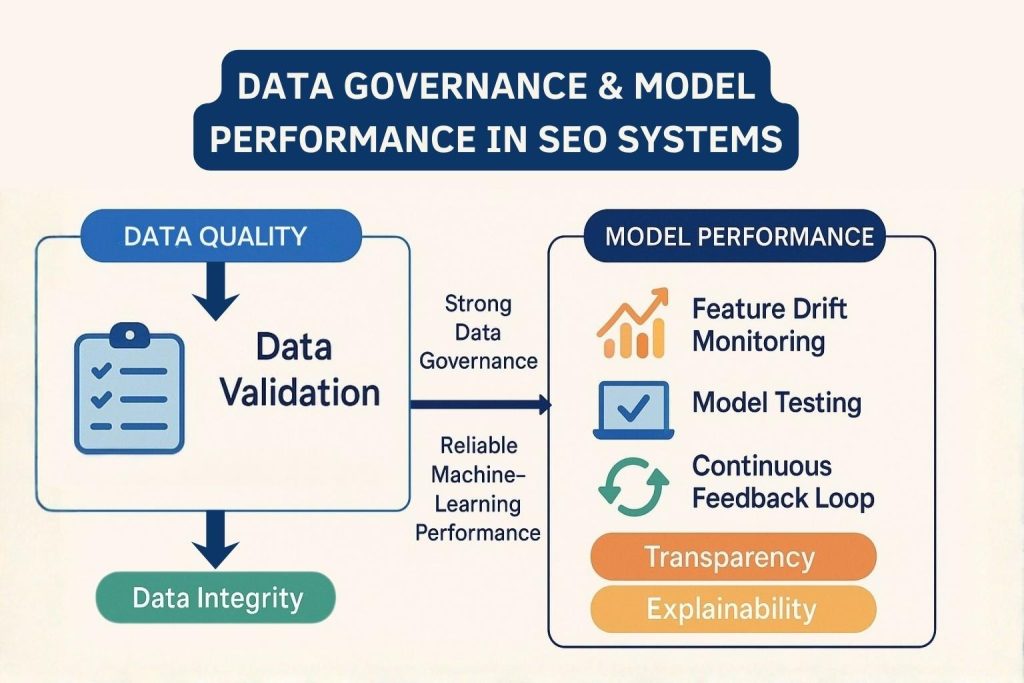

Ensuring Data Quality and Integrity

Machine learning in SEO depends entirely on the quality of its data. A flawed dataset produces biased, unstable, or misleading models that can derail optimization strategies. Robust data governance frameworks ensure the data feeding into models remains accurate, consistent, and representative of real-world search behavior.

Key principles include:

- Data validation pipelines: Automating checks for missing, duplicate, or corrupted records from sources such as Google Search Console, Analytics, or crawl logs.

- Normalization procedures: Converting metrics with very different ranges, such as CTR, impressions, and dwell time, to standardized scales for consistent model training.

- Outlier detection: Using statistical or clustering models to identify anomalies (e.g., sudden traffic spikes from bot traffic or seasonal campaigns).

- Version control for datasets: Maintaining a timestamped lineage of all feature sets to ensure reproducibility and auditability.
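The validation, normalization, and outlier steps above can be sketched in plain Python. This is a minimal illustration, not a production pipeline. It assumes each exported row is a dict with hypothetical `url`, `date`, `clicks`, and `impressions` keys; real Search Console or crawl-log exports use their own schemas. Outliers are flagged with a median/MAD-based modified z-score and the conventional 3.5 cutoff, which is one common statistical choice among several.

```python
from statistics import median

REQUIRED = ("url", "date", "clicks", "impressions")  # hypothetical export schema


def validate_rows(rows):
    """Split rows into clean records and (row index, reason) issues."""
    seen, clean, issues = set(), [], []
    for i, row in enumerate(rows):
        if any(row.get(f) is None for f in REQUIRED):
            issues.append((i, "missing field"))
        elif (row["url"], row["date"]) in seen:
            issues.append((i, "duplicate url/date"))
        elif row["impressions"] < 0 or row["clicks"] > row["impressions"]:
            issues.append((i, "corrupted counts"))
        else:
            seen.add((row["url"], row["date"]))
            clean.append(row)
    return clean, issues


def min_max(values):
    """Rescale a metric to [0, 1] so features share a common scale."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


def mad_outliers(values, threshold=3.5):
    """Indices whose modified z-score (median and MAD based) exceeds the threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold]


rows = [
    {"url": "/a", "date": "2025-01-01", "clicks": 10, "impressions": 200},
    {"url": "/a", "date": "2025-01-01", "clicks": 10, "impressions": 200},    # duplicate
    {"url": "/b", "date": "2025-01-01", "clicks": None, "impressions": 150},  # missing value
    {"url": "/c", "date": "2025-01-01", "clicks": 50, "impressions": 40},     # clicks > impressions
    {"url": "/d", "date": "2025-01-01", "clicks": 12, "impressions": 300},
]
clean, issues = validate_rows(rows)
print([r["url"] for r in clean], issues)
print(min_max([r["impressions"] for r in clean]))    # [0.0, 1.0]
print(mad_outliers([100, 104, 98, 101, 99, 103, 950]))  # [6]: a bot-like spike
```

The median/MAD score is used instead of a mean/standard-deviation z-score because a single large spike inflates the standard deviation and can hide itself.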

Clean, structured data forms the foundation for any credible SEO machine learning initiative.

Feature Stability and Model Reliability

Search behavior, site architecture, and algorithm dynamics evolve constantly, so the relationships between features and outcomes shift over time. This phenomenon, known as concept drift (often discussed alongside data or feature drift, where the input distributions themselves change), poses one of the biggest risks in ML-driven SEO.

To manage this effectively:

- Continuously monitor feature importance and correlation coefficients for significant deviations.

- Use drift detection algorithms to flag when models’ predictive accuracy begins to decline.

- Implement scheduled retraining cycles aligned with major algorithm updates or quarterly business cycles.

- Apply ensemble models that adjust weights dynamically to mitigate performance degradation.
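One common way to implement the first two checks is to compare each feature's recent distribution against its training-time baseline. The sketch below computes the Population Stability Index (PSI). PSI is swapped in here for illustration, since the checklist does not prescribe a specific drift algorithm. The 0.2 alert level is a widely used rule of thumb, not a standard, and the feature values are hypothetical.

```python
import math


def psi(baseline, recent, bins=5, eps=1e-6):
    """Population Stability Index between a baseline sample and a recent sample."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    b, r = shares(baseline), shares(recent)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))


def drifted(baseline, recent, threshold=0.2):
    """True when a feature has shifted enough to warrant retraining or review."""
    return psi(baseline, recent) > threshold


baseline_feature = list(range(100))                   # hypothetical values at training time
recent_feature = [v + 50 for v in baseline_feature]   # same feature, shifted upward
print(psi(baseline_feature, baseline_feature))        # 0.0
print(drifted(baseline_feature, recent_feature))      # True
```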

A stable SEO machine learning system recognizes when its own assumptions no longer hold true and triggers recalibration automatically.

Model Validation and Cross-Checking

Before deploying a model into a live SEO workflow, validation is essential. Validation ensures predictions align with reality and that the system remains transparent to human interpreters.

Professional-grade validation techniques include:

- Cross-validation: Partitioning historical SEO data into multiple training and validation folds (kept in time order for ranking and traffic data) to measure prediction reliability.

- Backtesting: Training models only on data up to a past cutoff date and checking how well they predict the known outcomes that followed.

- Explainability tools: Using interpretability frameworks such as SHAP or LIME to identify which features most strongly influence ranking predictions.

- Error analysis: Quantifying false positives and negatives to improve threshold tuning.
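Ranking and traffic data are time-ordered, so random splits can leak future information into training. The sketch below shows expanding-window (walk-forward) validation, a time-series form of cross-validation that also serves as a backtest. At each cutoff the model is fit only on earlier weeks and scored on the weeks that follow. The linear-trend "model" and the weekly click series are hypothetical placeholders for whatever model and data a team actually uses.

```python
def fit_trend(ys):
    """Least-squares line through (t, y) for t = 0..n-1; returns (intercept, slope)."""
    n = len(ys)
    x_bar, y_bar = (n - 1) / 2, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in range(n))
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(range(n), ys)) / sxx
    return y_bar - slope * x_bar, slope


def walk_forward_mae(series, initial=4, horizon=2):
    """Train on everything before each cutoff, then score MAE on the next `horizon` points."""
    scores, start = [], initial
    while start + horizon <= len(series):
        intercept, slope = fit_trend(series[:start])  # never sees data at or after the cutoff
        preds = [intercept + slope * t for t in range(start, start + horizon)]
        actual = series[start:start + horizon]
        scores.append(sum(abs(p - a) for p, a in zip(preds, actual)) / horizon)
        start += horizon
    return scores


weekly_clicks = [120, 126, 131, 140, 138, 150, 155, 161, 158, 170]  # hypothetical
print([round(s, 1) for s in walk_forward_mae(weekly_clicks)])
```

Rising fold errors in later windows are an early hint of the drift discussed in the previous section.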

This process builds confidence that model outputs can be trusted in high-stakes SEO decision-making environments.

Monitoring and Continuous Feedback

Model monitoring is not a one-time setup; it is an ongoing process integrated into SEO operations. Each prediction or recommendation from an ML system should feed back into a continuous learning loop.

Effective monitoring practices include:

- Tracking prediction error metrics (MAE, RMSE) and goodness-of-fit measures (R²) across different keyword segments.

- Comparing predicted ranking trends with actual SERP movement after algorithm rollouts.

- Logging and versioning every model deployment to maintain an audit trail.

- Establishing performance thresholds that trigger retraining or human review when deviations exceed set limits.
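Per-segment error tracking and threshold-based escalation can be sketched as below. This is a minimal example under stated assumptions. The records pair a hypothetical predicted ranking position with the observed one, the segment names are invented, and the 3.0 MAE limit is an arbitrary value a team would set for itself.

```python
import math
from collections import defaultdict


def segment_metrics(records):
    """records: iterable of (segment, predicted, actual) tuples."""
    errors = defaultdict(list)
    for segment, predicted, actual in records:
        errors[segment].append(predicted - actual)
    return {
        seg: {
            "mae": sum(abs(e) for e in errs) / len(errs),
            "rmse": math.sqrt(sum(e * e for e in errs) / len(errs)),
        }
        for seg, errs in errors.items()
    }


def segments_needing_review(metrics, mae_limit):
    """Segments whose error exceeds the agreed limit, for retraining or human review."""
    return sorted(seg for seg, m in metrics.items() if m["mae"] > mae_limit)


records = [
    ("brand", 2, 2), ("brand", 3, 2),              # (segment, predicted position, actual position)
    ("long-tail", 8, 15), ("long-tail", 12, 6),
]
metrics = segment_metrics(records)
print(metrics["brand"]["mae"], segments_needing_review(metrics, mae_limit=3.0))  # 0.5 ['long-tail']
```

RMSE is reported alongside MAE because it weights large misses more heavily. A segment whose RMSE is much higher than its MAE usually has a few badly mispredicted keywords rather than a uniform bias.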

This continuous feedback loop keeps SEO optimization aligned with actual search engine behavior.

Transparency and Interpretability

Machine learning SEO strategies are only as effective as they are explainable. When models drive ranking decisions, it becomes vital for SEO professionals to understand why those decisions occur.

Interpretability techniques help:

- Identify the key ranking signals contributing to performance.

- Communicate model logic to stakeholders and clients without overcomplicating the technical details.

- Prevent overfitting to misleading patterns (such as temporary traffic surges).

- Support compliance with data governance and ethical reporting standards.
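SHAP and LIME, mentioned earlier, are dedicated interpretability frameworks. The sketch below swaps in a simpler, model-agnostic technique instead: permutation importance. It shuffles one feature at a time and measures how much the error rises. The model, the feature names, and the data are hypothetical stand-ins chosen so that only the first feature matters.

```python
import random


def mae(model, X, y):
    return sum(abs(model(row) - target) for row, target in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average increase in MAE when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mae(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            increases.append(mae(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def model(row):
    """Stand-in for a trained model: clicks depend only on the first feature."""
    return 3 * row[0]


# Hypothetical features per page: [referring_domains, day_of_week]
X = [[d, d % 7] for d in range(1, 9)]
y = [3 * d for d in range(1, 9)]
print(permutation_importance(model, X, y))  # first value > 0, second exactly 0.0
```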

The goal is to create models that inform, not obscure. A transparent SEO machine learning framework enhances credibility and supports data-driven decision-making across organizations.

Establishing Governance for Continuous Learning

Finally, a well-defined governance model ensures consistency, accountability, and scalability as SEO systems evolve. Governance combines documentation, workflow management, and periodic audits to maintain operational control.

Core elements include:

- Standardized documentation for model design, data sources, and evaluation metrics.

- Access controls that limit who can modify models or training data.

- Performance reviews to compare predicted results with business outcomes.

- Archival protocols to maintain historical versions of both data and models.
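Documentation and archival can start as simply as an append-only registry that ties each model version to a fingerprint of its training data. The sketch below is a hypothetical structure with illustrative field names. Teams often use dedicated tools for this instead, such as MLflow's model registry or DVC.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


def fingerprint(records):
    """Stable SHA-256 hash of a dataset, so a model can be traced to its exact training data."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class ModelRegistry:
    versions: list = field(default_factory=list)

    def register(self, name, training_data, metrics, data_sources):
        entry = {
            "name": name,
            "version": len(self.versions) + 1,
            "data_hash": fingerprint(training_data),
            "data_sources": data_sources,
            "metrics": metrics,
            "registered_at": datetime.now(timezone.utc).isoformat(),
        }
        self.versions.append(entry)  # archive: earlier versions are never overwritten
        return entry


registry = ModelRegistry()
data_v1 = [{"url": "/a", "clicks": 10}]
registry.register("ctr-model", data_v1, {"mae": 0.8}, ["search_console"])
registry.register("ctr-model", data_v1 + [{"url": "/b", "clicks": 4}], {"mae": 0.7}, ["search_console"])
print([(v["version"], v["data_hash"][:8]) for v in registry.versions])
```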

Governance ensures that as teams or agencies change, institutional knowledge and operational reliability remain intact. It transforms machine learning from a one-time experiment into a sustainable SEO competency.

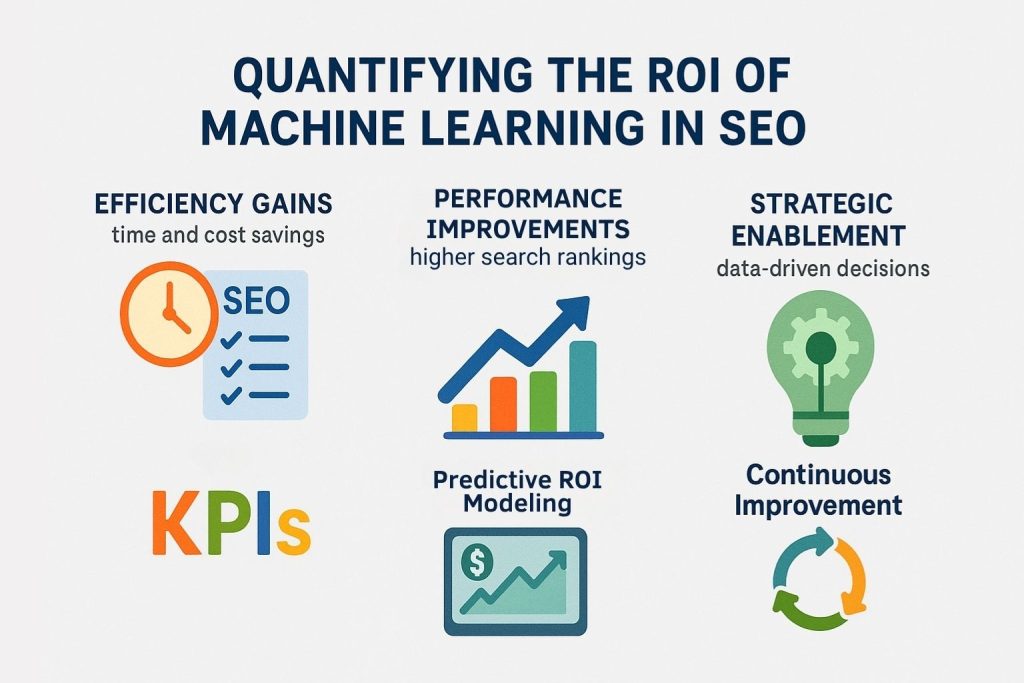

Quantifying the ROI of Machine Learning in SEO

Establishing the Measurement Framework

The adoption of machine learning in SEO requires significant investment in data infrastructure, talent, and experimentation. Measuring the return on these investments demands a structured framework that links machine learning outcomes directly to business value. According to Mordor Intelligence (2025), the global SEO services market is valued at approximately US $74.9 billion in 2025 and is projected to reach US $127.3 billion by 2030. Given that scale, organizations are under increasing pressure to justify SEO investments through data-driven, machine-learning-based ROI models rather than relying solely on subjective performance metrics.

A robust measurement model should include the following three key dimensions:

- Efficiency Gains: The time and cost reductions achieved through automation, such as content tagging, log analysis, or keyword clustering.

- Performance Improvements: The direct impact on organic visibility, CTR, ranking stability, and conversions.

- Strategic Enablement: The ability to execute new types of analysis, forecasting, or optimization previously impossible at scale.

This tri-layered framework ensures that machine learning investments are evaluated from both technical and organizational perspectives.

Defining Key Performance Indicators (KPIs)

To quantify ROI accurately, organizations must align technical metrics with business outcomes. The following KPIs are often used to measure the impact of ML SEO initiatives:

- Ranking predictability: The percentage reduction in ranking volatility after implementing predictive models.

- Crawl efficiency ratio: The proportion of total crawl budget allocated to high-value pages.

- Automation time savings: Hours reduced from repetitive manual audits and keyword clustering.

- Organic traffic uplift: The incremental increase in clicks or impressions attributed to ML-driven insights.

- Conversion optimization rate: The improvement in organic conversions linked to machine learning recommendations.

- Revenue attribution: The financial value derived from organic sessions improved by ML-optimized pages.
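The KPIs above are described in words rather than formulas. The sketch below shows one possible way to operationalize two of them. It assumes ranking volatility is measured as the standard deviation of daily positions, and crawl efficiency as the share of crawler hits (for example, from server logs) that land on URLs a team has labeled high value. All numbers are hypothetical.

```python
from statistics import pstdev


def volatility_reduction(before_positions, after_positions):
    """Percentage drop in the standard deviation of daily ranking positions."""
    before, after = pstdev(before_positions), pstdev(after_positions)
    return (before - after) / before * 100


def crawl_efficiency_ratio(crawl_hits, high_value_urls):
    """Share of crawler requests that landed on high-value URLs."""
    total = sum(crawl_hits.values())
    valuable = sum(hits for url, hits in crawl_hits.items() if url in high_value_urls)
    return valuable / total


print(volatility_reduction([5, 9, 5, 9], [6, 8, 6, 8]))  # 50.0
print(crawl_efficiency_ratio({"/product/a": 60, "/product/b": 20, "/tag/x": 20},
                             {"/product/a", "/product/b"}))  # 0.8
```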

These KPIs provide quantifiable evidence that machine learning produces both operational and financial impact.

Modeling the Economic Impact

To move from KPIs to actual financial ROI, data teams can model cost-benefit scenarios. This involves estimating both the direct and indirect value generated by ML SEO initiatives.

Direct Impact:

- Increased Organic Conversions: Assign a dollar value to conversion rate improvements influenced by machine learning insights.

- Reduced Cost of Manual Labor: Measure savings from automated processes replacing human-driven repetitive tasks.

- Decreased Downtime or Error Cost: Quantify the impact of predictive maintenance or anomaly detection on site uptime and indexing efficiency.

Indirect Impact:

- Faster Decision Cycles: Value the ability to respond rapidly to algorithm updates.

- Better Cross-Channel Performance: Measure how predictive SEO enhances SEM, content marketing, and social targeting efficiency.

- Data Reuse and Integration: Assess the long-term value of reusable data pipelines that benefit multiple marketing functions.

A comprehensive economic model should aggregate these values into a unified ROI estimate over 6-, 12-, and 24-month intervals.
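The aggregation step can be expressed as cumulative ROI, (benefit − cost) / cost, evaluated at each horizon. This is a minimal sketch. The monthly benefit stands in for the dollar values a team assigns to the direct and indirect items above, and every figure is hypothetical.

```python
def roi_by_horizon(monthly_benefit, monthly_cost, upfront_cost, horizons=(6, 12, 24)):
    """Cumulative ROI = (benefit - cost) / cost at each horizon, in months."""
    results = {}
    for months in horizons:
        benefit = sum(monthly_benefit[:months])
        cost = upfront_cost + sum(monthly_cost[:months])
        results[months] = (benefit - cost) / cost
    return results


# Hypothetical figures (USD per month)
benefit = [5000] * 24   # e.g., added conversion value plus labor savings
run_cost = [1000] * 24  # e.g., compute, tooling, and maintenance
roi = roi_by_horizon(benefit, run_cost, upfront_cost=12000)
print({m: round(r, 2) for m, r in roi.items()})  # {6: 0.67, 12: 1.5, 24: 2.33}
```

Because the upfront build cost is spread over more months, ROI rises with the horizon even with constant monthly figures. This is one reason to report several intervals rather than one.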

Attribution and Benchmarking

Attribution models are essential to differentiate between improvements caused by machine learning and those driven by other marketing efforts. Common approaches include:

- Incremental lift analysis: Comparing ML-optimized pages against control groups that follow traditional SEO methods.

- Time-series decomposition: Isolating trends, seasonality, and residuals to identify machine learning’s unique contribution.

- Benchmarking: Measuring your organization’s SEO metrics against competitors who have not yet adopted ML-driven workflows.

Establishing a baseline before implementing machine learning allows for clear visibility into how performance metrics evolve after deployment.
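Incremental lift against a control group is often estimated with a difference-in-differences calculation. This method is swapped in here as one concrete option. It subtracts the control group's before/after change from the ML-optimized group's change, so seasonality or algorithm updates that hit both groups cancel out. The method assumes both groups would have trended in parallel without the change. The click figures are hypothetical.

```python
from statistics import mean


def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Incremental lift: change in the ML-optimized group minus change in the control group."""
    return (mean(treated_after) - mean(treated_before)) - (mean(control_after) - mean(control_before))


# Hypothetical average weekly clicks per page, before and after deployment
treated_before, treated_after = [100, 100], [130, 130]
control_before, control_after = [100, 100], [110, 110]
lift = diff_in_diff(treated_before, treated_after, control_before, control_after)
print(lift, lift / mean(treated_before) * 100)  # absolute lift and lift as % of baseline
```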

Predictive ROI Forecasting

Machine learning itself can be used to forecast ROI. By modeling historical campaign data, organizations can predict the potential future impact of optimization projects.

This involves training regression or simulation models on previous initiatives to estimate future performance uplift under similar conditions.
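A deliberately small version of this idea fits a single-predictor least-squares line from past project budgets to observed uplift, then projects candidate budgets. The data below are hypothetical and perfectly linear for readability. A real model would use more features and report prediction intervals, not point estimates.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one predictor: returns (intercept, slope)."""
    x_bar, y_bar = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum((x - x_bar) ** 2 for x in xs)
    return y_bar - slope * x_bar, slope


# Hypothetical past initiatives: budget (thousand USD) vs. incremental monthly organic sessions
budgets = [10, 20, 30, 40]
uplifts = [1500, 2500, 3500, 4500]
intercept, slope = fit_line(budgets, uplifts)

# Scenario testing: projected uplift for candidate budget levels
for budget in (25, 50):
    print(budget, intercept + slope * budget)
```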

Predictive ROI modeling enables:

- Prioritization: Identifying which SEO initiatives will produce the highest return.

- Scenario testing: Comparing investment levels and expected payoffs for multiple strategies.

- Budget justification: Providing data-backed projections for executive approval or stakeholder reporting.

Predictive ROI frameworks turn SEO strategy into a quantifiable business science, strengthening alignment between technical and executive teams.

Integrating ROI Measurement into Continuous Improvement

The most advanced SEO operations treat ROI measurement as an ongoing process rather than a post-project report. Integrating ROI tracking into machine learning pipelines creates a feedback loop where every optimization informs the next cycle.

For example:

- The system logs estimated ROI per model deployment, correlating predicted outcomes with realized performance.

- Dashboards display real-time value generation metrics.

- Underperforming models trigger re-evaluation or retraining automatically.
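The comparison of predicted and realized ROI can be sketched as a simple realization ratio. This is a minimal illustration. The model names, ROI figures, and the 0.7 cutoff are hypothetical, and the ratio assumes the predicted ROI is positive.

```python
def review_deployments(deployments, min_realization=0.7):
    """Return models whose realized ROI fell well short of the ROI predicted at launch."""
    flagged = []
    for d in deployments:
        ratio = d["realized_roi"] / d["predicted_roi"]  # assumes predicted_roi > 0
        if ratio < min_realization:
            flagged.append(d["model"])
    return flagged


deployments = [
    {"model": "internal-links-v3", "predicted_roi": 1.2, "realized_roi": 1.1},
    {"model": "title-rewrite-v2", "predicted_roi": 0.9, "realized_roi": 0.3},
]
print(review_deployments(deployments))  # ['title-rewrite-v2']
```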

By connecting performance tracking with model monitoring, SEO leaders can continuously prove the financial impact of their machine learning initiatives while ensuring the system keeps improving over time.

Translating ROI into Strategic Leverage

Quantifying ROI does more than justify investment; it establishes strategic leverage. When stakeholders see measurable outcomes tied to intelligent automation, machine learning transitions from an experimental project to an operational core capability.

Organizations that build this maturity:

- Operate SEO as a predictive, revenue-generating discipline, not a reactive marketing function.

- Attract greater cross-departmental support for scaling machine learning initiatives.

- Strengthen collaboration between marketing, data science, and executive leadership.

At this point, SEO becomes part of a broader intelligent marketing architecture, driving performance, efficiency, and innovation simultaneously.

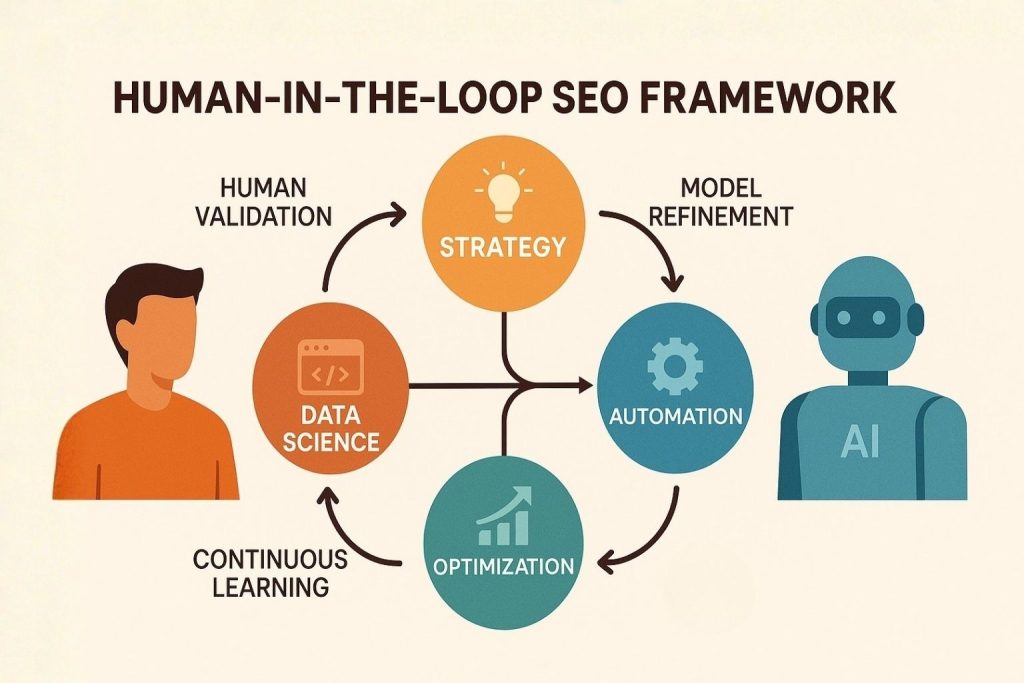

The Human-in-the-Loop SEO Framework

The Need for Human Oversight in Machine Learning SEO

Machine learning has automated many aspects of search optimization, but total automation is neither realistic nor desirable. SEO is influenced by evolving user intent, linguistic nuance, and cultural context, elements that algorithms cannot fully interpret without human guidance.

A Human-in-the-Loop (HITL) framework ensures that machine learning in SEO remains accurate, ethical, and aligned with broader marketing strategy.

In this framework, algorithms handle repetitive or large-scale analytical tasks, while human experts validate assumptions, fine-tune models, and interpret ambiguous outputs. The collaboration between human judgment and algorithmic intelligence creates a feedback ecosystem where models continually improve under informed supervision.

Core Principles of the Human-in-the-Loop SEO Framework

A well-designed HITL framework for SEO rests on several operational pillars:

- Human Validation of Model Outputs:

Data scientists and SEO strategists review model-generated recommendations for contextual accuracy before implementation. For instance, an algorithm might classify a keyword as “low value,” but a strategist might recognize its relevance for brand visibility or strategic positioning.

- Iterative Model Refinement:

Human input is incorporated into model retraining cycles, ensuring that qualitative insights enhance quantitative predictions.

- Cross-Functional Collaboration:

Data engineers, SEO professionals, and content strategists collaborate continuously, forming a multidisciplinary team that balances creativity and analytics.

- Ethical and Brand Alignment:

Humans ensure that ML-generated optimizations reflect brand tone, comply with search guidelines, and respect user experience factors that algorithms cannot enforce on their own.

This structure merges the scalability of AI with the discernment of human expertise, ensuring both efficiency and authenticity.

Human Roles Across the ML SEO Lifecycle

Humans contribute at every stage of the machine learning SEO pipeline. Their intervention helps catch model drift, bias, and strategic misalignment before they affect results.

- During Data Collection: Humans evaluate data relevance and eliminate noisy or biased samples that could distort training.

- During Feature Engineering: Experts identify variables that reflect true SEO dynamics, for example, how topical authority evolves or how brand search influences organic CTR.

- During Model Training and Evaluation: Specialists test assumptions, validate outputs, and challenge anomalies the system might overlook.

- During Deployment and Optimization: SEO professionals interpret insights, deciding when to follow or override algorithmic recommendations.

This human involvement ensures machine learning remains a tool for augmentation rather than substitution.

Enhancing Interpretability and Accountability

Interpretability is critical for professional SEO teams managing ML-driven systems. While algorithms may find patterns in ranking or behavioral data, human experts must explain and justify those findings to stakeholders.

Practical techniques include:

- Creating explainability dashboards that visualize model decision pathways and feature influence.

- Maintaining review logs where SEO managers approve or reject ML recommendations.

- Conducting peer audits for high-impact algorithmic decisions to prevent oversight or unintended consequences.
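The review-log idea can be sketched as a small routing gate. High-impact or low-confidence recommendations go to a person, every verdict is logged, and the verdicts become labels for the next retraining cycle. The class names, the 0.8 confidence floor, and the example keyword are all hypothetical. The example mirrors the earlier case of a strategist overriding a "low value" classification.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    keyword: str
    action: str
    confidence: float  # the model's own confidence score, 0 to 1
    high_impact: bool


@dataclass
class ReviewQueue:
    confidence_floor: float = 0.8
    log: list = field(default_factory=list)

    def route(self, rec):
        """High-impact or low-confidence recommendations go to a human; the rest auto-apply."""
        if rec.high_impact or rec.confidence < self.confidence_floor:
            return "human_review"
        return "auto_apply"

    def record_decision(self, rec, reviewer, approved, note=""):
        """Append the reviewer's verdict to the audit log."""
        self.log.append({"keyword": rec.keyword, "action": rec.action,
                         "reviewer": reviewer, "approved": approved, "note": note})

    def retraining_labels(self):
        """Reviewer verdicts become labeled examples for the next retraining cycle."""
        return [(e["keyword"], e["action"], e["approved"]) for e in self.log]


queue = ReviewQueue()
rec = Recommendation("acme pricing", "deprioritize", confidence=0.91, high_impact=True)
print(queue.route(rec))  # human_review
queue.record_decision(rec, reviewer="seo-lead", approved=False, note="supports brand visibility")
print(queue.retraining_labels())  # [('acme pricing', 'deprioritize', False)]
```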

This process builds organizational accountability and fosters trust between technical teams and executives.

The Balance Between Automation and Human Strategy

Automation is valuable, but it should never replace strategic reasoning. Machine learning can analyze patterns faster than any human, yet it cannot define strategic objectives or interpret creative nuance.

Professionals determine:

- Which markets or topics align with long-term brand positioning.

- When to prioritize visibility over conversion, or authority over volume.

- How to craft narratives that resonate with both algorithms and audiences.

Human strategic direction anchors automation within meaningful objectives, ensuring that technical performance supports holistic brand growth.

Scalability Through Human-Machine Collaboration

The goal of Human-in-the-Loop SEO is scalability with precision. As machine learning handles data-heavy processes like clustering millions of keywords or analyzing vast link graphs, humans refine and interpret outcomes, guiding continual improvement.

Key outcomes include:

- Reduced bias: Human review catches algorithmic blind spots in language and intent interpretation.

- Faster adaptation: Expert feedback accelerates model retraining when search patterns or content frameworks change.

- Higher trust: Stakeholders rely on recommendations that are both data-backed and contextually sound.

Organizations that institutionalize this collaboration transform SEO from an automated process into an intelligent ecosystem of co-evolving human and machine intelligence.

When Expert Partnerships Add Value

Not all organizations have in-house capacity for full Human-in-the-Loop SEO systems. In such cases, collaboration with specialized SEO or data-focused agencies can accelerate deployment. These partners bring the engineering expertise, domain experience, and operational frameworks required to build sustainable human-machine collaboration at scale.

An external team can establish model governance, implement interpretable ML systems, and train internal staff on data-driven workflows. The result is a balance between automation efficiency and strategic control, the hallmark of mature SEO operations.

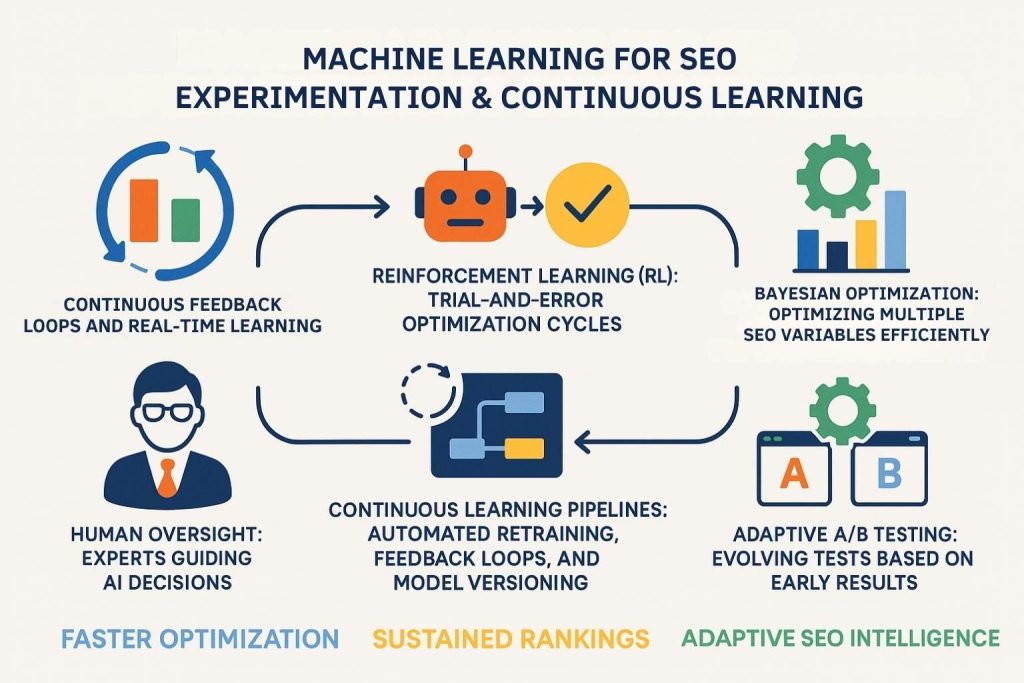

Machine Learning for SEO Experimentation and Continuous Learning

The Evolution from Static Optimization to Continuous Experimentation

Traditional SEO approaches rely on discrete testing cycles: implement, measure, adjust, repeat. Machine learning transforms this process into a continuous experimentation framework where models learn dynamically from each iteration.

Rather than waiting weeks or months to assess outcomes, ML-driven systems analyze results in real time, updating recommendations as soon as new data becomes available.

This shift enables:

- Faster feedback loops for on-page and off-page optimizations.

- Automated hypothesis validation, where models test multiple configurations simultaneously.

- Ongoing adaptability in response to search algorithm updates and user behavior changes.

Continuous learning ensures that SEO strategies remain agile and resilient, capable of self-improvement through real-world interaction data.

Applying Reinforcement Learning for SEO Testing

Reinforcement learning (RL) is a branch of AI that focuses on learning through trial and error. In SEO, RL models can test multiple optimization strategies simultaneously and identify the configurations that yield the highest performance metrics.

Examples of RL applications in SEO experimentation include:

- Meta title and description optimization: Testing combinations of phrasing, structure, and length to maximize CTR.

- Snippet selection: Determining which schema or structured data type generates the best visibility in SERPs.

- Internal link structure adaptation: Dynamically adjusting link priorities based on traffic flow and engagement.

- Content module testing: Evaluating which on-page components improve dwell time and conversions.

By defining rewards (e.g., improved CTR, higher ranking, reduced bounce), the RL model iteratively converges toward better-performing strategies. This reduces guesswork and can shorten the learning cycle considerably.
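The simplest reinforcement-learning setting is a multi-armed bandit: a single state and an immediate click-or-no-click reward. The sketch below applies Beta-Bernoulli Thompson sampling to three title variants, with the bandit swapped in as an illustration. The "true" CTRs are hypothetical and only drive the simulation. In a live SEO setting, each round would more realistically be a test period or a page group than a single impression, because search engines display whatever title they last crawled.

```python
import random


def thompson_sampling(true_ctrs, rounds=5000, seed=42):
    """Beta-Bernoulli Thompson sampling over title variants; returns trials served per variant."""
    rng = random.Random(seed)
    clicks = [0] * len(true_ctrs)
    misses = [0] * len(true_ctrs)
    for _ in range(rounds):
        # Draw a plausible CTR for each variant from its Beta(1 + clicks, 1 + misses) posterior
        samples = [rng.betavariate(1 + c, 1 + m) for c, m in zip(clicks, misses)]
        arm = samples.index(max(samples))
        # Simulated reward; in production this would be an observed click (1) or no click (0)
        if rng.random() < true_ctrs[arm]:
            clicks[arm] += 1
        else:
            misses[arm] += 1
    return [c + m for c, m in zip(clicks, misses)]


# Hypothetical true CTRs for three title variants (unknown to the algorithm)
served = thompson_sampling([0.02, 0.05, 0.20])
print(served)  # most trials should go to the third variant
```

Sampling from each posterior, rather than always picking the current best average, is what lets the bandit keep exploring uncertain variants while shifting traffic toward the strongest one.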

Bayesian Optimization for SEO Parameter Tuning

SEO decisions often involve trade-offs between multiple variables, such as content length versus load speed, or keyword density versus readability. Bayesian optimization provides a mathematically grounded method for navigating these trade-offs efficiently.

In SEO experimentation:

- The system treats each optimization variable (title length, heading density, etc.) as a parameter.

- The Bayesian model builds a probabilistic understanding of how each parameter affects outcomes.

- It then tests new configurations likely to yield the best improvement based on prior evidence.

Compared with exhaustive grid or random testing, this method typically needs far fewer tests to find an effective configuration. It’s particularly useful for multivariate testing in complex SEO environments where numerous interacting factors influence performance.
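The loop described above can be sketched with a Gaussian process (GP) surrogate and an upper-confidence-bound (UCB) acquisition rule. This is one common Bayesian optimization variant; others use expected improvement. The single parameter (title length), the response curve, the kernel length scale, and `kappa` are all hypothetical choices. The linear algebra is written out in plain Python only to keep the example self-contained.

```python
import math


def rbf(a, b, length=10.0):
    """Squared-exponential kernel: nearby parameter values are assumed to behave similarly."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(m):
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(m[i][i] - s) if i == j else (m[i][j] - s) / low[j][j]
    return low


def solve_lower(low, b):
    """Forward substitution for low @ x = b."""
    x = []
    for i, bi in enumerate(b):
        x.append((bi - sum(low[i][k] * x[k] for k in range(i))) / low[i][i])
    return x


def fit(xs, zs, noise=1e-4):
    """Factor the kernel matrix once per iteration; w = L^-1 z."""
    k = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    low = cholesky(k)
    return low, solve_lower(low, zs)


def predict(xs, low, w, x_new):
    """GP posterior mean and standard deviation at x_new."""
    v = solve_lower(low, [rbf(a, x_new) for a in xs])
    mean = sum(vi * wi for vi, wi in zip(v, w))      # k*^T K^-1 z
    var = max(1.0 - sum(vi * vi for vi in v), 1e-12)  # k(x,x) - k*^T K^-1 k*
    return mean, math.sqrt(var)


def ucb(xs, low, w, x_new, kappa):
    mean, std = predict(xs, low, w, x_new)
    return mean + kappa * std


def bayes_opt(objective, candidates, initial, n_iter=8, kappa=2.0):
    """GP-UCB loop: test the untested candidate with the highest upper confidence bound."""
    xs = list(initial)
    ys = [objective(x) for x in xs]
    for _ in range(n_iter):
        untested = [c for c in candidates if c not in xs]
        if not untested:
            break
        mu = sum(ys) / len(ys)
        sd = math.sqrt(sum((y - mu) ** 2 for y in ys) / len(ys)) or 1.0
        zs = [(y - mu) / sd for y in ys]  # standardize so the unit-variance prior fits the data
        low, w = fit(xs, zs)
        nxt = max(untested, key=lambda c: ucb(xs, low, w, c, kappa))
        xs.append(nxt)
        ys.append(objective(nxt))
    best = max(range(len(ys)), key=ys.__getitem__)
    return xs[best], ys[best], len(xs)


def simulated_ctr(title_length):
    """Hypothetical response curve that peaks at 55 characters (invented for illustration)."""
    return 0.05 - 0.00002 * (title_length - 55) ** 2


best_len, best_ctr, n_tests = bayes_opt(simulated_ctr, candidates=range(30, 81), initial=[35, 70])
print(best_len, round(best_ctr, 4), n_tests)
```

The `kappa` parameter controls the trade-off. Larger values favor exploring uncertain settings, and smaller values favor refining around the best result so far.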

A/B Testing Augmented by Machine Learning

While A/B testing remains a cornerstone of SEO experimentation, machine learning enhances its sophistication. Instead of running simple binary tests, ML models can:

- Analyze multivariate outcomes simultaneously across different audience segments.

- Detect contextual performance differences, such as how a change affects mobile vs. desktop users.

- Adjust sampling dynamically based on early performance signals, minimizing traffic wasted on underperforming variants.

This creates adaptive A/B testing systems that evolve as data accumulates, providing faster and more reliable results.
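Segment-level differences, such as mobile vs. desktop, can be checked with a two-proportion z-test on clicks and impressions for each segment. This is a minimal sketch with hypothetical counts. It treats each impression as an independent trial, which is a simplification. SEO split tests that randomize page groups rather than users generally call for other methods.

```python
import math


def two_proportion_z(clicks_a, impressions_a, clicks_b, impressions_b):
    """Two-sided z-test for a CTR difference between a control (a) and a variant (b)."""
    p_a, p_b = clicks_a / impressions_a, clicks_b / impressions_b
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p_value


# Hypothetical per-segment counts: (control clicks, control impressions, variant clicks, variant impressions)
segments = {"desktop": (100, 2000, 150, 2000), "mobile": (60, 1000, 60, 1000)}
for name, counts in segments.items():
    z, p = two_proportion_z(*counts)
    print(name, round(z, 2), round(p, 4))
```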

Continuous Learning Pipelines for SEO Models

A mature SEO ML infrastructure integrates continuous learning pipelines, ensuring that each optimization contributes to cumulative intelligence. These pipelines collect data from user interactions, SERP outcomes, and performance metrics to retrain models automatically.

Key features include:

- Automated retraining triggers: Initiated when ranking fluctuations exceed defined thresholds.

- Model version control: Maintaining historical models to evaluate improvements and regressions.

- Feedback integration: Using user engagement data (CTR, dwell time, conversion rate) as direct reinforcement signals.

- Closed-loop optimization: Where predictions directly inform the next cycle of testing and iteration.

Continuous learning transforms SEO from a cyclical process into an ongoing intelligence system that keeps refining itself as new data arrives.

Experimentation at Scale

For large organizations, running thousands of concurrent SEO tests manually is impossible. Machine learning enables experiment orchestration at scale by managing multiple hypothesis streams simultaneously.

With centralized experimentation frameworks:

- Models prioritize tests based on potential impact and data confidence.

- Automated monitoring flags experiments producing statistically significant results early.

- Historical test data feeds back into a repository that enhances future model accuracy.

This scalability ensures that even complex websites with extensive URL inventories can continuously evolve in sync with algorithmic changes.

The Role of Human Curators in Continuous SEO Learning

Although ML systems automate testing and optimization, humans remain essential to interpret outcomes and guide overall direction. Human experts identify whether certain “optimal” configurations align with brand strategy, user experience standards, or regulatory constraints.

Their involvement helps ensure that:

- Automation remains consistent with long-term marketing goals.

- Experiments respect editorial voice and design constraints.

- Unexpected results are properly validated before deployment.

The best-performing SEO organizations blend machine precision with expert judgment, achieving both scale and authenticity.

Benefits of Continuous Learning in SEO

Implementing ML-based continuous learning brings measurable advantages:

- Sustained ranking stability: Early detection and correction of performance degradation.

- Accelerated optimization: Reduced time between hypothesis and insight.

- Knowledge compounding: Each experiment contributes to organizational learning.

- Algorithmic adaptability: Models retrain as search engines evolve, preventing obsolescence.

Continuous learning ensures that SEO strategies remain evergreen, improving efficiency and performance with each iteration.

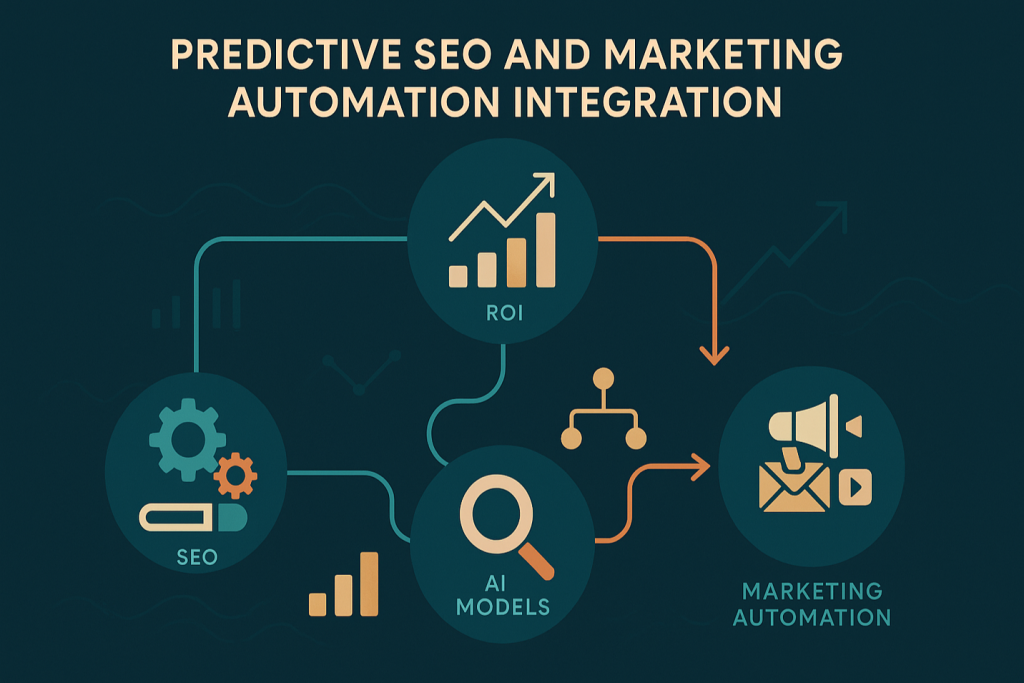

Cross-Disciplinary Convergence: SEO, Machine Learning, and Marketing Automation

Unifying Data Across Channels

Modern marketing ecosystems operate on integrated data flows rather than isolated channels. Machine learning in SEO becomes considerably more powerful when connected with broader marketing automation systems.

By unifying SEO data with analytics, CRM, and advertising platforms, teams can analyze not only how users find content but also how those users behave after discovery.

An integrated architecture allows:

- Bi-directional data exchange between SEO analytics and marketing automation platforms.

- Behavioral segmentation based on both organic search and conversion journey metrics.

- Attribution modeling that links keyword clusters to customer lifetime value.

- Dynamic content recommendations that personalize landing experiences according to search intent.

When SEO signals feed into the same ML models that guide email, paid search, or personalization campaigns, organizations achieve a 360-degree view of user engagement.

Machine Learning as the Intelligence Layer of Marketing Automation

Marketing automation platforms rely on rule-based workflows to deliver messaging and content. Machine learning replaces rigid rules with adaptive intelligence that predicts user needs and intent.

In practice, ML-powered automation systems can:

- Forecast optimal timing and channel distribution for organic and paid content.

- Identify cross-channel conversion patterns, revealing where SEO drives downstream engagement.

- Automatically trigger content or offer personalization based on predicted user value segments.

- Generate creative optimization insights by correlating keyword intent with message resonance.

This evolution transforms automation from static scheduling into a self-directed optimization engine capable of learning from every user interaction.

SEO as the Data Source for Predictive Marketing

SEO is uniquely positioned at the top of the customer acquisition funnel. Every query represents an intent signal that can inform broader marketing activities. Machine learning interprets these signals to forecast consumer demand, uncover emerging topics, and guide campaign strategy.

For example:

- Topic trend analysis: Identifying rising query clusters before competitors notice them.

- Intent-to-conversion mapping: Training models to predict which search intents are most likely to result in purchases or subscriptions.

- Audience expansion: Using entity embeddings from SEO data to find similar high-value segments for paid campaigns.
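The audience-expansion idea comes down to a nearest-neighbor search over embeddings. The sketch below ranks candidate segments by cosine similarity to a high-value seed segment. The three-dimensional vectors and segment names are hypothetical. Real entity embeddings typically have far more dimensions and would come from whatever embedding model a team uses.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def most_similar(seed_vector, candidates, top_k=2):
    """Rank candidate segments by cosine similarity to a high-value seed segment."""
    scored = [(name, cosine(seed_vector, vec)) for name, vec in candidates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:top_k]


seed = [0.9, 0.1, 0.3]  # hypothetical embedding of a high-converting query cluster
candidates = {
    "sales automation": [0.8, 0.2, 0.35],
    "crm pricing": [0.85, 0.05, 0.4],
    "free recipes": [0.05, 0.95, 0.1],
}
print(most_similar(seed, candidates))
```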

These insights flow into marketing automation systems, enabling data-driven creativity that anticipates market behavior rather than reacting to it.

Predictive Personalization Using SEO and Machine Learning

Combining SEO and machine learning creates the foundation for predictive personalization, delivering tailored content experiences before users articulate their needs.

By linking search behavior with CRM and on-site engagement data, ML models can:

- Predict which content categories a visitor is likely to explore next.

- Dynamically adjust landing page layouts and calls-to-action.

- Recommend related resources or products aligned with inferred interests.

- Score leads or visitors based on predicted conversion probability.

This unification of organic discovery and personalized delivery reduces friction, boosts engagement, and increases overall conversion efficiency.

Closed-Loop Measurement and Optimization

In a cross-disciplinary ecosystem, data flows continuously between SEO analytics and marketing automation. Machine learning systems evaluate this flow to optimize campaign timing, creative choices, and keyword strategy simultaneously.

A mature closed-loop framework includes:

- Shared KPIs between SEO, paid media, and CRM teams.

- Unified data warehouses where all performance signals are aggregated and normalized.

- Feedback systems that automatically adjust SEO priorities based on revenue contribution rather than rankings alone.

- Cross-channel reinforcement learning, where each touchpoint teaches the model how to improve future user journeys.

By integrating SEO into this closed loop, machine learning becomes the decision-making engine for the entire marketing lifecycle.

Strategic Implications for Organizations

Integrating machine learning, SEO, and marketing automation is not merely a technical exercise; it is a transformation of organizational structure.

To realize the benefits, teams must evolve toward interdisciplinary collaboration, aligning marketing, data science, and product strategy.

Key strategic shifts include:

- From siloed departments to data-driven ecosystems: Every channel informs and amplifies the others.

- From reactive optimization to predictive orchestration: ML systems anticipate opportunities and allocate resources proactively.

- From tactical reporting to strategic intelligence: SEO becomes a forecasting engine that shapes high-level decisions.

Organizations that master this convergence can achieve significant gains in efficiency and visibility, positioning SEO as a central pillar of their intelligent marketing architecture.

The Role of Strategic Partnerships

While in-house teams can implement foundational integrations, scaling a unified ML-driven marketing ecosystem requires advanced expertise. Specialized partners or agencies bring the data engineering, AI modeling, and multi-channel strategy needed to orchestrate these systems holistically.

Such collaboration accelerates deployment, ensures data integrity, and provides the cross-domain insight necessary to translate complex algorithms into measurable business results.

Final Reflections: Machine Learning as the Operating System of SEO

Machine learning and SEO are no longer separate domains. They coexist as parts of a single system that governs how information is discovered, ranked, and consumed. The integration of machine learning in SEO has elevated the field from manual optimization to intelligent orchestration, where adaptive algorithms continually refine digital performance.

Success now depends on understanding search not as a set of rules but as a dynamic network of learning systems. The professionals who thrive are those who can interpret, model, and align with these systems. As search continues to evolve, the collaboration between human creativity and algorithmic intelligence will define competitive advantage.

Machine learning SEO frameworks will continue to expand the limits of what optimization means, emphasizing precision, adaptability, and relevance. Organizations that invest in this understanding and partner strategically when needed will position themselves at the forefront of digital visibility and sustained growth.

RiseOpp’s Vision and the Next Phase of Machine Learning in SEO

At RiseOpp, we believe that mastering the mechanics of machine learning in SEO is essential, but equally important is the ability to execute it at scale. As a leading Fractional CMO and SEO services company, we partner with both B2B and B2C organizations across multiple industries to bring this vision to life. Our teams deploy proprietary frameworks built around our Heavy SEO methodology that enable ranking for tens of thousands of keywords over time, integrating deeply with branding, messaging, and multi-channel execution.

When you engage with us, we don’t simply hand over reports; we act as your extended marketing leadership. Within our Fractional CMO services, we cover everything from strategic development to team hiring and execution across SEO, GEO, PR, Google Ads, Meta, LinkedIn, TikTok, email, affiliate, and yes, the kind of data-driven, AI-enabled workflows we discuss in this article.

If you’re looking to move beyond traditional tactics and build an SEO architecture powered by machine intelligence, creative excellence, and measurable business outcomes, let’s connect. Reach out today for a complimentary consultation and discover how we can elevate your search performance and marketing maturity together.
