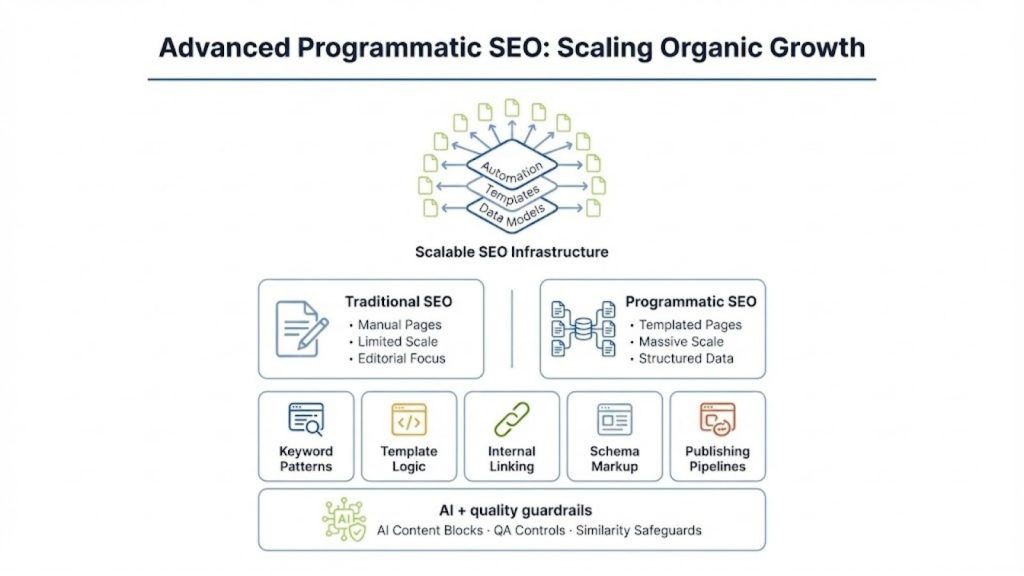

- Programmatic SEO uses templates, structured data, and automation to generate thousands of intent-aligned pages from repeatable keyword patterns.

- Success depends on data modeling, scalable publishing systems, internal linking, schema, and template-level optimization rather than individual page tweaks.

- AI should generate modular content blocks with strict QA, similarity controls, and human oversight to avoid thin or duplicate pages.

As someone who has worked with scalable content systems across industries, including SaaS, marketplaces, e-commerce, and publishing, I want to break down the advanced strategies behind programmatic SEO. This isn’t theory or fluff. It’s the real-world framework I’ve seen drive millions of incremental organic visits with fewer marginal resources.

We’re not talking about spinning pages or bloating your site. We’re talking about purpose-built, search-driven, data-backed content systems, designed to scale intelligently.

Let’s get into it.

What Is Programmatic SEO, and How It Actually Differs From Traditional SEO

I’ve noticed many teams treat programmatic SEO as a plug-and-play content hack. It’s not. It’s a fundamentally different operating model from traditional SEO, and one that demands a different mindset, team structure, and technical setup.

Here’s the key difference:

Traditional SEO is about crafting individual high-quality pages, one by one. Programmatic SEO is about building a scalable infrastructure that can generate thousands of high-value pages from structured data and reusable logic.

You create templates instead of writing pages. You analyze patterns instead of chasing one keyword at a time. You invest up front in systems that can create long-term leverage.

Here’s a breakdown of how they differ:

| | Traditional SEO | Programmatic SEO |
| --- | --- | --- |
| Production | Manual, hand-written pages | Templated pages generated from datasets |
| Scale | 10s to 100s of pages | 1,000s to 100,000s+ |
| Content | Unique articles, deep editorial | Structured data + generated content |
| Use Cases | Guides, blog posts, editorial content | Category pages, integration pages, location pages |
| Best for | Competitive “head” terms | Long-tail queries and large taxonomies |

To do this right, you’ll need to commit to building something sustainable. That includes a well-modeled dataset, a publishing pipeline, strict quality controls, and analytics that let you optimize at the template level.

The Goals of Programmatic SEO: What You’re Really Building Toward

When I lead programmatic SEO projects, I always ask the client one question: are you optimizing for traffic, conversions, coverage, or defensibility? Your answer will shape every decision downstream, from data architecture to content strategy.

Here are the most common (and advanced) goals I’ve seen in the field:

1. Capture Long-Tail Search Demand at Scale

This is the most obvious but still the most powerful. You can’t manually write pages for every query like “best CRM for lawyers in Chicago” or “black midi skirts under $40”, but you can generate them from a dataset.

These queries often convert better than head terms, because they represent specific, high-intent searches.

2. Create Conversion-Optimized Landing Pages Automatically

For many SaaS or e-commerce companies, programmatic SEO becomes a way to generate targeted landing pages for bottom-funnel queries like:

[Competitor] alternative

Best [tool] for [use case]

Top [product category] in [location]

Each of these can be structured into a template and generated dynamically, with the right combination of data, copy, and CTAs.

3. Build a Competitive Content Moat

This is where things get interesting. When you execute programmatic SEO before your competitors do, and you do it well, you lock in a structural advantage.

Think of Zapier’s integration pages or NerdWallet’s credit card comparison pages. If you’re the first to scale intelligently, you make it exponentially harder for others to catch up.

4. Expand Topical Authority and Internal Link Depth

I’ve seen programmatic SEO used to develop “supporting” content around pillar themes. These pages target the full taxonomy of variations, locations, categories, and intents, reinforcing the authority of core pages through internal linking.

Think:

- “Wedding venues in {city}”

- “Best cameras for {scenario}”

- “How to integrate {tool A} with {tool B}”

This is not just about page count. It’s about topical breadth and semantic interlinking.

5. Personalize by Segment, Location, or Intent

At the advanced level, I’ve built systems that generate pages not just by keyword, but by user persona:

- Industries (e.g., “CRM for Law Firms”)

- Roles (e.g., “Time Tracking for Freelancers”)

- Locations (e.g., “Business Checking in Austin, TX”)

Each one becomes a hyper-relevant landing experience, especially when paired with dynamic data and intent-matched CTAs.

Technical Foundations: What You Need Before You Write a Single Page

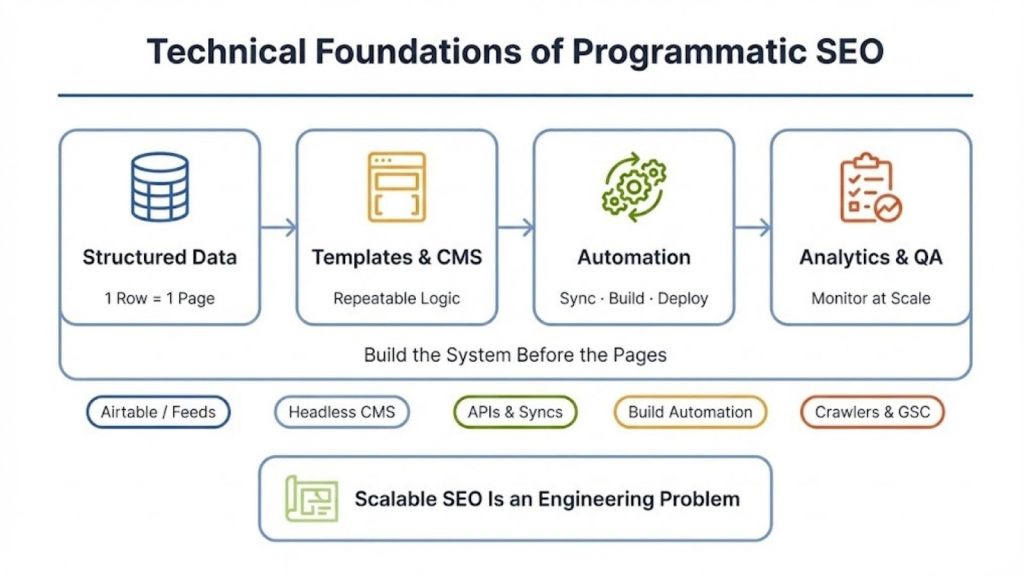

Programmatic SEO is 10% copy, 90% system design, relying heavily on automation-driven workflows that scale content creation, publishing, and updates. Before a single word gets generated or published, you need the right scaffolding.

Here’s what I recommend:

1. A Structured Data Source

This is your raw material. It could be:

- An Airtable base

- A product feed

- A city/location database

- A list of software integrations

- A spreadsheet with category attributes

Each row = one page. The columns hold variables: title, description, image URL, product type, location, etc.

And the quality of this data determines everything downstream. Incomplete, messy, or low-signal data = bad pages.

2. A Templating and Publishing Engine

Once you have your data, you need a way to turn each row into a real, crawlable, indexable webpage.

Options include:

- Webflow CMS (connected to Airtable via Whalesync or Make.com)

- Headless CMS (e.g., Sanity, Strapi) + Next.js or Nuxt

- Static site generators (e.g., Hugo, Jekyll, Gatsby, Eleventy)

- Custom scripts that generate HTML or markdown

No-code tools are fine up to a point (maybe 10k–20k pages), but at higher volumes, or when I need custom logic, I move to code-based systems.

3. Automation and Syncing Logic

You’re going to need automation at multiple points:

- Syncing data changes to your CMS

- Rebuilding and deploying pages

- Monitoring crawl/index status

- Integrating AI content generation, if used

Depending on your stack, you might use:

- Zapier or Make

- Whalesync for two-way Airtable sync

- Custom scripts with Airtable API or Google Sheets API

- CRON jobs or GitHub Actions for site builds

Automation isn’t a bonus; it’s the only way to keep hundreds or thousands of pages fresh and live without losing your mind.

4. Analytics and QA Stack

More pages = more risk. You’ll need:

- Crawlers like Screaming Frog to find broken links, duplicates, or missing tags

- Search Console API to track indexation at scale

- Analytics dashboards to compare performance by template or category

- Optional: server log analysis to understand crawl behavior

Also: document your system. Know where the data lives, how it’s used, and what triggers builds or updates.

Step-by-Step Programmatic SEO Implementation Workflow

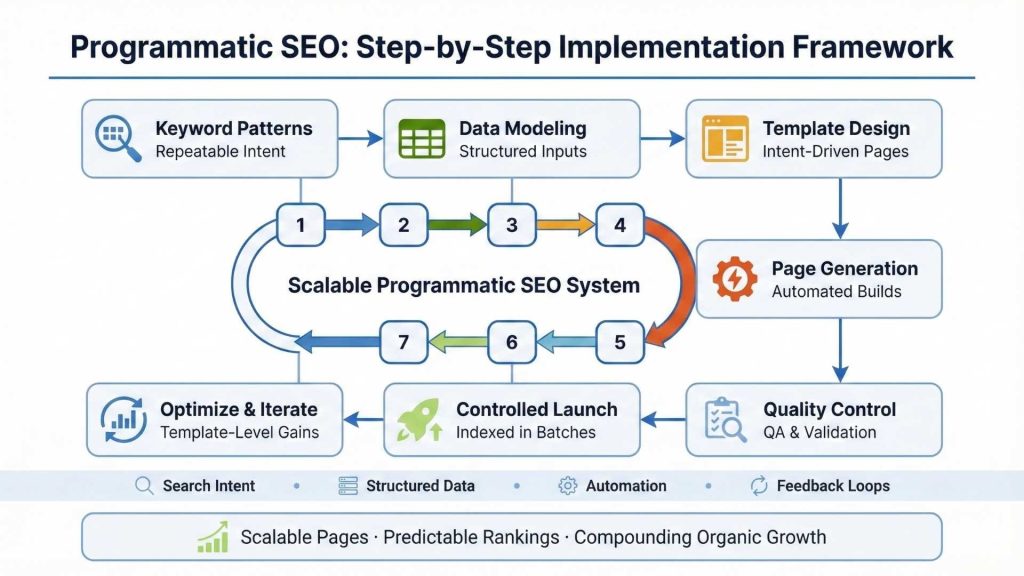

This is the framework I use when implementing programmatic SEO, whether it’s for a travel marketplace, a SaaS integration directory, or a product category system in e-commerce. It doesn’t matter what the vertical is; this process scales because it’s built on first principles.

I’m going to walk you through each phase in detail.

Step 1: Identify Repeatable Keyword Patterns

The entire system starts with keyword pattern discovery, not just keyword research.

Don’t think in terms of isolated queries like “best photo editing software.” Think in scalable patterns like:

best [product] for [use-case]

[X] alternatives

[product] in [city]

how to connect [tool A] to [tool B]

When I worked with a SaaS client, we reverse-engineered thousands of “how to integrate” and “best for X” variations from Ahrefs and internal search data. This gave us a taxonomy to scale against.

Tools I use here:

- Ahrefs or Semrush for pattern discovery

- Google Search Console to reverse engineer what you’re already ranking for

- Keyword Insights or LowFruits to cluster variations

- Internal site search logs (hugely underrated)

At this stage, I’m asking:

- Is the query pattern valid across 50+ variations?

- Does the intent stay consistent across those variations?

- Do I have, or can I get, the data to answer each variation?

If the answer is yes to all three, I lock it in as a scalable keyword theme.
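To make the idea of a pattern concrete, here is a minimal sketch that expands one pattern into every query it covers. It assumes the pattern is written with `{placeholder}` braces (rather than the square brackets used above) so Python's `str.format` can fill it; `expand_pattern` and the sample values are hypothetical names for illustration.

```python
from itertools import product


def expand_pattern(pattern: str, variables: dict[str, list[str]]) -> list[str]:
    """Return every query produced by filling the pattern's placeholders."""
    names = list(variables)
    queries = []
    # itertools.product yields one tuple per combination of variable values.
    for combo in product(*(variables[name] for name in names)):
        queries.append(pattern.format(**dict(zip(names, combo))))
    return queries


queries = expand_pattern(
    "best {product} for {use_case}",
    {
        "product": ["CRM", "invoicing software"],
        "use_case": ["lawyers", "freelancers"],
    },
)
for query in queries:
    print(query)
```

Counting the expanded list is a quick way to check the "50+ variations" question before committing to a pattern.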

Step 2: Build and Structure the Dataset

Next, I model the data.

I’m not just looking for CSVs to upload. I’m architecting a semantic database that powers templated content. This means:

- One row per page

- One column per content element (title, intro, feature list, CTA label, etc.)

- Bonus columns for metadata: SEO title, meta description, slug, image alt, etc.

For example, if I’m creating city-based landing pages for a marketplace, my columns might look like:

- City Name

- State

- Population

- Featured Listings

- Custom Intro Text

- SEO Title

- Meta Description

- URL Slug

If I’m building a SaaS integrations directory, it might be:

- Tool Name

- Integration Category

- Benefits Summary

- Example Use Case

- Top Use Case Links

- Logo URL

You get the idea. The more granular and clean your dataset, the more control you have over the final page output.
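As a rough illustration of "one row per page, one column per content element", the sketch below loads a CSV export into typed records and sets aside rows with missing required fields. The column names, the `CityPage` class, and the sample values (including the placeholder populations) are assumptions for the example, not a required schema.

```python
import csv
import io
from dataclasses import dataclass

# Fields a row must have before it is allowed to become a page (assumed list).
REQUIRED = ("city", "state", "seo_title", "slug")


@dataclass
class CityPage:
    city: str
    state: str
    population: int
    seo_title: str
    slug: str


def load_rows(csv_text: str) -> tuple[list[CityPage], list[str]]:
    """Parse CSV text into page records plus a list of row-level errors."""
    pages, errors = [], []
    # start=2 because line 1 of the file is the header row.
    for line_no, row in enumerate(csv.DictReader(io.StringIO(csv_text)), start=2):
        missing = [f for f in REQUIRED if not (row.get(f) or "").strip()]
        if missing:
            errors.append(f"line {line_no}: missing {', '.join(missing)}")
            continue
        pages.append(
            CityPage(
                city=row["city"].strip(),
                state=row["state"].strip(),
                population=int(row.get("population") or 0),
                seo_title=row["seo_title"].strip(),
                slug=row["slug"].strip(),
            )
        )
    return pages, errors


# Placeholder data: the population figures are dummy values.
SAMPLE = """city,state,population,seo_title,slug
Austin,TX,1000,Wedding Venues in Austin,wedding-venues-austin
Houston,TX,2000,,wedding-venues-houston
"""

pages, errors = load_rows(SAMPLE)
print(pages)
print(errors)
```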

I use Airtable for most MVPs because it lets me work with relational data and formulas, and has excellent integrations.

Step 3: Design the Page Template with Intent in Mind

Now comes the part that feels like “writing,” but is really template engineering.

Each page type needs:

- A clear H1 that aligns with the search query

- A compelling first paragraph that speaks to intent

- Data-driven sections (e.g., lists, comparisons, pros/cons, etc.)

- Trust elements (logos, ratings, reviews)

- Internal links to related pages

- Call-to-action, where relevant

The best programmatic pages don’t feel like they were mass-produced, even though they were, because user experience, layout, and intent matching are built directly into the template. They anticipate the reader’s intent and provide a satisfying, frictionless experience.

Here’s a common structure I use:

- Intro paragraph personalized to the query

- Data table or curated list

- Explanation or summary paragraph

- FAQs or contextual guidance

- Link to related pages

- Call to action

This can all be generated from data, but only if your template is flexible and your dataset rich.
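Here is a small sketch of what "generated from data" can look like, using only the standard library's `string.Template`. The markup, field names, and `render_page` helper are hypothetical; a real build would more likely use the templating layer of whatever CMS or framework you run.

```python
from html import escape
from string import Template

# A deliberately tiny template: H1, intro, curated list, call to action.
PAGE_TEMPLATE = Template(
    "<h1>$h1</h1>\n"
    "<p>$intro</p>\n"
    "<ul>\n$items\n</ul>\n"
    '<a href="$cta_url">$cta_label</a>\n'
)


def render_page(row: dict) -> str:
    """Fill the template from one dataset row, escaping every text value."""
    items = "\n".join(f"  <li>{escape(name)}</li>" for name in row["listings"])
    # substitute() raises KeyError if a placeholder has no value,
    # which surfaces incomplete rows instead of publishing them.
    return PAGE_TEMPLATE.substitute(
        h1=escape(row["h1"]),
        intro=escape(row["intro"]),
        items=items,
        cta_url=escape(row["cta_url"]),
        cta_label=escape(row["cta_label"]),
    )


html = render_page(
    {
        "h1": "Wedding venues in Austin",
        "intro": "Compare the top venues.",
        "listings": ["Venue A", "Barn & Vine"],
        "cta_url": "/cities/austin/venues",
        "cta_label": "Compare venues",
    }
)
print(html)
```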

Bonus: include schema.org markup at this stage. You can automate FAQ schema, product schema, location schema, and more across every page, directly from your data.

Step 4: Generate Pages via Automation

Once the template is ready and the data is structured, I trigger the build process.

Here’s where your stack matters. Depending on your setup:

- In Webflow, I’ll sync Airtable to the CMS via Whalesync or Make.com

- In Next.js, I’ll pull from a JSON or Airtable API at build time using getStaticProps

- In Jekyll/Hugo, I’ll loop over the data files and render HTML pages

You can also batch-generate pages in Google Sheets + GPT and then push them via API or CMS import if you’re running a no-code stack.

The build process needs to be:

- Repeatable (so you can refresh content)

- Resilient (can handle partial failures or missing data)

- Trackable (log what was published when)
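A minimal sketch of those three properties, assuming each row is a plain dict and `render` is a stand-in for your real template step. Both function names and the report shape are hypothetical.

```python
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("build")


def render(row: dict) -> str:
    # Stand-in renderer; raises KeyError when a required field is absent.
    return f"<h1>{row['title']}</h1><p>{row['intro']}</p>"


def build(rows: list[dict]) -> dict:
    """Render every row, skipping broken ones and recording what happened."""
    published, failed = {}, []
    for row in rows:
        slug = row.get("slug", "<no slug>")
        try:
            html = render(row)
        except KeyError as exc:
            # Resilient: one bad row must not stop the whole batch.
            failed.append((slug, f"missing field {exc}"))
            log.warning("skipped %s: missing field %s", slug, exc)
            continue
        # Trackable: keep a UTC timestamp next to each published page.
        published[slug] = {
            "html": html,
            "built_at": datetime.now(timezone.utc).isoformat(),
        }
    return {"published": published, "failed": failed}


report = build(
    [
        {"slug": "a", "title": "Page A", "intro": "Intro A"},
        {"slug": "b", "title": "Page B"},  # no intro, so this row is skipped
    ]
)
print(sorted(report["published"]), report["failed"])
```

Because `build` is a pure function of its input rows, rerunning it after a data change is what makes the process repeatable.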

Once everything’s rendered, I’ll publish in preview mode and manually QA a sample set before going live.

Step 5: Quality Control and Spot Checks

Here’s where most programmatic SEO projects fail: they skip quality control.

I run a few types of tests:

- Content review: read 10-20 random pages across different categories

- Screaming Frog crawl: check for missing titles, headings, links, or broken images

- Duplicate content scan: run similarity analysis across the set

- Accessibility check: headings, alt text, keyboard navigation

- Mobile UX test: some templates break on mobile when scaled

I’ve built internal tools for larger projects that auto-flag pages with under X words or missing primary keywords.

Step 6: Launch in Controlled Batches

Don’t launch 10,000 new URLs in one day. Google’s going to crawl them slowly anyway, and you’ll just create noise.

I prefer to:

- Launch 50–100 pages first (representative of different variations)

- Submit sitemap to Search Console

- Track indexation, rankings, and user behavior

- Scale up once signals are positive

Once you’ve validated indexation and engagement, ramp up your publishing volume.

Make sure each new batch is:

- Added to sitemaps

- Linked internally from category hubs

- Discoverable by Googlebot

Step 7: Monitor, Learn, and Iterate

This is not a one-and-done project. It’s a system.

I track performance by template, not just by page, using segmented SEO analytics that reveal patterns across thousands of URLs. I want to know:

- Which templates have the highest CTR and the lowest bounce?

- Which keyword patterns are driving conversions?

- Which types of pages are not being indexed?

I’ll tweak intros, add more FAQs, change how listings are displayed, all at the template level, and redeploy.

I also watch for “zombie pages” that Google crawls but doesn’t index. That tells me I may have thin or redundant content in some page sets.

Every few weeks, I re-crawl, re-score, and refine. That’s what turns a one-time project into a self-improving SEO machine.

Tools, Platforms, and Systems for Programmatic SEO Implementation

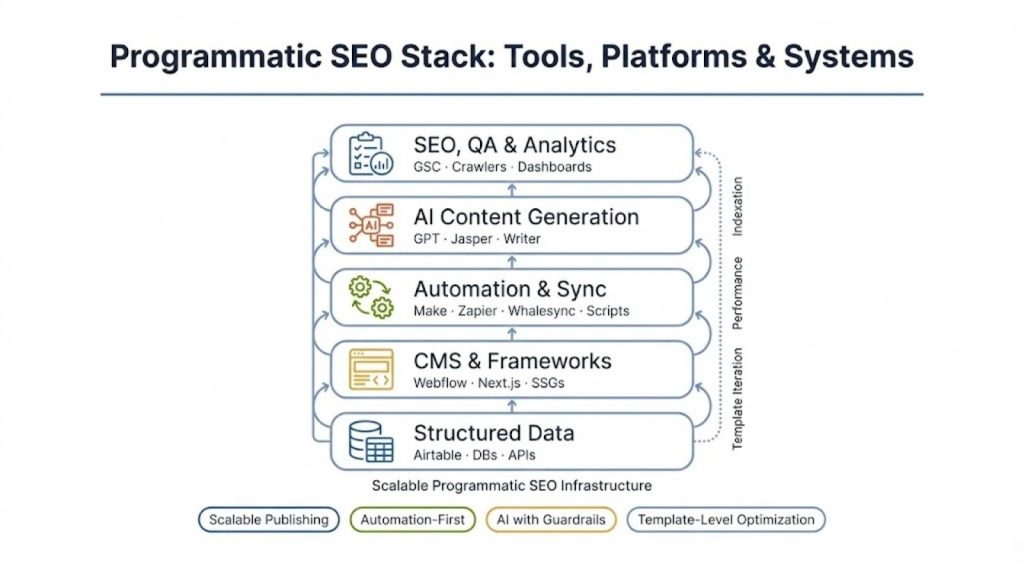

At the advanced level, you can’t rely on spreadsheets and copy-paste workflows. You need a well-integrated stack that supports scale, performance, and maintainability. Over the years, I’ve tested nearly every combination of CMS, database, generator, and sync platform, and here’s what I recommend for different stages and use cases.

CMS and Site Frameworks

The content management system (CMS) or frontend framework is your rendering engine. This is where data turns into real, crawlable pages.

1- Webflow CMS

Ideal for: Designers, MVPs, <10k pages

Pros:

- Visually intuitive for designers

- CMS supports up to 10k items

- Can be integrated with Airtable, Make, or Whalesync

- Good SEO control: slugs, metadata, schema via attributes

Cons:

- Not made for high-volume publishing

- CMS item limits can be restrictive

- No true staging environments for complex logic

If you’re starting out or want to validate a programmatic structure fast, Webflow is unbeatable. I’ve built integration directories, location pages, and use-case matrices in it with great success, until we hit the scaling ceiling.

2- Next.js (or Nuxt.js for Vue)

Ideal for: Scalable, headless builds with full control

Pros:

- Pull in data at build time from any source

- Full control over markup, schema, and routing

- Blazing fast performance with ISR or SSG

- Works great with Vercel or Netlify

Cons:

- Requires engineering resources

- Data freshness depends on your build pipeline

When I want full flexibility, I go with a headless CMS (like Sanity or Strapi) + Next.js and deploy via Vercel. This combo lets me scale to hundreds of thousands of pages while keeping page speed, indexability, and design quality high.

3- Static Site Generators (Jekyll, Hugo, Eleventy)

Ideal for: Developers, SEO-heavy content systems

Pros:

- Fast to generate

- Easy to host on GitHub Pages or Netlify

- Markdown-friendly

- Great version control

Cons:

- Limited CMS-like editing experience

- Not suited for dynamic user interactions

These are great if you’re technical and want something fast, lightweight, and low-cost. I’ve used Jekyll to run long-tail content portals with nearly zero overhead.

Databases and Data Sources

At the heart of every programmatic SEO system is structured data. Here’s what I use, depending on the complexity.

1- Airtable

Pros:

- Easy to use and visualize relationships

- Formula columns = dynamic content logic

- Integrates with most automation tools

- API access is strong

I use Airtable for MVPs, and I even stick with it long-term if the data model isn’t too complex. Think of it as Google Sheets with real relational capabilities.

2- PostgreSQL / MySQL

When working at true scale (e.g., e-commerce catalogs, location directories), I’ll move the data to a proper relational DB. Paired with a backend or headless CMS, this gives me full flexibility with joins, filters, and advanced queries.

3- External APIs

Sometimes, your data isn’t static. I’ve used APIs for:

- Real-time pricing or availability

- Weather, location, or local events

- App directories (e.g., pulling from Shopify API)

You can cache API data to hydrate your content and avoid latency issues during builds.

Automation and Syncing

Automation is essential. If you’re doing anything manually across more than 100 pages, you’re wasting resources and introducing errors.

1- Make (formerly Integromat)

My favorite for mid-level automation. It can:

- Trigger workflows from Airtable updates

- Push data into Webflow or other CMSs

- Handle conditional logic better than Zapier

2- Zapier

More beginner-friendly, great for:

- Simple CMS updates

- Sheet syncs

- Trigger-based content updates

3- Whalesync

Best for two-way syncs between Airtable and Webflow. It keeps your CMS items up to date with Airtable records.

4- n8n

More advanced and flexible, ideal for teams that need deeper control over automation workflows. Great for:

- Complex multi-step automations

- API-driven integrations

- Custom logic and data transformations

- Syncing multiple tools and systems at scale

5- Custom Scripts + CRON

For more advanced builds, I’ve written custom Node.js scripts that:

- Pull from APIs

- Process GPT-generated content

- Write to a Git repo

- Trigger builds or CMS updates

With CRON or GitHub Actions, this becomes your own fully automated pipeline.

AI + Content Generation Tools

You don’t need to write every sentence by hand, but you do need to control quality and voice. Here’s how I integrate AI responsibly.

1- OpenAI / GPT (via API)

Used for:

- Generating intro paragraphs

- Writing meta descriptions

- Filling in FAQ content

- Summarizing comparison data

I always run GPT outputs through logic and filters. For example:

- Use prompt engineering to keep tone consistent

- Apply formulas to ensure keywords are present

- Review or post-edit where needed

2- Writer.com / Jasper / Copy.ai

Useful for:

- Teams that want non-technical interfaces

- Brand voice control (Writer is best here)

- Templates for scalable content types

I prefer using GPT via API for flexibility and control, but tools like Jasper are helpful when I’m enabling non-technical contributors.

SEO, QA, and Analytics Tools

You’re managing large volumes of pages. You can’t afford to guess what’s working or breaking.

1- Screaming Frog / Sitebulb

Must-haves for crawling your generated pages. I use them to:

- Detect missing tags, errors, and redirects

- Spot thin content

- Monitor internal linking depth

2- Google Search Console (Bulk API)

This is where you see:

- Indexation status per page

- Impressions and clicks by template

- Coverage issues or crawl anomalies

I integrate this data with Looker Studio dashboards for templated views.

3- Looker Studio / Data Studio

Used for building dashboards that track:

- Page performance by keyword pattern

- Conversion rates by template

- Indexation trends

You need this to manage SEO at scale. Otherwise, you’re flying blind.

Bonus Tools

- Hexomatic: For bulk scraping or enrichment

- SheetBest: Turn Google Sheets into an API

- Parabola.io: Visual data workflows for non-coders

- Prerender.io: For rendering JS-heavy sites for bots

- Cloudflare Rules: For edge SEO and redirects at scale

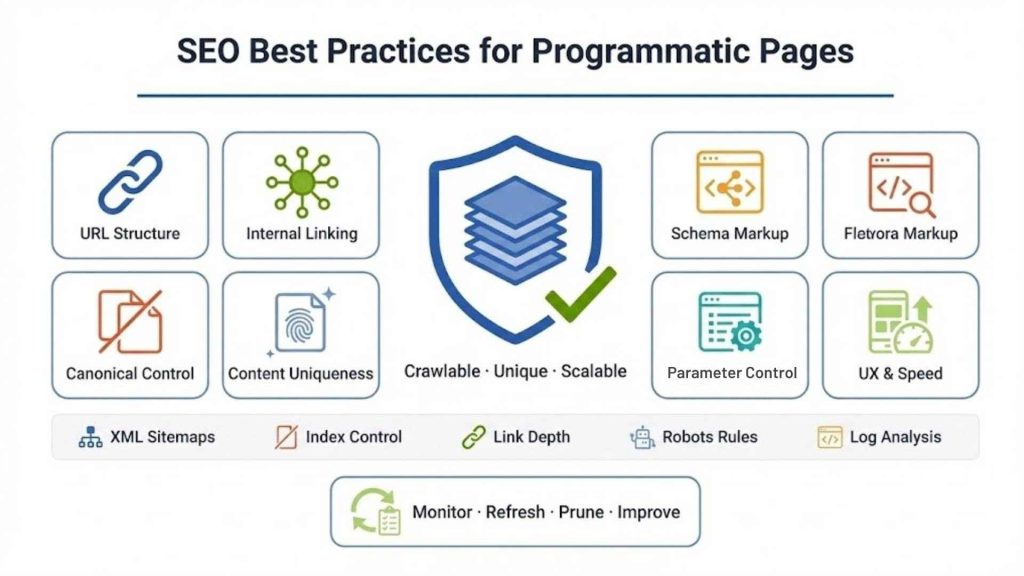

SEO Best Practices for Programmatic Pages

I’ve seen strong programmatic SEO systems get crushed because of technical oversights, duplicate content, bad linking logic, and poor crawlability. These issues don’t just hurt rankings; they destroy trust in the system you’ve built.

The good news is that most of these problems are preventable. You just have to bake best practices into your architecture from the start.

Let me break down the areas that matter most.

URL Structure: Clean, Predictable, and Query-Aligned

Your URLs should be:

- Short

- Human-readable

- Keyword-rich

- Consistent across the pattern

I use this simple rule: if you can’t guess what the page is about from the URL alone, it’s too complicated.

Examples:

✅ /tools/zapier-alternatives

✅ /sneakers/nike-men-black-size-11

❌ /page.php?id=8320&src=gpt_batch

If your site structure involves nested paths, make sure they mirror your data hierarchy:

/cities/new-york/restaurants

/products/electronics/laptops/dell

Most importantly, use the primary keyword in the slug. This still affects rankings and CTR.
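Consistent slugs are easiest to guarantee when one function produces all of them. The sketch below is one possible approach using only the standard library; `slugify` and `build_path` are hypothetical helpers, and the rule of collapsing every non-alphanumeric run into a single hyphen is an assumption you may want to adjust.

```python
import re
import unicodedata


def slugify(text: str) -> str:
    """Lowercase, strip accents, and join words with single hyphens."""
    ascii_text = (
        unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    )
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def build_path(*segments: str) -> str:
    """Build a nested URL path that mirrors the data hierarchy."""
    return "/" + "/".join(slugify(segment) for segment in segments)


print(slugify("Zapier Alternatives"))
print(build_path("cities", "New York", "Restaurants"))
```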

Internal Linking: The Backbone of Discoverability

Google discovers your pages through links. If you generate thousands of pages but fail to link to them, they might never get crawled, much less ranked.

My internal linking strategy has three layers:

1. Category → Page

From your high-authority “hub” pages, link down into the programmatic set. These links can be dynamically inserted:

- Lists of cities, tools, categories, etc.

- Tag-based modules

- “Related pages” widgets

2. Page → Category

Each programmatic page should link back up to its category. That way, link equity flows in both directions.

3. Peer-to-Peer

This is where most people stop. I also crosslink between pages that share attributes. For example:

- “Zapier Alternatives” links to “IFTTT Alternatives”

- “Wedding venues in Austin” links to “Wedding planners in Austin”

You can even automate these relationships using shared tags or fields in your dataset.
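One way to sketch that automation: treat each page's tags as a set and rank other pages by how many tags they share. The page paths, tags, and the `related_pages` function are made up for illustration.

```python
def related_pages(pages: dict[str, set[str]], slug: str, limit: int = 3) -> list[str]:
    """Return up to `limit` other pages, ranked by number of shared tags."""
    tags = pages[slug]
    scored = [
        (len(tags & other_tags), other)
        for other, other_tags in pages.items()
        if other != slug and tags & other_tags
    ]
    # Most shared tags first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [other for _, other in scored[:limit]]


PAGES = {
    "/tools/zapier-alternatives": {"automation", "alternatives"},
    "/tools/ifttt-alternatives": {"automation", "alternatives"},
    "/tools/make-integrations": {"automation"},
    "/cities/austin/wedding-venues": {"austin", "weddings"},
}

print(related_pages(PAGES, "/tools/zapier-alternatives"))
```

The stable sort order matters more than it looks: if related links reshuffle on every build, you create churn in your internal link graph for no benefit.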

Schema Markup: Structured Data at Scale

When you’re generating thousands of pages, you have a unique opportunity: you can implement schema programmatically, too.

Based on your template type, here’s what I typically use:

| Page Type | Recommended Schema |
| --- | --- |
| Location / service pages | LocalBusiness, PostalAddress |
| Product pages | Product, AggregateRating |
| SaaS integration pages | SoftwareApplication |
| FAQs | FAQPage, Question, Answer |
| Listicles | ItemList |

I populate schema fields from the same data source used to build the page. This keeps everything aligned and maintainable.
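As an example of populating schema from the same data, this sketch turns a list of question/answer pairs into FAQPage JSON-LD. The `faq_schema` and `to_script_tag` helpers and the sample question are hypothetical; the property names follow schema.org's FAQPage, Question, and Answer types.

```python
import json


def faq_schema(faqs: list[tuple[str, str]]) -> dict:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }


def to_script_tag(schema: dict) -> str:
    """Serialize the schema as a JSON-LD script tag for the page head."""
    # Escape "<" so answer text can never close the script element early.
    payload = json.dumps(schema).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'


schema = faq_schema(
    [("How are venues ranked?", "By rating, using the <b>data</b> on this page.")]
)
tag = to_script_tag(schema)
print(tag)
```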

Bonus: I validate schema with Google’s Rich Results Test in batch mode during QA crawls.

Canonical Tags and Duplicate Content

One of the biggest risks in programmatic SEO is accidental duplication. This often happens when:

- Multiple pages target similar queries with only minor differences

- URLs can be generated dynamically from filters (e.g., query parameters)

- You have inconsistent casing or slugs (/Zapier vs /zapier)

My safeguards:

- Every page has a self-referencing canonical tag

- I use canonical tags to de-duplicate filtered or paginated views

- I block unnecessary variants via robots.txt or meta noindex

- I regularly run similarity analysis using tools like Siteliner or Copyscape

Also: I consolidate content templates. If two query patterns are 90% semantically overlapping, I merge them into a single template and use variables to adjust tone or structure.
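A minimal sketch of the casing and parameter safeguards: normalize every URL before emitting its canonical tag. It assumes query strings on these pages only carry filters, sorts, or tracking, so dropping them is safe; if a parameter selects genuinely different content, this rule is wrong for that template. `canonical_url` and `canonical_tag` are hypothetical helpers.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url: str) -> str:
    """Lowercase host and path, drop trailing slash, query, and fragment."""
    parts = urlsplit(url)
    path = parts.path.lower().rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, "", ""))


def canonical_tag(url: str) -> str:
    """Return the link element to place in the page head."""
    return f'<link rel="canonical" href="{canonical_url(url)}">'


print(canonical_url("https://Example.com/Zapier/?sort=price#top"))
print(canonical_tag("https://example.com/tools/zapier-alternatives?page=2"))
```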

Content Uniqueness and Anti-Spam Controls

You don’t need 100% unique copy on every page, but you do need uniqueness in value.

Here’s how I build that in:

- Vary intros with GPT using controlled randomness (but stable structure)

- Include data or features unique to each row (e.g., “5 best venues in Austin” vs. “7 best venues in Houston”)

- Pull in user-generated data (reviews, ratings, questions) if available

- Use conditional logic to tailor copy sections based on data values

I also set up alerts or crawlers that flag:

- Pages with fewer than X words

- Pages with over 85% text similarity to others

- Pages with missing key elements (e.g., image, CTA, data block)

This lets me kill or rework low-quality pages before Google does.

Mobile and UX Optimization at Scale

At this scale, a design bug is not just annoying; it becomes 10,000 broken pages. I test all templates in:

- Chrome mobile emulator

- Safari iOS

- Low-end Android devices

And I check:

- Are cards and tables scrollable?

- Are CTAs accessible?

- Does text wrap correctly in all viewports?

One overlooked thing: page speed. Because programmatic pages often pull in dynamic data or large lists, they can get bloated fast. I audit them with:

- Lighthouse

- PageSpeed Insights

- WebPageTest

If anything falls below a 70 performance score, I dig into it.

Crawl Budget and Index Management

If Google can’t crawl your site efficiently, it won’t index your pages. And if you let it index garbage, it won’t trust your site.

Here’s what I do:

- Submit dynamic XML sitemaps for each page type (chunked to <50k URLs)

- Monitor index coverage in Search Console

- Use log file analysis (if possible) to see what’s being crawled

- Use meta noindex and robots.txt to block junk (sorts, filters, etc.)
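The sitemap chunking can be sketched in a few lines. The 50,000-URL ceiling comes from the sitemap protocol; the function names and the `example.com` URLs are placeholders, and a production version would also write a sitemap index file that lists each chunk.

```python
from xml.sax.saxutils import escape

MAX_URLS = 50_000  # per-file limit defined by the sitemap protocol


def chunk(urls: list[str], size: int = MAX_URLS) -> list[list[str]]:
    """Split the URL list into consecutive groups of at most `size`."""
    return [urls[i : i + size] for i in range(0, len(urls), size)]


def sitemap_xml(urls: list[str]) -> str:
    """Render one sitemap file; URLs are XML-escaped (e.g. & becomes &amp;)."""
    entries = "".join(f"  <url><loc>{escape(url)}</loc></url>\n" for url in urls)
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
        f"{entries}"
        "</urlset>\n"
    )


all_urls = [f"https://example.com/cities/city-{n}" for n in range(5)]
files = [sitemap_xml(group) for group in chunk(all_urls, size=2)]
print(len(files))
print(files[0])
```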

Most importantly: I optimize internal link depth. Every programmatic page should be within 2–3 clicks from the homepage or a crawlable hub.

Ongoing Maintenance and Content Refresh

You don’t get to “set and forget.” I build automated systems to:

- Flag pages with declining traffic

- Refresh GPT-generated content every 6–12 months

- Re-pull or update data if it’s price/inventory dependent

- Swap out listings or examples based on performance

In many systems, I add a “last updated” field and expose that on-page for freshness. I’ve seen this improve CTR and trust.

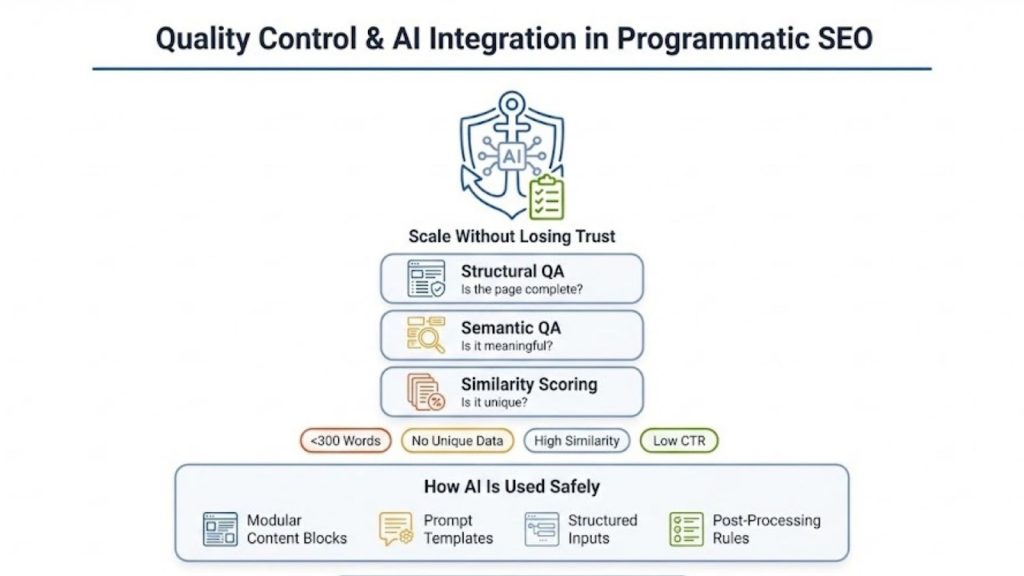

Quality Control, Thin Content, and AI Integration at Scale

When you’re generating pages programmatically, especially with AI, you walk a fine line between scalable value and mass-produced noise. Google has made it clear: it doesn’t care if content is human- or machine-written, as long as it’s helpful.

But if your programmatic system starts spitting out thin, repetitive, or manipulative content, you’re not just wasting resources. You’re actively hurting your site’s trust and visibility.

Let’s break this into two parts:

- How I enforce content quality control at scale

- How I integrate AI (especially GPT) into workflows safely and effectively

Quality Control in a Programmatic SEO System

You need quality gates, multiple ones. I never let a programmatic system go live without at least three layers of QA:

1. Structural QA (Is the page complete?)

This ensures every generated page contains:

- A valid H1

- A populated intro

- A unique meta title and description

- At least one internal link

- At least one content section with data

I automate checks for this using:

- Airtable filters (for missing fields)

- Screaming Frog (for missing tags)

- Custom crawlers or APIs
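For the custom-crawler route, here is a rough sketch of a structural check built on the standard library's `HTMLParser`. It assumes "a valid H1" means exactly one `<h1>` and that internal links use root-relative `href` values; it only checks for presence, so uniqueness of titles and descriptions still needs a comparison across the full page set. The `PageAudit` class and `audit` function are hypothetical.

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Collect the structural signals needed for a completeness check."""

    def __init__(self) -> None:
        super().__init__()
        self.h1_count = 0
        self.has_title = False
        self.has_meta_description = False
        self.internal_links = 0

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "h1":
            self.h1_count += 1
        elif tag == "title":
            self.has_title = True
        elif tag == "meta":
            if attributes.get("name") == "description" and attributes.get("content"):
                self.has_meta_description = True
        elif tag == "a" and (attributes.get("href") or "").startswith("/"):
            self.internal_links += 1


def audit(html: str) -> list[str]:
    """Return a list of structural problems; an empty list means it passed."""
    parser = PageAudit()
    parser.feed(html)
    problems = []
    if parser.h1_count != 1:
        problems.append("expected exactly one <h1>")
    if not parser.has_title:
        problems.append("missing <title>")
    if not parser.has_meta_description:
        problems.append("missing meta description")
    if parser.internal_links == 0:
        problems.append("no internal links")
    return problems


GOOD = """<html><head><title>Wedding venues in Austin</title>
<meta name="description" content="Compare wedding venues in Austin."></head>
<body><h1>Wedding venues in Austin</h1><a href="/cities/austin">Austin</a></body></html>"""

print(audit(GOOD))
print(audit("<html><body><p>Hello</p></body></html>"))
```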

2. Semantic QA (Is the page meaningful?)

Not every page deserves to exist, even if it technically renders. I use logic like:

- Does this city have enough listings to warrant a page?

- Does this keyword variation have search volume?

- Is the data block empty or generic?

If a page is weak, I:

- Exclude it from generation

- Merge it into a parent category

- Redirect it to a higher-value equivalent

3. Similarity Scoring

I calculate a similarity score between pages based on:

- Intro paragraph

- Section headings

- Page structure

Pages with >85% text similarity get reviewed or dropped.

If you’re running a system with AI-generated content, similarity scoring becomes critical. Otherwise, you risk unintentional duplication, even with GPT involved.
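A simple way to prototype similarity scoring is `difflib.SequenceMatcher` from the standard library, compared word by word. This is a sketch rather than the tooling described above: the pairwise loop grows quadratically with page count, so large sets would need a cheaper technique, and the sample texts are invented.

```python
from difflib import SequenceMatcher
from itertools import combinations

THRESHOLD = 0.85  # matches the review cutoff mentioned above


def similarity(a: str, b: str) -> float:
    """Word-level similarity between two texts, from 0.0 to 1.0."""
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


def flag_similar(pages: dict[str, str], threshold: float = THRESHOLD):
    """Return (slug_a, slug_b, score) for every pair above the threshold."""
    flagged = []
    for (slug_a, text_a), (slug_b, text_b) in combinations(pages.items(), 2):
        score = similarity(text_a, text_b)
        if score > threshold:
            flagged.append((slug_a, slug_b, round(score, 2)))
    return flagged


INTROS = {
    "austin": "Find the best wedding venues in Austin with pricing and reviews",
    "houston": "Find the best wedding venues in Houston with pricing and reviews",
    "crm": "Compare CRM tools for law firms",
}

print(flag_similar(INTROS))
```

The Austin and Houston intros differ by one word out of eleven, which is exactly the kind of city-swap duplication this check is meant to surface.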

Avoiding Thin Content

Thin content is any page that adds no unique value. It might be:

- Too short

- Too generic

- Too similar to other pages

- Lacking data, insights, or relevance

I use these thresholds:

- <300 words = flag

- No unique data blocks = flag

- Duplicate listings across multiple pages = flag

- CTR from search <0.5% = flag

Then I either:

- Kill the page

- Redirect it

- Regenerate it with improved prompts or more data
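Those thresholds translate directly into a small rule function. In this sketch the `PageStats` fields are assumptions about what your crawl and Search Console exports provide, and the CTR rule is skipped for pages with no impressions, since a ratio is meaningless there.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    slug: str
    word_count: int
    unique_data_blocks: int
    shares_listings_with_other_pages: bool
    impressions: int
    clicks: int


def thin_content_flags(page: PageStats) -> list[str]:
    """Apply the four thresholds and return the reasons a page was flagged."""
    flags = []
    if page.word_count < 300:
        flags.append("under 300 words")
    if page.unique_data_blocks == 0:
        flags.append("no unique data blocks")
    if page.shares_listings_with_other_pages:
        flags.append("duplicate listings")
    # 0.005 is 0.5% expressed as a fraction of impressions.
    if page.impressions > 0 and page.clicks / page.impressions < 0.005:
        flags.append("search CTR below 0.5%")
    return flags


weak = PageStats("weak-page", 250, 0, False, 1000, 3)
healthy = PageStats("healthy-page", 800, 2, False, 1000, 40)
print(thin_content_flags(weak))
print(thin_content_flags(healthy))
```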

Remember: the total number of pages means nothing if 80% are low quality. It’s better to have 2,000 valuable pages than 20,000 pages of index bloat.

Integrating GPT and AI in Programmatic SEO

Now let’s talk about generative AI. Yes, GPT is a powerful tool, but most teams misuse it in programmatic SEO.

Here’s how I use it responsibly and effectively:

Use AI for Content Blocks, Not Entire Pages

I rarely generate full pages with GPT. Instead, I generate modular elements:

- Custom intro paragraphs tailored to variables (e.g. “Zapier alternatives for small businesses”)

- Meta descriptions (with fallbacks)

- Summaries or product blurbs

- Comparison points pulled from structured data

This approach gives me more control, higher quality, and fewer hallucinations.

Use Prompt Engineering for Contextual Accuracy

I don’t just feed GPT a location and ask for a city guide. I build prompts like:

“Write a 2–3 sentence introduction for a page listing the best CRM software for real estate agents. Use a confident, professional tone. Highlight the importance of lead tracking and contact segmentation.”

I also pass context as system instructions, such as:

- Brand voice

- Page structure

- Known data fields

This drastically improves the consistency of output.
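Here is a sketch of how a prompt like that can be assembled from a dataset row instead of written by hand. The templates, field names, and `build_messages` function are hypothetical, and the role/content message list is the common chat format rather than any specific client's required shape. No API call is made here.

```python
SYSTEM_TEMPLATE = (
    "You write short introductions for landing pages. "
    "Brand voice: {voice}. Page structure: {structure}. "
    "Only mention facts found in the provided data fields."
)

USER_TEMPLATE = (
    "Write a 2-3 sentence introduction for a page listing the best "
    "{product} for {audience}. Highlight the importance of {highlights}."
)


def build_messages(row: dict, voice: str, structure: str) -> list[dict[str, str]]:
    """Assemble system and user messages from one dataset row."""
    return [
        {
            "role": "system",
            "content": SYSTEM_TEMPLATE.format(voice=voice, structure=structure),
        },
        {
            "role": "user",
            "content": USER_TEMPLATE.format(
                product=row["product"],
                audience=row["audience"],
                highlights=" and ".join(row["highlights"]),
            ),
        },
    ]


messages = build_messages(
    {
        "product": "CRM software",
        "audience": "real estate agents",
        "highlights": ["lead tracking", "contact segmentation"],
    },
    voice="confident, professional",
    structure="intro, comparison table, FAQs",
)
for message in messages:
    print(message["role"], ":", message["content"])
```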

Fine-tuning or Prompt Templates

If you’re generating thousands of variations, you’ll want:

- A few tested prompt templates (by use case)

- Optional fine-tuning for brand style or product phrasing

- Output checks via regex or logic formulas

I’ve even built systems where GPT outputs go through a post-processor that:

- Strips fluff

- Checks for keyword presence

- Enforces paragraph length
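A post-processor along those lines might look like the sketch below. The fluff phrase list, the three-sentence cap, and the `post_process` function are all assumptions for illustration; the sentence splitter is deliberately naive and will mis-split on abbreviations.

```python
import re

# Stock openers to strip; extend this list from your own QA samples.
FLUFF = re.compile(
    r"\b(?:in today's fast-paced world|when it comes to|it's no secret that)\b,?\s*",
    re.IGNORECASE,
)


def post_process(text: str, keyword: str, max_sentences: int = 3):
    """Strip fluff, cap the sentence count, and report any problems found."""
    cleaned = FLUFF.sub("", text).strip()
    if cleaned:
        # Re-capitalize in case a stripped opener began the text.
        cleaned = cleaned[0].upper() + cleaned[1:]
    sentences = re.split(r"(?<=[.!?])\s+", cleaned)
    problems = []
    if len(sentences) > max_sentences:
        problems.append(f"trimmed from {len(sentences)} to {max_sentences} sentences")
        sentences = sentences[:max_sentences]
    result = " ".join(sentences)
    if keyword.lower() not in result.lower():
        problems.append(f"missing keyword: {keyword}")
    return result, problems


raw = (
    "In today's fast-paced world, real estate agents need a CRM that tracks leads. "
    "It should segment contacts. It should sync with email. It should be cheap."
)
text, problems = post_process(raw, keyword="CRM")
print(text)
print(problems)
```

Returning the problems alongside the text, rather than raising, lets the pipeline decide whether to publish, regenerate, or route the block to a human.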

What I Avoid with GPT

- Generating content without structured inputs

- Letting GPT make up features or benefits

- Relying on GPT for factual accuracy (always pair with data)

- Publishing anything without sampling and QA

GPT is a force multiplier. But if you use it blindly, it becomes a liability.

Human Oversight Is Not Optional

Even with great prompts and logic, I still spot-check every template batch before publishing. You wouldn’t deploy code without QA, and GPT-generated content is no different.

I review:

- 5–10 pages per content type

- Examples from edge cases (e.g., low data volume, unusual variable combinations)

- Any outliers flagged by similarity or word count checks

This lets me catch issues before Google does.

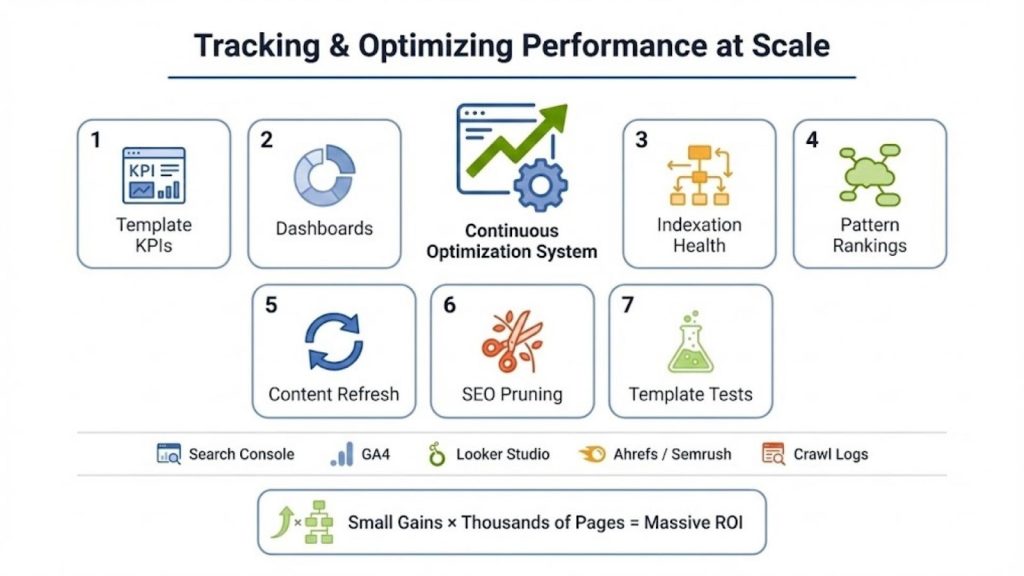

Tracking and Optimizing Performance at Scale

The most successful programmatic SEO systems aren’t just well-built. They’re well-managed. I’ve seen sites explode in traffic after launch, not because they published more pages, but because they iterated intelligently on what was already live.

To do that, you need systems for monitoring, analysis, and continuous optimization.

Step 1: Define Success Metrics by Template

Every page set should have clear KPIs, and those KPIs should match the search intent the template was designed for.

Here’s how I break it down:

| Template Type | Primary Metric | Secondary Metric |
| --- | --- | --- |
| Product list pages | Organic sessions | CTR, time on page |
| Location/service pages | Clicks to partner/CTA links | Bounce rate, scroll depth |
| Location / service pages | Local conversion (leads, calls) | Geo-CTR, bounce rate |
| Integration / SaaS pages | Clicks to signup/integration | Engagement, link clicks |

Every page generated from a template inherits that KPI focus. This allows me to compare performance horizontally, across 100s or 1,000s of pages, and identify winners and losers by structure, not by individual title.

Step 2: Create Segmented Dashboards

I use Looker Studio (formerly Google Data Studio) with connectors like:

- Google Search Console (via API)

- GA4 (for engagement/conversions)

- Airtable (for metadata)

My dashboards are segmented by:

- Page template (e.g., all /tools/* or /cities/*)

- Content cohort (e.g., batch 1 vs. batch 2)

- Page age (e.g., <30 days, 30–90 days, etc.)

- Keyword pattern (e.g., “best X for Y” vs. “X vs Y”)

This gives me visibility into:

- Which templates are driving traffic

- Which patterns are underperforming

- How performance changes over time

I also track the impression-to-index ratio (the share of indexed pages that actually earn impressions) to see whether Google is struggling to surface certain page types.
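The same segmentation can be prototyped outside a dashboard. The following is a rough sketch that assumes you have exported per-page rows (URL path, clicks, impressions) from Search Console; the URL patterns and field names are placeholders, not a real connector schema.

```python
import re
from collections import defaultdict

# Hypothetical URL patterns; replace with your own template paths.
TEMPLATE_PATTERNS = [
    ("tools", re.compile(r"^/tools/")),
    ("cities", re.compile(r"^/cities/")),
]

def template_for(path):
    """Map a URL path to the template that generated it."""
    for name, pattern in TEMPLATE_PATTERNS:
        if pattern.search(path):
            return name
    return "other"

def summarize_by_template(rows):
    """rows: dicts with 'page' (URL path), 'clicks', 'impressions'."""
    totals = defaultdict(lambda: {"pages": 0, "clicks": 0, "impressions": 0})
    for row in rows:
        bucket = totals[template_for(row["page"])]
        bucket["pages"] += 1
        bucket["clicks"] += row["clicks"]
        bucket["impressions"] += row["impressions"]
    for bucket in totals.values():
        # Guard against templates that have no impressions yet.
        bucket["ctr"] = bucket["clicks"] / bucket["impressions"] if bucket["impressions"] else 0.0
    return dict(totals)
```

Adding a cohort or page-age key to the grouping follows the same shape: compute the segment label per row, then aggregate.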

Step 3: Monitor Indexation and Crawlability

Even if you build great pages, Google might not index them, especially if your internal linking is weak or content is thin.

Here’s what I monitor:

- Pages submitted vs indexed (from Search Console)

- Crawl stats from log files (if available)

- URL discovery from XML sitemaps

- Indexing delays by page template

If a template has less than 50% indexation after 30 days, something’s off: maybe thin content, duplicate patterns, or weak internal links.
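That threshold check is easy to automate. Here is a minimal sketch, assuming you can join each published URL with its template, publish date, and an indexed/not-indexed status pulled from Search Console; the field names are illustrative.

```python
from collections import defaultdict
from datetime import date

def low_indexation_templates(pages, today, min_age_days=30, threshold=0.5):
    """Return {template: indexation_rate} for templates below the threshold.

    pages: dicts with 'template', 'published' (date), 'indexed' (bool).
    Pages younger than min_age_days are ignored as too new to judge.
    """
    counts = defaultdict(lambda: [0, 0])  # template -> [indexed, total]
    for page in pages:
        if (today - page["published"]).days < min_age_days:
            continue
        counts[page["template"]][1] += 1
        if page["indexed"]:
            counts[page["template"]][0] += 1
    return {
        template: indexed / total
        for template, (indexed, total) in counts.items()
        if indexed / total < threshold
    }

# Example call with a single old, unindexed page.
example = [{"template": "cities", "published": date(2024, 1, 1), "indexed": False}]
flagged = low_indexation_templates(example, today=date(2024, 6, 1))
```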

Tools I use:

- Screaming Frog (with Search Console + GA integrations)

- Sitebulb (for crawl graphs and depth analysis)

- JetOctopus or Oncrawl (for large-scale log analysis)

Step 4: Track Rankings by Keyword Pattern

I don’t track individual keywords. Instead, I group them by intent and template:

- “best [product] for [use case]”

- “[tool] alternatives”

- “[service] in [location]”

Then I use Ahrefs or Semrush to monitor performance by group. If I see “alternatives” pages performing 5x better than “best for X” pages, I investigate:

- Is the query more commercial?

- Is the template better optimized?

- Is there more competition in one cluster?

This level of insight lets me refine copy, page structure, and internal linking by pattern, not just by guesswork.
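Grouping queries by pattern can be done with a few regular expressions before the data ever reaches a rank tracker. This is an illustrative sketch only: the pattern names and regexes are assumptions, and real query sets will need more rules and some manual review.

```python
import re
from collections import Counter

# Order matters: the first matching pattern wins.
PATTERNS = [
    ("alternatives", re.compile(r"\balternatives?\b")),
    ("best_for", re.compile(r"^best .+ for .+")),
    ("service_in_location", re.compile(r".+ in .+")),
]

def classify_query(query):
    """Assign a search query to a keyword pattern group."""
    q = query.lower().strip()
    for name, pattern in PATTERNS:
        if pattern.search(q):
            return name
    return "unclassified"

def clicks_by_pattern(rows):
    """rows: iterable of (query, clicks) pairs. Returns total clicks per pattern."""
    totals = Counter()
    for query, clicks in rows:
        totals[classify_query(query)] += clicks
    return totals
```

Watching the size of the "unclassified" bucket is a useful sanity check: if it grows, the patterns no longer describe what people are searching for.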

Step 5: Continuous Content Refresh

Every few months, I run a batch refresh cycle. It’s especially important if:

- You’re using AI-generated intros or summaries

- Product listings or data feeds change regularly

- The queries are freshness-sensitive (e.g., pricing, tools, offers)

Here’s what gets refreshed:

- Content blocks (GPT re-runs with new context)

- Meta titles (if CTR < 1%)

- Listings or data tables (auto-synced or re-scraped)

- FAQ or supporting content

I usually set the “last updated” date dynamically from the updated_at field in Airtable or the CMS, and expose it both in schema and on the page.
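As a rough sketch of that last step, the snippet below turns a record's updated_at value into a schema.org dateModified property plus a visible date string. The record shape (`url`, `title`, `updated_at`) and the function name are assumptions; your Airtable or CMS fields will differ.

```python
import json
from datetime import datetime, timezone

def last_updated_markup(record):
    """Build JSON-LD and a visible label from a record's updated_at timestamp.

    record: dict with 'url', 'title', and 'updated_at' (timezone-aware datetime).
    """
    updated = record["updated_at"].astimezone(timezone.utc)
    schema = {
        "@context": "https://schema.org",
        "@type": "WebPage",
        "url": record["url"],
        "name": record["title"],
        "dateModified": updated.isoformat(),
    }
    json_ld = '<script type="application/ld+json">' + json.dumps(schema) + "</script>"
    visible = "Last updated: " + updated.date().isoformat()
    return json_ld, visible
```

Only bump updated_at when the page content actually changes, so the date shown to users and crawlers stays truthful.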

Step 6: Deindex, Redirect, or Consolidate Underperformers

Not every programmatic page is worth keeping forever. If a page:

- Has 0 clicks in 90 days

- Isn’t indexed after multiple fetches

- Gets <0.2% CTR

- Has thin or low-value content

…then I either:

- Deindex it (meta noindex)

- Redirect to a better-performing sibling

- Merge into a parent or category page

This protects your crawl budget and keeps your site high-signal. Think of it as SEO pruning: absolutely necessary at scale.
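These rules can be encoded as a small decision function. The sketch below uses the thresholds listed above (0 clicks in 90 days, not indexed, CTR under 0.2%, thin content); the 150-word definition of "thin" and the order in which actions are preferred (redirect, then merge, then noindex) are assumptions for illustration, not a fixed policy.

```python
def is_underperformer(page, min_ctr=0.002, min_words=150):
    """page: dict with 'clicks_90d', 'impressions_90d', 'indexed', 'word_count'."""
    impressions = page["impressions_90d"]
    ctr = page["clicks_90d"] / impressions if impressions else 0.0
    return (
        page["clicks_90d"] == 0
        or not page["indexed"]
        or ctr < min_ctr
        or page["word_count"] < min_words
    )

def pruning_action(page, sibling_url=None, parent_url=None):
    """Return (action, target_url) for a page.

    Assumed preference: redirect to a sibling if one exists, otherwise merge
    into the parent, otherwise fall back to a meta noindex.
    """
    if not is_underperformer(page):
        return ("keep", None)
    if sibling_url:
        return ("redirect", sibling_url)
    if parent_url:
        return ("merge", parent_url)
    return ("noindex", None)
```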

Step 7: Run Experiments at the Template Level

Because every page in a programmatic system shares a template, I can run A/B-style experiments at the layout level.

For example:

- Change H1 format from “Best X for Y” → “Top 10 X Tools for Y”

- Add CTA block after paragraph 2

- Swap list layout from grid → table

Then I compare:

- CTR in GSC (impressions vs clicks)

- Session duration and bounce

- Conversion metrics (signups, clicks)

Even a 0.5% lift across 5,000 pages can add up to hundreds of incremental leads or conversions per month, depending on how much traffic each page earns. Multiply that over time, and it becomes one of your highest-leverage activities.
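To judge whether a CTR difference between two page groups is more than noise, a two-proportion z-test is one common screen. The sketch below assumes pages were split into a control group and a variant group and that you have total clicks and impressions for each; impressions are not truly independent trials, so treat the z-score as a rough signal rather than proof.

```python
import math

def ctr_lift(control_clicks, control_impr, variant_clicks, variant_impr):
    """Return (absolute CTR lift, z-score) for variant vs. control."""
    p_control = control_clicks / control_impr
    p_variant = variant_clicks / variant_impr
    # Pooled click rate under the assumption that both groups share one CTR.
    pooled = (control_clicks + variant_clicks) / (control_impr + variant_impr)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control_impr + 1 / variant_impr))
    z = (p_variant - p_control) / se if se else 0.0
    return p_variant - p_control, z
```

A z-score above roughly 1.96 corresponds to the conventional 95% two-sided threshold. Run the test over the same date range for both groups so seasonality affects them equally.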

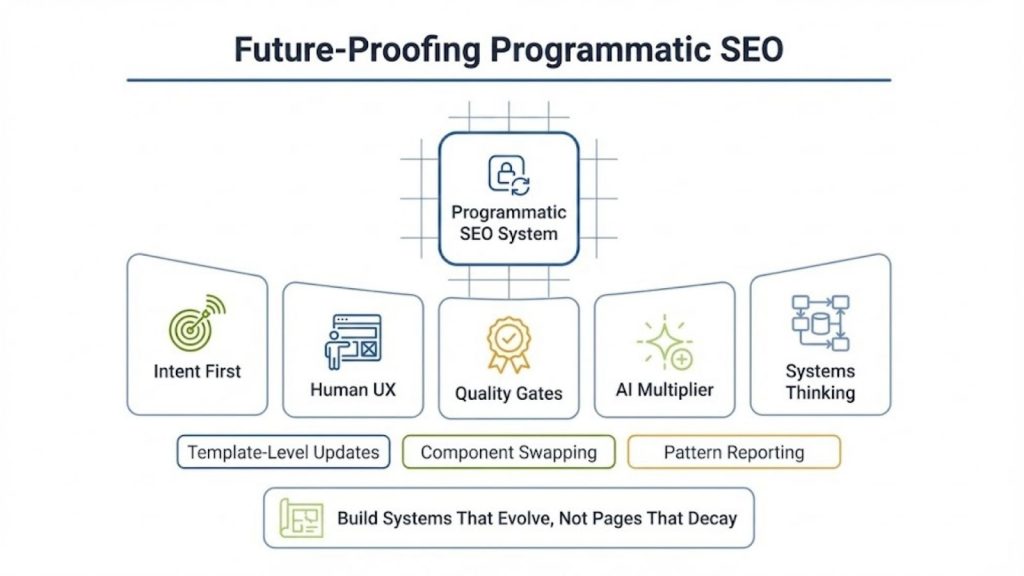

Future-Proofing Your Programmatic SEO Strategy

If you’re playing the long game, and you should be, here’s how I future-proof my systems.

1. Build Around Intent, Not Just Volume

Chasing search volume feels good on a spreadsheet, but it’s not where modern SEO wins are coming from. Most of the conversion-ready traffic lives in low-volume, high-intent queries, the kind Google increasingly prioritizes.

For example, a page targeting “best CRM for legal firms with HIPAA compliance” may only show 30 searches/month in traditional tools. But it can convert 10x better than a head term like “best CRM.”

So I map out intent-rich keyword patterns first (“X for Y,” “X alternatives for Z,” “how to do X with Y”), then plug those into scalable templates. This ensures that what I’m building has enduring business value, not just rank-chasing appeal.

The algorithm may change, but user problems won’t.

2. Design for Human Use First

Too many programmatic pages are technically crawlable but practically useless. If I had to summarize 90% of failed programmatic SEO projects, I’d say this:

They were built for robots, not readers.

So I design every template for human experience:

- Clean structure: Headings, sections, and content hierarchy that makes sense at a glance

- Snappy load times: A bloated template multiplies one slow page into thousands

- Useful content: Real answers, real data, real clarity, not just fluff

I assume someone will land on that page from Google, read it in 10 seconds, and decide whether to trust the brand. That lens changes everything.

3. Control for Quality, Not Just Quantity

It’s easy to get caught in the “page count” game, especially when you see competitors bragging about how they pushed out 20,000 URLs in a sprint.

But I’ve learned the hard way: every page you publish is a vote for or against your site’s credibility in Google’s eyes.

That’s why I build in multiple layers of quality control:

- Programmatic QA to catch incomplete or broken templates

- Similarity scoring to avoid content redundancy

- Performance rules to automatically deindex or consolidate poor performers

I’d rather launch 2,000 excellent pages than 10,000 mediocre ones. And when I do scale, I scale with confidence, not with cleanup costs.
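Similarity scoring can start very simply. The sketch below compares pages by Jaccard overlap of three-word shingles; the shingle size, the 0.8 threshold, and the function names are assumptions. It compares every pair of pages, so it only suits small batches; at tens of thousands of pages, an approximate method such as MinHash is the usual substitute.

```python
from itertools import combinations

def shingles(text, size=3):
    """Return the set of overlapping word n-grams in a text."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def jaccard(a, b):
    """Jaccard similarity of two texts' shingle sets (0.0 to 1.0)."""
    sa, sb = shingles(a), shingles(b)
    if not sa and not sb:
        return 1.0  # two empty pages are identical
    return len(sa & sb) / len(sa | sb)

def near_duplicates(pages, threshold=0.8):
    """pages: dict of url -> body text. Returns (url1, url2, score) at or above threshold."""
    results = []
    for (url1, text1), (url2, text2) in combinations(pages.items(), 2):
        score = jaccard(text1, text2)
        if score >= threshold:
            results.append((url1, url2, score))
    return results
```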

4. Use AI as a Multiplier, Not a Crutch

I love using GPT and other LLMs, but only as strategic accelerators. They help me:

- Scale personalized content blocks

- Vary intros based on input context

- Condense structured data into readable summaries

But they don’t replace strategy, human tone, or truth.

I never let GPT run wild. Every prompt is carefully engineered, tested across edge cases, and monitored post-deployment. And if content is meant to build authority, trust, or expertise, I keep it human-written or tightly reviewed.

Future algorithm updates will continue targeting helpfulness, authenticity, and precision. Letting AI do too much, too blindly, is how you end up in Google’s crosshairs.

5. Think in Systems, Not Pages

This one’s foundational.

Programmatic SEO is not about building pages. It’s about building a system that creates, maintains, and evolves thousands of assets the way you would maintain software.

So I ask:

- Can I update all intros with one prompt tweak?

- Can I refresh all CTAs or schema in a single logic pass?

- Can I split-test templates at scale and measure impact?

If not, I’m not building for the long haul. I’m building a maintenance nightmare.

Every part of your system, from CMS architecture to content generation workflows, should support iteration at scale. That’s the only way you stay agile in a world where both user behavior and search engine rules evolve faster than your roadmap.

Bottom line: SEO is no longer about pages. It’s about systems. The teams that win in the next five years will be the ones who treat SEO like engineering, not like copy-pasting blog posts into a CMS.

Frequently Asked Questions (FAQ)

How long does it take for programmatic SEO pages to rank?

It depends on your domain authority, internal linking, content quality, and crawl efficiency. On average, we start seeing meaningful impressions within 2–4 weeks post-indexation for well-built systems. Competitive queries or new domains can take several months.

Can programmatic SEO work for smaller websites or startups?

Yes, if done right. Smaller sites can start with a few dozen or hundred pages focused on a niche. The key is to build value-dense templates and avoid the temptation to scale prematurely. MVP-style programmatic SEO can be powerful for startups with clear positioning.

What are the risks of using AI-generated content at scale?

The biggest risks are:

- Duplicate or near-duplicate content

- Factual inaccuracies or hallucinations

- Off-brand tone

- Low helpfulness signals (as judged by Google)

Mitigating these requires tight prompt engineering, strong QA pipelines, and careful human review. GPT is a tool, not a substitute for editorial strategy.

How do I structure internal linking if my programmatic pages span multiple categories or geographies?

Use a multi-layered approach:

- Crosslink by shared attribute (e.g., same category, same city)

- Build hub pages that group related entities (e.g., “Top Marketing Tools” → “Zapier Alternatives”, “HubSpot Integrations”)

- Link dynamically within copy using named entities and tags

This not only supports crawlability but also adds context and relevance for users and search engines alike.
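Crosslinking by shared attribute is straightforward to generate from the page dataset. The following is a minimal sketch, assuming each page record carries a `category` and a `city` field (hypothetical names); it links each page to others that share either value, capped at a fixed number of links.

```python
from collections import defaultdict

def related_links(pages, attributes=("category", "city"), limit=5):
    """Return {url: [related urls]} based on shared attribute values.

    pages: dicts with 'url' plus the attribute fields named in `attributes`.
    """
    # Index pages by (attribute, value) so lookups don't rescan the whole list.
    index = defaultdict(list)
    for page in pages:
        for attr in attributes:
            value = page.get(attr)
            if value:
                index[(attr, value)].append(page["url"])

    links = {}
    for page in pages:
        related = []
        for attr in attributes:
            for url in index.get((attr, page.get(attr)), []):
                if url != page["url"] and url not in related:
                    related.append(url)
        links[page["url"]] = related[:limit]
    return links
```

Attributes earlier in the tuple take priority when the cap is hit, so order them by how relevant the relationship is to the user.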

How do I prevent thin or duplicate content when generating thousands of pages?

Build in:

- Similarity scoring

- Word count thresholds

- Template-level logic (conditional copy, modular blocks, variable depth)

- Unique data points per page (reviews, numbers, ratings, prices, etc.)

Always test content variation across edge cases: pages with very little data, ambiguous variables, or overlapping topics.
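A pre-publish gate for thin pages might look like the sketch below. It checks a word-count floor, unresolved template placeholders, and missing data points. The 150-word floor, the placeholder syntax, and the required field names are assumptions chosen for illustration.

```python
import re

# Matches leftover template variables such as {{city}} or [PRODUCT] (assumed syntax).
PLACEHOLDER = re.compile(r"\{\{.*?\}\}|\[[A-Z_]+\]")

def qa_issues(page, min_words=150, required_fields=("price", "rating")):
    """Return a list of issue labels; an empty list means the page passes.

    page: dict with 'body' (rendered text) and 'data' (values fed to the template).
    """
    issues = []
    if len(page["body"].split()) < min_words:
        issues.append("below_word_count")
    if PLACEHOLDER.search(page["body"]):
        issues.append("unresolved_placeholder")
    missing = [f for f in required_fields if page["data"].get(f) in (None, "")]
    if missing:
        issues.append("missing_data:" + ",".join(missing))
    return issues
```

Pages that return any issue can be held back from publishing or routed into the manual review sample.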

What’s the best way to measure SEO ROI from programmatic pages?

Track ROI by template or pattern, not just page.

- Organic traffic segmented by template

- Conversion metrics tied to CTAs or goal completions

- Indexed vs. non-indexed page ratio

- Keyword clusters (e.g., “best for X”, “X alternatives”) performance over time

Look for velocity and compounding growth, not just instant rankings.

Should I host programmatic SEO pages on a subdomain or the main domain?

Almost always, the main domain is preferable. Google has historically treated subdomains more like separate entities, which means:

- Slower authority transfer

- Separate indexing signals

- Harder to integrate internal linking effectively

Use subdomains only if technically necessary (e.g., legacy CMS separation, legal reasons).

How does RiseOpp help with programmatic SEO specifically?

RiseOpp provides strategic oversight and execution support across:

- Programmatic keyword mapping

- Data infrastructure planning

- AI-powered content generation

- Custom internal linking frameworks

- QA systems and indexation monitoring

We blend our Heavy SEO methodology with modern automation tools and a Fractional CMO-level strategy to ensure you’re not just ranking pages, you’re growing intelligently.

Final Thoughts

Programmatic SEO isn’t a shortcut; it’s a discipline. When you do it right, it becomes a compounding growth engine that no blog strategy can match.

You’re building infrastructure that scales:

- Across keywords

- Across content types

- Across time

If you’re serious about executing this, build slowly, test early, and optimize forever.

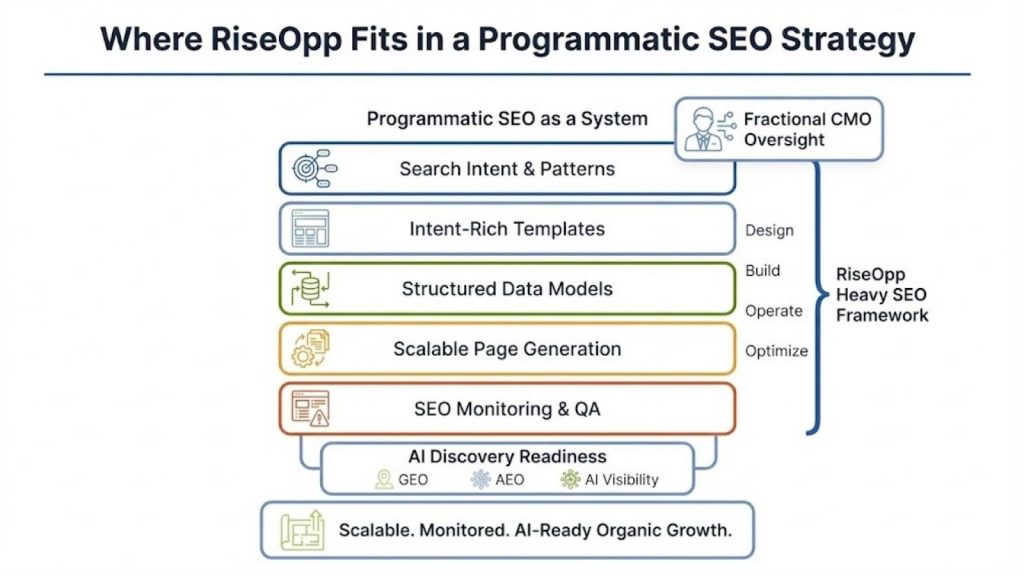

Where RiseOpp Fits Into a Programmatic SEO Strategy

Programmatic SEO only works when strategy, systems, and execution are tightly aligned. Templates without intent fall flat. Automation without oversight creates risk. Scale without monitoring erodes trust.

Most teams don’t fail because the concept is flawed; they fail because the infrastructure behind it is incomplete.

At RiseOpp, we approach programmatic SEO as an engineered growth system, not a content shortcut.

Our proprietary Heavy SEO methodology is built specifically for this kind of scale. We work with companies to:

- Identify defensible, long-tail keyword patterns

- Design intent-rich, user-first page templates

- Model clean, structured datasets

- Build automated pipelines that produce thousands of SEO-ready pages without sacrificing quality

And we don’t stop at deployment. Every system we implement is supported by ongoing SEO monitoring, so we surface and solve issues with indexation, crawlability, and performance before they become liabilities.

As search shifts toward AI-driven discovery, we extend our systems beyond classic rankings. Our work in GEO, AEO, and AIVO ensures that programmatically generated pages are not just crawlable, but structured and semantically clear for reuse in AI Overviews, answer boxes, and LLM-powered assistants. This means your content can earn visibility not just in Google Search, but in the future of AI-driven discovery.

For companies that need experienced leadership to drive all of this, our Fractional CMO services provide senior ownership across SEO, content systems, marketing team building, and revenue-aligned strategy. We operate as a true extension of your executive team, connecting programmatic SEO with broader growth, positioning, and go-to-market goals.

If you’re serious about building a scalable, future-proof organic growth engine, the next step isn’t pushing more pages live. It’s designing the right system, and doing it with clarity, discipline, and long-term leverage.

If you’d like to explore how this framework applies to your business, the most productive next step is a strategic conversation, focused on your search landscape, your data, and where scale will actually pay off.
